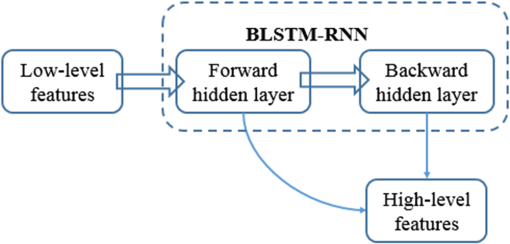

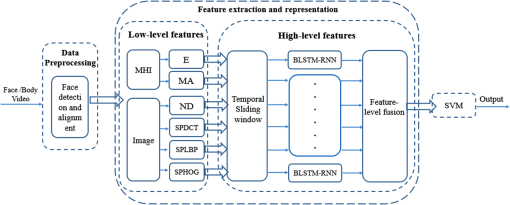

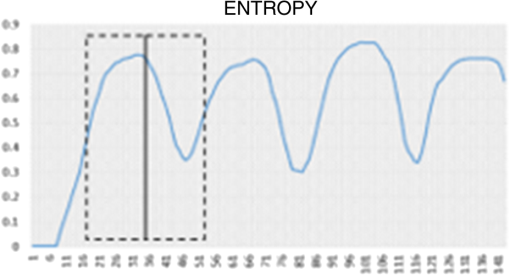

1. Introduction

Human behavior recognition is an important subject in the field of pattern recognition and is of great interest for human–computer interaction. Although constrained by physiology, the temporal dynamics of human behavior, e.g., facial movements or body gestures, are described by four phases: neutral, onset, apex, and offset [1]. Different phases have different manifestations and intensities. Some researchers have shown that action unit (AU) activation detection [2] and genuine (spontaneous) emotion recognition [3] both benefit from different temporal segments. Temporal segments have also been proven beneficial for emotion recognition, especially for multimodal emotion recognition combining facial expression, body expression, voice, etc. [4–11] Therefore, the temporal segment detection of human behavior warrants further exploration.

The work introduced in this letter offers the following contribution: to our knowledge, we are the first to introduce bidirectional long short-term memory recurrent neural networks (BLSTM-RNN) for the automatic detection of the temporal phases of human behavior. A high-level feature that simultaneously contains temporal–spatial information is learned with the BLSTM-RNN method, which synthesizes both local and global temporal information, and it shows outstanding performance.

The remainder of this letter is organized as follows. Section 2 introduces related work. Section 3 provides details of the overall methodology. Section 4 describes the experiments and the experimental results. Finally, the conclusion is given in Sec. 5.

2. Related Work

In this section, we review existing methods related to temporal segment detection.
A number of studies have detected temporal segments using hand-crafted temporal rules or classification schemes such as support vector machines (SVM) [12] and hidden Markov models (HMMs) [13]. Pantic and Patras [14,15] used temporal rules they devised to detect the temporal segments of facial AUs. Focusing on bimodal affect recognition, Gunes and Piccardi [10] proposed a method to automatically detect the temporal segments of facial movements and body gestures, which includes both frame- and sequence-based strategies. An HMM was applied as the sequence-based classifier, and several algorithms provided in the Weka tool, including SVM, AdaBoost, and C4.5, were used as frame-based classifiers. Jiang et al. [2] used HMMs to detect the temporal segments of facial AUs. Chen et al. [16] designed two features to describe face and gesture information and then used an SVM to segment the expression phases. However, the segmentation accuracy (ACC) of the existing methods still leaves room for improvement.

Recently, deep learning methods have become very popular in the computer vision community, and BLSTM-RNN is one of the state-of-the-art machine-learning techniques. In this letter, to synthesize the local and global temporal–spatial information more efficiently, we present an automatic temporal segment-detection framework that uses BLSTM-RNN [17] to learn high-level temporal–spatial features. The framework is evaluated in detail on the face and body (FABO) database [18]. The experimental results in Sec. 4 show that the proposed framework outperforms other state-of-the-art methods for temporal segment detection.

3. Methodology

This section presents the details of our method. Figure 1 shows an overview of the proposed method, which consists of two major parts: (1) data preprocessing and (2) feature extraction and representation. We use SVM as the classifier.

Fig. 1 Flow chart of the proposed method to detect temporal segments of body gesture and facial movement over the FABO database.

3.1. Data Preprocessing

Face detection is a very important step in the pipeline of facial movement temporal segment detection because it directly affects the effectiveness of the feature extraction. Before extracting features with methods such as the discrete cosine transform (DCT) [19], the local binary pattern (LBP) [20], the pyramid histogram of oriented gradient (PHOG) [21], the entropy [22], the motion area (MA) [16], and the neutral divergence (ND) [16], we follow the methods of Refs. 23–26 to detect and align the face. All frames are aligned to a base face through affine transformation and cropped to a fixed size.

3.2. Feature Extraction and Representation

3.2.1. Low-level features

We extract the entropy and MA based on the motion history image (MHI). An MHI is a static image template that helps in understanding the motion location and path as it progresses [27]. As is common for facial movements, we extract six low-level descriptors: the sum of pyramid LBP (SPLBP), the sum of pyramid two-dimensional DCT (SPDCT), the sum of PHOG (SPHOG), the entropy, MA, and ND. For body gestures, only the entropy, MA, and ND are extracted as low-level descriptors. Brief introductions can be found in Refs. 16 and 19–22.

3.2.2. High-level features

After the first step, we obtain several low-level features. Additional temporal information is obtained by learning high-level features in the time domain with the BLSTM-RNN method and a feature-level fusion strategy, which together synthesize the local and global temporal information. Because the input of a BLSTM-RNN must be a sequence, we first employ a fixed-size temporal window centered at the current frame. Figure 2 shows some examples. In our experiments, we set the temporal window size equal to that of Chen et al. [16] Therefore, each low-level feature vector has the dimensionality of the temporal window size.
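As a minimal sketch of the fixed-size temporal window described above, the following Python turns a per-frame scalar descriptor into one window per frame, centered at that frame. The function name `temporal_windows`, the window size of 3, the sample values, and the edge-replication padding at the sequence boundaries are all assumptions for illustration; the text does not state how boundary frames are handled, and the actual window size is the one taken from Chen et al.

```python
def temporal_windows(values, window_size):
    """Return one fixed-size window per frame, centered at that frame.

    `values` holds one scalar low-level descriptor per frame.
    Frames near the sequence boundaries are padded by repeating the
    first/last value (an assumption; the source states no padding policy).
    """
    if window_size < 1 or window_size % 2 == 0:
        raise ValueError("window_size must be a positive odd integer")
    if not values:
        return []
    half = window_size // 2
    padded = [values[0]] * half + list(values) + [values[-1]] * half
    return [padded[i:i + window_size] for i in range(len(values))]


# Hypothetical per-frame motion-area values for a six-frame clip.
motion_area = [0.0, 0.1, 0.4, 0.9, 0.5, 0.1]
windows = temporal_windows(motion_area, window_size=3)
for frame_index, window in enumerate(windows):
    print(frame_index, window)
```

Each returned window has exactly `window_size` entries, which matches the statement that every low-level feature vector has the dimensionality of the temporal window size.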
These feature vectors are then used as input for the BLSTM-RNN.

Fig. 2 Examples of the feature representation of the current frame, built from the frames within a fixed-size temporal window centered at the current frame.

For the BLSTM-RNN, we use an implementation in Theano [28]. Figure 3 shows the network structure for the high-level features. First, we use the low-level features as input for the BLSTM-RNN and compute the forward hidden sequence $\overrightarrow{h}$ and the backward hidden sequence $\overleftarrow{h}$, respectively. We then link them by concatenation. Next, we apply a feature-level fusion strategy to all of the extracted high-level features, which is implemented by concatenating all feature vectors. Finally, we employ SVM [29] as the classifier to detect temporal phases.

4. Experiments

4.1. Experimental Setup

We conduct the experiments on the bimodal face and body database FABO [18]. This database consists of face and body recordings captured simultaneously with two cameras. So far, it is the only bimodal database that has both expression annotation and temporal annotation. We choose videos in which the ground truth expressions from the face camera and the body camera are identical. Among them, 129 videos were used for training and 119 videos were used for testing. We select the ACC as the measure to evaluate the results. The ACC is calculated as

$$\mathrm{ACC} = \frac{1}{n}\sum_{i=1}^{n} P_i, \qquad P_i = \frac{TP_i}{TP_i + FP_i},$$

where $n$ denotes the number of temporal phase categories, $P_i$ denotes the precision of the $i$'th temporal phase class, and $TP_i$ and $FP_i$ denote the number of correct classifications and the number of wrong classifications in the $i$'th temporal phase class, respectively.

4.2. Experimental Results

In this section, we compare the ACC in percent under different conditions.

4.2.1. Result of feature-level fusion

In this part, we apply three methods for feature-level fusion, and the best one is used for the remaining experimental results. Table 1 shows the result of feature-level fusion from features of body gestures using BLSTM-RNN with a softmax classifier.
In the table, BLSTM(1) denotes using the output of the first BLSTM layer as features; BLSTM(2) denotes using the output of the second BLSTM layer as features; BLSTM(1+2) denotes concatenating the outputs of the first and second BLSTM layers as the final features; ALL(before) denotes first concatenating all low-level features and then feeding the combined vector into a BLSTM-RNN; and ALL(after) denotes first feeding each low-level feature into a BLSTM-RNN and then concatenating all high-level features. The results in this table indicate that the most accurate result is 95.20. It is obtained by feeding each single feature into a BLSTM-RNN, concatenating the outputs of the first and second layers as high-level features, and then applying softmax as the classifier.

Table 1 Result of feature-level fusion from features of body gestures using BLSTM-RNN with softmax classifier.
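The difference between ALL(before) and ALL(after) can be illustrated with a small sketch. It is not the network used in the experiments: a toy tanh recurrence with arbitrary constant weights stands in for each LSTM direction, only a single layer is modeled (so the BLSTM(1+2) layer concatenation is omitted), and the names `run_rnn`, `bidirectional_features`, `fuse_before`, and `fuse_after` as well as the sample values are hypothetical.

```python
import math


def run_rnn(sequence, w_in=0.8, w_rec=0.5):
    """Toy tanh recurrence over a sequence of input vectors.

    Stand-in for one LSTM direction; the weights are arbitrary
    constants, not learned parameters.
    """
    hidden, states = 0.0, []
    for vector in sequence:
        hidden = math.tanh(w_in * sum(vector) + w_rec * hidden)
        states.append(hidden)
    return states


def bidirectional_features(sequence):
    """Concatenate the forward and backward hidden sequences."""
    forward = run_rnn(sequence)
    # Run over the reversed sequence, then restore the original frame order.
    backward = run_rnn(sequence[::-1])[::-1]
    return forward + backward


def fuse_before(descriptor_windows):
    """ALL(before): stack the descriptors per time step, then encode once."""
    stacked = [list(step) for step in zip(*descriptor_windows)]
    return bidirectional_features(stacked)


def fuse_after(descriptor_windows):
    """ALL(after): encode each descriptor separately, then concatenate."""
    fused = []
    for window in descriptor_windows:
        fused.extend(bidirectional_features([[value] for value in window]))
    return fused


# Hypothetical windows (length 3) for the three body-gesture descriptors.
entropy_w = [0.2, 0.3, 0.5]
ma_w = [0.1, 0.4, 0.9]
nd_w = [0.0, 0.2, 0.6]
before = fuse_before([entropy_w, ma_w, nd_w])
after = fuse_after([entropy_w, ma_w, nd_w])
print(len(before), len(after))  # 6 18
```

In this sketch ALL(after) keeps a separate forward and backward hidden sequence for every descriptor, so its fused vector is three times longer than the ALL(before) vector for three descriptors.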

Table 2 presents the results of feature-level fusion from features of facial movement using BLSTM-RNN with softmax. The most accurate result is 88.54, which is obtained by feeding each low-level feature into a BLSTM-RNN, concatenating the outputs of the first and second layers, and then concatenating all high-level features.

Table 2 Result of feature-level fusion from features of facial movement using BLSTM with softmax.
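As a minimal sketch of the ACC measure described in Sec. 4.1, the following Python assumes that ACC is the mean over the temporal phase classes of the per-class precision, with precision computed as correct / (correct + wrong) among the frames assigned to a class. The function name `mean_class_precision`, the treatment of never-predicted classes as precision 0, and the frame labels are illustrative assumptions, not data from the FABO experiments.

```python
def mean_class_precision(true_labels, predicted_labels, classes):
    """Average the per-class precision TP_i / (TP_i + FP_i) over all classes.

    Assumption: the "wrong classifications" of class i are frames predicted
    as class i whose ground truth is a different class. A class that is
    never predicted contributes a precision of 0.
    """
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label sequences must have the same length")
    precisions = []
    for phase in classes:
        correct = sum(1 for t, p in zip(true_labels, predicted_labels)
                      if p == phase and t == phase)
        wrong = sum(1 for t, p in zip(true_labels, predicted_labels)
                    if p == phase and t != phase)
        total = correct + wrong
        precisions.append(correct / total if total else 0.0)
    return sum(precisions) / len(classes)


PHASES = ["neutral", "onset", "apex", "offset"]
# Hypothetical frame-level ground truth and predictions for one short clip.
truth = ["neutral", "onset", "onset", "apex", "apex", "offset", "neutral", "neutral"]
preds = ["neutral", "onset", "apex", "apex", "apex", "offset", "offset", "neutral"]
acc = mean_class_precision(truth, preds, PHASES)
print(f"ACC = {100 * acc:.2f}%")
```

Averaging per class rather than per frame keeps a dominant phase such as neutral from masking errors in the shorter onset and offset phases.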

4.2.2. Results of some classifiers on low-level features and high-level features

In this part, we compare different classifiers for temporal segmentation. Table 3(a) contains the classification results of some classifiers [e.g., softmax, SVM, random forest (RF)] for body gestures. In this table, ALL(low level) denotes concatenating all low-level feature vectors, and ALL(high level) denotes concatenating all high-level features. The results in this table show that (1) the best result is 95.30, which is obtained with the SVM, and (2) the results on high-level features are more accurate than those on low-level features, which demonstrates the validity of the high-level features.

Table 3 Classification results of some classifiers for body gesture and facial movement.

Table 3(b) shows the classification results of some classifiers for facial movement. The results in this table show that (1) the best result is 89.52, which is obtained with the SVM, and (2) the results on high-level features are more accurate than those on low-level features, which again demonstrates the validity of the high-level features. From Table 3, we can see that SVM performs best, so we apply SVM as our final classifier.

4.2.3. Performance comparison

In this part, we compare the results of feature- and decision-level fusion for facial movement and body gesture in Table 4, and we compare our approach with relevant experiments on the FABO database in Table 5.

Table 4 Result of feature- and decision-level fusion for facial movement and body gesture.

Table 5 Performance comparison between the proposed and state-of-the-art approaches [10,16].

Table 4 shows the result of feature- and decision-level fusion for facial movement and body gesture. These results indicate that feature-level fusion is more accurate than decision-level fusion. Thus, we employ feature-level fusion as our final strategy.

The performance of the proposed approach and of the state-of-the-art approaches reported in Refs. 10 and 16 is compared in Table 5. Gunes and Piccardi [10] proposed a method to automatically detect the temporal segments of facial movement and body gesture, which includes both frame- and sequence-based strategies. An HMM was applied as the sequence-based classifier, and several algorithms provided in the Weka tool, including SVM, AdaBoost, and C4.5, were used as frame-based classifiers. They obtained an ACC of 57.27% for face and 80.66% for body. Chen et al. [16] designed two features to describe face and gesture information and then used an SVM to segment the expression phases. They used only body video to detect the temporal segments of the expression; they did not detect the temporal segments of facial movement and body gesture separately. They obtained an ACC of 83.10% for expression. The results of the proposed approach are clearly more accurate than those of these state-of-the-art methods.

5. Conclusions

This letter presents a temporal segment detection framework that uses BLSTM-RNN to learn high-level temporal–spatial features. The framework is evaluated in detail using data from the FABO database. A comparison with other state-of-the-art methods shows that our method outperforms the other approaches in temporal segment detection. In the future, we plan to focus on affect recognition from face and body display based on temporal selection.

Acknowledgments

This work was supported by the National Natural Science Foundation of China (Grant No. 61501035), the Fundamental Research Funds for the Central Universities of China (Grant No. 2014KJJCA15), and the National Education Science Twelfth Five-Year Plan Key Issues of the Ministry of Education (Grant No. DCA140229).

References

1. P. Ekman, “About brows: emotional and conversational signals,” Human Ethology: Claims and Limits of a New Discipline: Contributions to the Colloquium, 169–248, Cambridge University Press, New York (1979).

2. B. Jiang et al., “A dynamic appearance descriptor approach to facial actions temporal modeling,” IEEE Trans. Cybern. 44(2), 161–174 (2014). http://dx.doi.org/10.1109/TCYB.2013.2249063

3. H. Dibeklioğlu, A. A. Salah and T. Gevers, “Recognition of genuine smiles,” IEEE Trans. Multimedia 17(3), 279–294 (2015). http://dx.doi.org/10.1109/TMM.2015.2394777

4. N. Ambady and R. Rosenthal, “Thin slices of expressive behavior as predictors of interpersonal consequences: a meta-analysis,” Psychol. Bull. 111(2), 256–274 (1992). http://dx.doi.org/10.1037/0033-2909.111.2.256

5. T. Balomenos et al., “Emotion analysis in man–machine interaction systems,” in Proc. Workshop Multimodal Interaction Related Machine Learning Algorithms, 318–328 (2004).

6. A. Kapoor and R. W. Picard, “Multimodal affect recognition in learning environments,” in Proc. ACM Int. Conf. Multimedia, 677–682 (2005).

7. C. L. Lisetti and F. Nasoz, “MAUI: a multimodal affective user interface,” in Proc. ACM Int. Conf. Multimedia, 161–170 (2002).

8. M. Kächele et al., “Fusion of audio-visual features using hierarchical classifier systems for the recognition of affective states and the state of depression,” Int. Conf. on Pattern Recognit. Appl. and Methods 1(1), 671–678 (2014).

9. M. Valstar et al., “AVEC 2016: Depression, mood, and emotion recognition workshop and challenge,” in Proc. AVEC Workshop (2016).

10. H. Gunes and M. Piccardi, “Automatic temporal segment detection and affect recognition from face and body display,” IEEE Trans. Syst. Man Cybern. B 39(1), 64–84 (2009). http://dx.doi.org/10.1109/TSMCB.2008.927269

11. S. Piana et al., “Adaptive body gesture representation for automatic emotion recognition,” ACM Trans. Interact. Intell. Syst. 6(1), 6 (2016).

12. C. Cortes and V. Vapnik, “Support-vector networks,” Mach. Learn. 20(3), 273–297 (1995).

13. L. Rabiner and B. Juang, “An introduction to hidden Markov models,” IEEE ASSP Mag. 3(1), 4–16 (1986). http://dx.doi.org/10.1109/MASSP.1986.1165342

14. M. Pantic and I. Patras, “Dynamics of facial expression: recognition of facial actions and their temporal segments from face profile image sequences,” IEEE Trans. Syst. Man Cybern. B 36(2), 433–449 (2006). http://dx.doi.org/10.1109/TSMCB.2005.859075

15. M. Pantic and I. Patras, “Detecting facial actions and their temporal segments in nearly frontal-view face image sequences,” in Proc. IEEE Int. Conf. on Systems, Man and Cybernetics, 3358–3363 (2005). http://dx.doi.org/10.1109/ICSMC.2005.1571665

16. S. Chen et al., “Segment and recognize expression phase by fusion of motion area and neutral divergence features,” in Proc. IEEE Int. Conf. on Automatic Face & Gesture Recognition and Workshops (FG’11), 330–335 (2011). http://dx.doi.org/10.1109/FG.2011.5771419

17. S. Hochreiter and J. Schmidhuber, “Long short-term memory,” Neural Comput. 9(8), 1735–1780 (1997). http://dx.doi.org/10.1162/neco.1997.9.8.1735

18. H. Gunes and M. Piccardi, “A bimodal face and body gesture database for automatic analysis of human nonverbal affective behavior,” in Proc. Int. Conf. on Pattern Recognition (2006).

19. N. Ahmed, T. Natarajan and K. R. Rao, “Discrete cosine transform,” IEEE Trans. Comput. C-23(1), 90–93 (1974). http://dx.doi.org/10.1109/T-C.1974.223784

20. T. Ojala and M. Pietikäinen, “Multiresolution gray-scale and rotation invariant texture classification with local binary patterns,” IEEE Trans. Pattern Anal. Mach. Intell. 24(7), 971–987 (2002). http://dx.doi.org/10.1109/TPAMI.2002.1017623

21. P. F. Felzenszwalb et al., “Object detection with discriminatively trained part-based models,” IEEE Trans. Pattern Anal. Mach. Intell. 32(9), 1627–1645 (2010).

22. S. Kirkpatrick, C. D. Gelatt Jr. and M. P. Vecchi, “Optimization by simulated annealing,” Science 220(4598), 671–680 (1983). http://dx.doi.org/10.1126/science.220.4598.671

23. K. Sikka, “Multiple kernel learning for emotion recognition in the wild,” in Proc. of the 15th ACM on Int. Conf. on Multimodal Interaction, 517–524 (2013).

24. A. Dhall et al., “Emotion recognition in the wild challenge 2014: baseline, data and protocol,” in Proc. of the 16th Int. Conf. on Multimodal Interaction, 461–466 (2014).

25. X. Zhu and D. Ramanan, “Face detection, pose estimation, and landmark localization in the wild,” in Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR 2012), 2879–2886 (2012). http://dx.doi.org/10.1109/CVPR.2012.6248014

26. X. Xiong and F. De la Torre, “Supervised descent method and its applications to face alignment,” in Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 532–539 (2013). http://dx.doi.org/10.1109/CVPR.2013.75

27. J. W. Davis, “Hierarchical motion history images for recognizing human motion,” in Proc. IEEE Workshop on Detection and Recognition of Events in Video, 39–46 (2001). http://dx.doi.org/10.1109/EVENT.2001.938864

28. F. Bastien et al., “Theano: new features and speed improvements,” in Proc. NIPS Workshop on Deep Learning and Unsupervised Feature Learning (2012).

29. E. Osuna, R. Freund and F. Girosi, “Training support vector machines: an application to face detection,” in Proc. IEEE Computer Society Conf. on Computer Vision and Pattern Recognition, 130–136 (1997). http://dx.doi.org/10.1109/CVPR.1997.609310
