1. Introduction

The human face conveys much information, which people have a remarkable ability to extract, identify, and interpret. Age and gender are known to influence the structure and appearance of the face, and human observers can reliably infer both. Recently, there has been an increase in the development of automatic facial analysis techniques with a view to developing machine-based systems that mimic these abilities of the human visual system. As demographic attributes of the human face, age and gender play important roles in real-life applications that include biometrics, demographic studies, targeted advertisements, human–computer interaction systems, and access control. With much progress in automatic face detection and recognition, much research is now focused on automatic demographic identification. Interestingly, research has shown that age estimation and classification are affected by gender differences1 as well as actual age.2 Indeed, both facial age and gender classifications have been studied together as related problems.3–5 Similarly, the two problems have been tackled simultaneously in other fields such as automatic speech recognition.6–8 Like other branches of facial analysis, automatic age and gender classification are hindered by a host of factors including illumination variation, facial expressions, and pose variation, to mention but a few. Several approaches have been documented in the literature to circumvent these problems.2,9

Research on facial aging can be categorized into age estimation, age progression, and age invariant face recognition (AIFR).10 Age estimation refers to the automatic labeling of age groups or the specific ages of individuals using information obtained from their faces. Age progression reconstructs the facial appearance with natural aging effects, and AIFR focuses on the ability to identify or verify people’s faces automatically, despite the effects of aging. In this work, we focus on age estimation.
Gender classification automatically assigns one of the two sex labels (male/female) to a facial image. Studies have shown that humans are able to differentiate between adult male and female faces with up to 95% accuracy.11 However, the accuracy rate reduces to just above chance when considering child faces.12

An initial and key step in age and gender classification is feature extraction; this is the process of parameterizing the face with a view to defining an efficient descriptor. Several feature extraction methods have been used by researchers including, but not limited to, anthropometric features, local binary pattern (LBP),3 locality preserving projections (LPP),13 and neural network architectures.14 However, the active appearance model (AAM),15 which takes into account both facial shape and texture, remains the most popular feature extraction technique.16 It was first applied to the problem of age synthesis and estimation by Lanitis et al.,17 and since then it has been widely used in facial aging.9,10 Additionally, AAM features have been used in gender classification research,18 although LBP remains the most widely used feature descriptor for gender estimation. One of the key benefits of the AAM is its ability to reduce the facial shape and texture to a small number of parameters, making later computational analysis tractable. This process is driven by principal component analysis (PCA), a dimensionality reduction technique, which is also used to combine the texture and shape vectors. PCA, however, captures only the characteristics of the face data (predictor variables). It gives no weight to how each face feature may be related to the class label (age or gender). We can therefore say that the AAM works in an unsupervised manner. In estimation problems, however, there is a need to capture the facial information that is best related to the individual class labels.
In this work, our contributions include improving on the conventional AAM by using partial least-squares (PLS) regression in place of PCA. PLS is a dimensionality reduction technique that maximizes the covariance between the predictor and the response variable, thereby generating latent scores having both reduced dimension and superior predictive power. We term the model the supervised appearance model (sAM). The feature extraction model is then applied to the problems of age estimation and gender classification. Finally, we evaluate the performance of the classifications using the FGNET-AD benchmark database (DB).

2. Previous and Related Work

2.1. Age Estimation

Over the last 15 years, several pieces of research have been published on facial age estimation. The algorithms usually take one of two approaches: age group or age-specific estimation. The former classifies a person as either child or adult, while the latter is more precise as it attempts to estimate the exact age of a person. Each of these approaches can be further decomposed into two key steps: feature extraction and pattern learning/classification.

2.1.1. Feature extraction

Two feature extraction techniques have been used in the literature: local and holistic. The local approach, also known as the part-based or analytic approach, concentrates on salient parts of the face, such as the facial anthropometry and wrinkles. Using local features, the earliest work on age estimation can be traced back to Kwon and Lobo.19 Two-dimensional (2-D) images were classified into three age groups: babies, young adults, and senior adults. They represented the face as ratios of distances between feature points, and used a snakelet transform to represent wrinkles. The ratios were used to discriminate infants from adults, and the snakelets to discriminate young from senior adults.
Several other approaches have extended this basic idea, using Sobel edge detection with region tagging,20 Gabor filters and LBP,21 and Robinson compass masks22 to define wrinkle and texture features. More detailed craniofacial growth models have also been developed to define the ratios between facial features23 coupled with the adaptive retinal sampling method.24 A drawback of local features is that they are not suited for specific age estimation, because geometric features describe only shape changes that are predominant in childhood and local textures are limited to wrinkles, which manifest in adulthood.

Holistic methods, also known as global methods, consider the entire face when extracting features. Subspace learning techniques have been used extensively in the literature; these include PCA, neighborhood preserving projections, LPP, orthogonal LPP,25,26 locality sensitive discriminant analysis (LSDA), and marginal Fisher analysis (MFA).1 The AAM,15 a statistical feature extraction method that captures both shape and texture variation, has been the most widely used technique.10 Lanitis et al.17 were the first to perform specific age estimation using AAMs. Recently, biologically inspired features (BIF)27 have been used by several researchers14,28 with promising results.10 It is worth noting that researchers have also used a hybrid of local and global features, thereby achieving improved results.21

2.1.2. Age learning

Age learning has been approached in two main ways: either as a regression problem, thereby considering the ordinal relationship between ages, or as a multiclass classification problem. Following the latter approach, conventional classification algorithms, such as support vector machines (SVM)14 and relevance vector machines,29 have been employed. Estimation via regression was first presented by Lanitis et al.17 using a quadratic function (QF).
Lanitis et al.30 compared the QF to three traditional classifiers: shortest distance classifiers, multilayer perceptron (MLP), and the Kohonen self-organizing maps. They reported that MLP and QF had the best performance. Geng et al.31 described aging pattern subspace (AGES), a method that learns the aging pattern of individuals and uses AAM for feature extraction. Multiple linear regression was proposed by Fu et al.25 Using Gaussian mixture models, Yan et al.32 proposed patch kernel regression. For a comparison of some recent regression algorithms, the reader is referred to the work of Fernández et al.33

2.2. Gender Classification

Gender classification is also approached in two major steps: feature extraction and classification. Feature extraction techniques reported in the literature can be categorized into geometric and appearance based. Geometry-based models use measurements extracted from facial landmarks to describe the face. In one of the earliest works on gender classification, Ferrario et al.34 used 22 fiducial points to represent the length and width of the face. Burton et al.35 later deployed 73 fiducial points and used discriminant analysis to classify the human faces. In a second analysis, the authors used 30 ratios and 30 angles. Fellous36 extended the works of Ferrario et al.34 and Burton et al.35 Out of 40 fiducial points, 22 distances were extracted; these dimensions were further reduced to 5 using discriminant analysis. Tested on a small DB of 52 faces, the algorithm was reported to have achieved a 95% gender recognition rate. In summary, geometric models maintain only the geometric relationships between facial features, thereby discarding information about facial texture. These models are also sensitive to variations in imaging geometry such as pose and alignment.

Appearance-based methods extract pixel intensities and use them to represent the face.
Some earlier researchers37 preprocessed the image and fed the pixel intensities into classifiers. The preprocessing step mainly involves alignment, illumination normalization, and image resizing. Other researchers performed subspace transformations to either reduce dimensions or explore the underlying structure of the raw data.2 Other appearance-based feature extraction methods include the AAM, scale-invariant features, Gabor wavelets, and LBP.2 The classification step is typically achieved using binary classifiers. SVMs have been the most widely used; other classifiers that have been applied include decision trees, neural networks, boosting, bagging, and other ensembles. For more detailed information on gender classification, the reader is referred to the review by Ng et al.2

To summarize the literature regarding age estimation and gender classification, several feature extraction methods have been utilized and adapted by researchers. While the majority of age estimation and gender classification techniques have been developed for grayscale images, techniques have also been developed for handling color images. When dealing with color images, early researchers treated the three color channels as independent grayscale images, concatenating the three channels into a single long vector.38 Under this simple representation, the spatial relationships that exist between the color pixels are destroyed, and the dimension of the image becomes three times that of the classical grayscale model. Furthermore, research has shown that there is high interchannel correlation among the RGB channels,39 and therefore simple concatenation results in redundancy. As such, several efficient techniques of incorporating color channels have been suggested.
The i1i2i3 color transform40 has been used in the past to decorrelate the RGB channels using the Karhunen–Loève transform.39,41 Recently, quaternion algebra, a powerful mathematical tool, has been applied to the problem.42,43 This has proven to be a good feature extraction method due to its ability to preserve the spatial relationships among the R, G, and B channels. Additionally, it retains the holistic properties of PCA. Quaternion algebra has also been applied to complex-type moments for color images43 and has been shown to be invariant to image rotation, scale, and translation transformations. However, the method still works in an unsupervised manner, and hence does not take into consideration the class labels of the response variables.

Recently, deep learning convolutional neural networks (DLNN), a class of machine learning techniques that perform both supervised and unsupervised automatic feature extraction, as well as transformation for pattern analysis and classification,44 have gained wide popularity among researchers and have been applied directly to the problem of age estimation and gender classification.5,45 In general, the methods perform well due to their ability to capture intricate structures in large datasets. Moreover, DLNN eliminates the trouble of hand-engineered feature extraction.46 Table 1 summarizes the advantages and disadvantages of commonly used feature extraction methods.

Table 1. Advantages and disadvantages of existing methods.

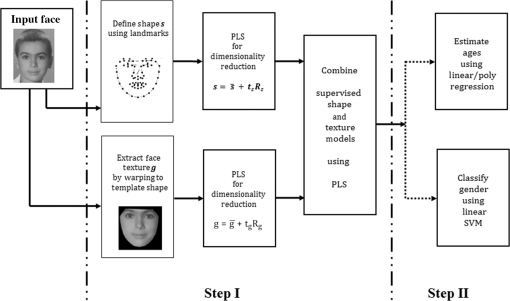

3. Related Algorithm to the Proposed Framework

3.1. Partial Least-Squares for Dimension Reduction

PLS regression, introduced by Wold in Ref. 56, is a statistical method that creates latent features via a linear combination of the predictor ($X$) and response ($Y$) variables. It generalizes and combines features from multiple regression and PCA.57 Hence, PLS has the ability to do both dimensionality reduction and regression simultaneously. The technique is very useful when there is a need to predict a dependent variable from a large set of predictors. Although similar to PCA, it is much more powerful in regression applications, because PCA finds the direction of highest variance only in $X$, so the principal components (PCs) best describe $X$. However, nothing guarantees that these PCs, which explain $X$ optimally, will be appropriate predictors of $Y$. On the other hand, PLS searches for components (latent vectors) that capture directions of highest variance in $X$ as well as the direction that best relates $X$ and $Y$ (i.e., the covariance between $X$ and $Y$). Hence it performs a simultaneous decomposition of $X$ and $Y$. In other words, PCA performs dimensionality reduction in an unsupervised manner, while PLS does so in a supervised manner.

Let $X$ denote an $n \times N$ matrix of predictor variables, where $n$ is the number of data samples and $N$ the dimension (number of features) of each sample, and let $Y$ be an $n \times M$ matrix of response variables. Here, $M$ refers to the number of features of the response variable; for most classification problems, $M = 1$. PLS decomposes the two centered matrices (having zero mean) into

$$X = T P^{T} + E, \qquad Y = U Q^{T} + F, \tag{1}$$

where $T$ and $U$ are $n \times p$ matrices of extracted linear latent vectors, also known as the latent scores, the matrices $P$ and $Q$ are loadings having $N \times p$ and $M \times p$ dimensions, respectively, and the $n \times N$ matrix $E$ and the $n \times M$ matrix $F$ are the matrices of residuals.
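To make the contrast between the unsupervised and supervised directions concrete, the following minimal sketch compares the first PCA direction with the first PLS weight vector on a toy centered dataset. The data and the helper names `first_pca_direction` and `first_pls_direction` are illustrative assumptions, not part of the proposed framework; for a single response, the unit vector maximizing the covariance between the projected predictors and the response is proportional to $X^{T} y$.

```python
import numpy as np

# Toy centered data (hypothetical): column 0 has large variance but is
# unrelated to y; column 1 has small variance and equals y exactly.
X = np.array([[10.0, 1.0],
              [-10.0, 1.0],
              [10.0, -1.0],
              [-10.0, -1.0]])
y = np.array([1.0, 1.0, -1.0, -1.0])


def first_pca_direction(X):
    """Unit vector of highest variance in X (the response is ignored)."""
    eigvals, eigvecs = np.linalg.eigh(X.T @ X)
    return eigvecs[:, np.argmax(eigvals)]


def first_pls_direction(X, y):
    """Unit vector w maximizing the covariance between X @ w and y."""
    w = X.T @ y
    return w / np.linalg.norm(w)


pc1 = first_pca_direction(X)      # aligned with the high-variance column
w1 = first_pls_direction(X, y)    # aligned with the column that predicts y
```

On this toy example, PCA selects the high-variance but uninformative feature, whereas the PLS weight vector selects the feature that actually predicts the response.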
The scores can be computed directly from the mean-centered feature set

$$T = X W = (\tilde{X} - \bar{X}) W, \tag{2}$$

where $\tilde{X}$ is the matrix of raw uncentered predictor variables and $\bar{X}$, a matrix representing the mean of $\tilde{X}$, has the same dimension as the zero-mean predictor variable $X$; similarly, the matrices $\tilde{Y}$ and $\bar{Y}$, having the same dimension as $Y$, represent the uncentered and mean response variables, respectively. The matrix of weights $W = [w_1, \ldots, w_p]$ is computed by solving an optimization problem. The estimate of the $i$'th direction vector is formulated as

$$\hat{w}_i = \arg\max_{w} \; w^{T} X^{T} Y Y^{T} X w \quad \text{subject to} \quad w^{T} w = 1, \;\; w^{T} X^{T} X \hat{w}_j = 0 \tag{3}$$

for $j = 1, \ldots, i-1$.

From Eq. (2), it is also possible to reconstruct the original data from the latent scores by inverting the matrix $W$. This operation is straightforward when $W$ is a square matrix; for a nonsquare $W$, however, only an approximate inverse $W^{+}$ can be computed, giving

$$\tilde{X} \approx \bar{X} + T W^{+}. \tag{4}$$

Here, we term $W^{+}$ the projection coefficient.

Several methods for computing PLS have been proposed in the literature. In this work, we use the SIMPLS algorithm proposed by De Jong,58 thereby taking advantage of the method’s speed. Suppose we have a mean-centered training set $X_{\text{train}}$ consisting of $n$ observations, whose class labels are known and denoted by $Y_{\text{train}}$. Given a test set $X_{\text{test}}$, whose class label $Y_{\text{test}}$ has to be predicted, PLS can be used for dimensionality reduction by projecting the test data onto the weight matrix $W$. Hence, the latent scores matrix for the test data is computed as

$$T_{\text{test}} = X_{\text{test}} W. \tag{5}$$

4. Proposed Framework

4.1. Overview

Figure 1 illustrates the framework for age and gender classification. Step I describes the modeling of an sAM, which involves capturing shape and texture variations via PLS regression. The model is fully described in Sec. 4.2. Step II of the framework shows how the extracted facial features are utilized for age estimation or gender classification; this is outlined in Sec. 4.3. In Sec. 4.4, an algorithm summarizing the proposed framework is presented.
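As a rough illustration of the PLS dimensionality reduction described in Sec. 3.1, the sketch below fits a weight matrix on training data and projects unseen test data onto it. It is a sketch under stated assumptions: it uses a NIPALS-style PLS with a single response rather than the SIMPLS algorithm used in this work, and the function names `pls1_fit` and `pls1_transform` are hypothetical.

```python
import numpy as np


def pls1_fit(X_raw, y_raw, n_components):
    """Fit a single-response PLS (NIPALS-style, not SIMPLS).

    Returns the training mean and a matrix R such that the latent
    scores are obtained directly as T = (X_raw - x_mean) @ R.
    """
    x_mean = X_raw.mean(axis=0)
    Xk = X_raw - x_mean            # centered predictors, deflated in the loop
    yk = y_raw - y_raw.mean()      # centered response, deflated in the loop
    W, P = [], []
    for _ in range(n_components):
        w = Xk.T @ yk              # direction of maximal covariance with y
        w = w / np.linalg.norm(w)
        t = Xk @ w                 # latent score for this component
        tt = t @ t
        p = Xk.T @ t / tt          # predictor loading
        q = (yk @ t) / tt          # response loading
        Xk = Xk - np.outer(t, p)   # remove the explained part of X
        yk = yk - q * t            # remove the explained part of y
        W.append(w)
        P.append(p)
    W = np.column_stack(W)
    P = np.column_stack(P)
    # Rotate the weights so that they apply to the undeflated centered data.
    R = W @ np.linalg.inv(P.T @ W)
    return x_mean, R


def pls1_transform(X_raw, x_mean, R):
    """Project (possibly unseen) data onto the learned latent space."""
    return (X_raw - x_mean) @ R
```

In use, `pls1_fit` would be called once on the training faces and their labels, and `pls1_transform` would then be applied to both training and test data with the training mean, so that no test label is ever needed for the projection.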
4.2. Supervised Appearance Model

Like the conventional AAM, the proposed sAM captures both shape and texture variability from the training dataset. This is done by forming a parameterized model using PLS dimensionality reduction to capture the variations as well as combine them in a single model. The shape of each face in the training DB is represented by a set of $L$ 2-D landmarks $(x_j, y_j)$ stacked to form a vector given by

$$s = (x_1, x_2, \ldots, x_L, y_1, y_2, \ldots, y_L). \tag{6}$$

As suggested by Cootes et al.,15 we remove rotational, translational, and scaling variations from the landmark locations by aligning all the shapes using generalized Procrustes analysis.59 Next, a supervised shape model is formed by performing PLS as described in Sec. 3. Here, we use the matrix of shapes $S$ as the predictor variable and the class labels are stored in a vector $y$. Using Eq. (4), each shape can be represented using a linear equation

$$s \approx \bar{s} + t_s W_s^{+}, \tag{7}$$

where $W_s^{+}$ is the approximate inverse of the shape weight matrix. This can be written as

$$s \approx \bar{s} + t_s P_s, \tag{8}$$

where $\bar{s}$ is the mean shape, $t_s$ is a vector of latent scores representing the shapes, and $P_s$ is the projection coefficient of shapes.

To build the supervised texture model, all face images are affine warped to the mean shape $\bar{s}$; this is done so that the control points of the training images match those of a fixed shape. Illumination variations are then normalized by applying a scaling and an offset to the warped images.15 Finally, each matrix of image pixel intensities (textures) is converted to a vector $g$. By applying PLS to the matrix of textures $G$, a linear model of textures is obtained

$$g \approx \bar{g} + t_g P_g, \tag{9}$$

where $\bar{g}$ is the mean gray-level texture, $t_g$ is a vector of latent scores representing the texture, and $P_g$ is the projection coefficient of textures.

Hence, both shape and texture can be summarized by the latent vectors $t_s$ and $t_g$. Consequently, a combined model of shape and texture can be formed by concatenating the two vectors

$$c = (t_s, \; t_g). \tag{10}$$

To further eliminate the correlation that may exist between shape and texture, PLS is applied to $c$. Since both $t_s$ and $t_g$ have zero mean, $c$ also has zero mean. Hence, the PLS decomposition can be achieved by directly substituting $c$ into Eq. (4), where $c$ replaces $X$; here, we use a matrix $T_c$ to represent the latent scores for all the faces in the DB.

Thus, the sAM describing each face can be represented by a linear equation

$$c = (t_s, \; t_g) \approx t_c P_c = t_c \, (P_{cs}, \; P_{cg}), \tag{11}$$

where $t_c$ is a vector of latent scores representing both shape and texture of a particular individual and $P_c$ is the projection coefficient of the combined model. It is worth noting that $P_c$ has two components as shown in Eq. (11): a projection coefficient $P_{cs}$ associated with $t_s$, and $P_{cg}$, which is associated with $t_g$.

Similar to the conventional AAM, the linear nature of the supervised model makes it possible to express both shape and texture in terms of $t_c$:

$$s \approx \bar{s} + t_c P_{cs} P_s, \qquad g \approx \bar{g} + t_c P_{cg} P_g. \tag{12}$$

We have now defined an sAM, an extension of the AAM. Since the parameter $t_c$ summarizes both shape and texture information, it gives us a convenient way of representing faces with a view to solving the problems of age and gender classification.

4.3. Age and Gender Classification

The sAM contains both shape and texture components and can be supervised to model age and gender directly, which makes it ideal as a facial model in these applications. In this work, we learn the aging pattern using a regression approach. Hence, an aging function relating faces to ages can be defined using

$$a = f(T_c), \tag{13}$$

where $a$ is a vector of ages of all individuals in the DB, $T_c$ is a matrix of the sAM parameters for each face in the DB, and $n$, the number of rows of $a$ and $T_c$, is the total number of samples.

While several linear and nonlinear regressors have been used in the literature, here we experiment with simple models; hence we choose ordinary least-squares (OLS) and QF regressions. Thus, for each face the estimated age $\hat{a}$ is computed from its corresponding sAM parameter $t_c$ using

$$\hat{a} = \beta_0 + t_c \beta_1, \tag{14}$$

$$\hat{a} = \beta_0 + t_c \beta_1 + t_c^{2} \beta_2, \tag{15}$$

where $\beta_0$ is the intercept, also called an offset, $\beta_1$ and $\beta_2$ are vectors of regression coefficients, $t_c^{2}$ denotes the element-wise square of $t_c$, and Eqs. (14) and (15) correspond to OLS and QF, respectively.

Gender determination is a binary classification problem, where the test data are labeled either male or female. Given a training set $\{(x_i, y_i)\}$ for $i = 1, \ldots, n$, with $x_i \in \mathbb{R}^{d}$ and $y_i \in \{+1, -1\}$, a classifier $f$ is learned such that

$$f(x_i) = y_i. \tag{16}$$

Here, we denote $+1$ as male and $-1$ as female.
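The OLS and QF age regressors of Sec. 4.3 can be sketched as least-squares fits on two different design matrices. This is a minimal sketch under assumptions: the QF is taken to contain an intercept, linear terms, and element-wise squared terms without cross terms, and the names `design_matrix`, `fit_age_regressor`, `predict_age`, and the parameter `n_quadratic` are hypothetical.

```python
import numpy as np


def design_matrix(B, n_quadratic=0):
    """Columns: intercept, linear terms, squares of the first n_quadratic features.

    n_quadratic=0 gives the OLS model; n_quadratic=B.shape[1] gives a
    quadratic function with all squared terms (no cross terms).
    """
    cols = [np.ones((B.shape[0], 1)), B]
    if n_quadratic > 0:
        cols.append(B[:, :n_quadratic] ** 2)
    return np.hstack(cols)


def fit_age_regressor(B, ages, n_quadratic=0):
    """Least-squares estimate of the offset and regression coefficients."""
    coeffs, *_ = np.linalg.lstsq(design_matrix(B, n_quadratic), ages, rcond=None)
    return coeffs


def predict_age(B, coeffs, n_quadratic=0):
    """Estimated ages for the feature rows in B."""
    return design_matrix(B, n_quadratic) @ coeffs
```

Here `B` stands for the matrix of sAM parameters, one row per face. Restricting `n_quadratic` to a subset of the features limits the number of second-order terms, which acts as a simple form of regularization.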
While many classifiers have been proposed in the literature, SVM has been one of the most successful for binary classification.

The goal of SVM is to find an optimal separating hyperplane (OSH) that best separates the two classes. It works by first mapping the training sample $x$ via a function $\phi$ into a higher (possibly infinite) dimensional space $\mathcal{F}$. Then, an OSH is found in $\mathcal{F}$ by solving an optimization problem. However, the mapping from the input space to the feature space is not done explicitly; rather, it is done via the kernel trick, which computes the inner products of the training data. For a detailed explanation of SVM, the reader is referred to Ref. 60. In this work, the kernel function deployed is the linear kernel given by

$$K(x_i, x_j) = x_i^{T} x_j. \tag{17}$$

4.4. Algorithm for the Proposed Framework

The proposed framework entails capturing the facial shape and texture using PLS regression, before combining the two statistical models into a single holistic model. We term this computational abstraction sAM. Furthermore, the framework shows how the sAM parameterized face is used for age and gender classification; this is summarized in Algorithm 1.

Algorithm 1. sAM age and gender classification framework.

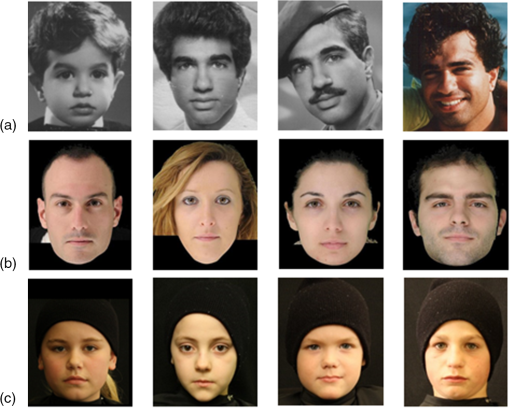

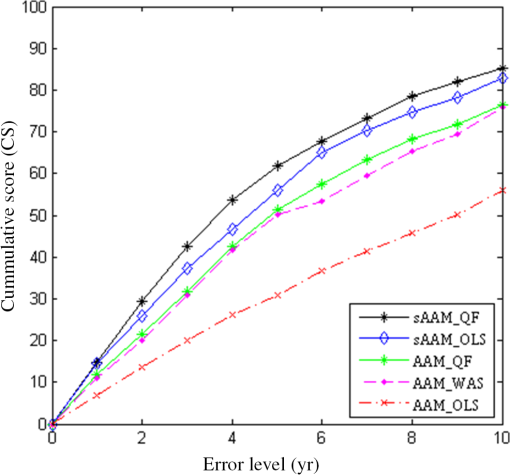

5. Experiments

In this section, the effectiveness of the proposed feature extraction technique is evaluated. sAM is compared to the conventional AAM in the two problems of age estimation and gender classification. Age estimation is evaluated by incorporating the sAM features into two simple traditional regression algorithms: linear and QFs. Furthermore, we perform gender classification by feeding the sAM features into a linear SVM classifier. Here, we have restricted our experiments to simple classifiers to fully explore the efficacy of the feature extraction method.

5.1. Databases Used

Age estimation experiments are performed on the FGNET aging DB (FGNET-AD),61 one of the most widely used aging DBs. Gender classification experiments are first conducted on the FGNET-AD; then, to further show how age variation affects the performance of gender classifiers, we perform two more experiments: one on Politecnico di Torino’s “HQFaces” DB62 and the other on the Dartmouth children’s faces DB.63 In addition to comparing sAM to AAM, the algorithms are also compared to state-of-the-art work.

5.1.1. FGNET-AD

The FGNET aging DB is made of 1002 images of 82 subjects, with ages distributed in the range of 0 to 69. Hence, each subject has multiple images. With more than 700 images within the age range of 0 to 20, the age distribution is not balanced; this makes the FGNET-AD a challenging dataset. Additionally, the images vary from grayscale to color, with individuals from different races displaying varying pose and facial expressions. Other inter- and intraquality variations include illumination, sharpness, and resolution. The gender distribution for FGNET-AD is 48 males and 34 females, having 571 and 431 photographs, respectively.

5.1.2. HQFaces database

HQFaces is a DB of 184 high-quality, controlled images collected at the Politecnico di Torino, Italy. All images share the same resolution and were photographed under the same lighting conditions.
The subjects are Caucasian and predominantly adults, with an age range of 13 to 50 yr; 57% of them are male. For the purpose of our experiments, 143 frontal images were used.

5.1.3. Dartmouth children’s faces database

The Dartmouth children’s faces DB is an image library formed at Dartmouth College, Hanover, New Hampshire. It is made of high-quality images of 80 Caucasian children of both genders, ranging in age from 6 to 16 yr. Additionally, all subjects were photographed under two lighting conditions, at five angles, and displaying eight facial expressions.

A sample of images contained in the above-mentioned DBs is shown in Fig. 2. In this work, images from these sources were cropped to a smaller size; this was done to reduce computational cost.

5.2. Age and Gender Classification Experiments

Face shape for the FGNET dataset is represented by a set of 68 landmarks defined in 2-D space $(x, y)$. On the other two datasets, 79 fiducial points are used to describe the face shape. As stated earlier, for each face shape, the 2-D coordinates are converted into a single vector by stacking the $x$-coordinates over the $y$-coordinates as shown in Eq. (6). Facial texture in the form of image pixels is captured by the approach of Cootes et al.15 First, all color images are converted to grayscale; then all the images are aligned to a mean shape via warping. Thus “shape-free patches” are created using the piecewise affine method,64 a simple nonparametric warping technique that performs well on local distortions. Afterward, illumination normalization is conducted as stated earlier. Finally, each image matrix is converted to a long vector as described in Eq. (9). Using Eqs. (8) and (9), we compute the latent parameters of shape and texture ($t_s$ and $t_g$); each of these two is represented using just eight components. Then, the second PLS is performed on an $n \times 16$ matrix. Considering the FGNET-AD, $n = 1002$. Finally, the sAM parameter $t_c$ is represented by 13 components.
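The feature extraction steps above (stack the landmarks, reduce shape and texture separately, concatenate, and reduce again) can be sketched as follows. This is an illustrative sketch only: it substitutes a NIPALS-style single-response PLS for the SIMPLS algorithm, it returns latent scores for the training data only, and the names `stack_landmarks`, `pls1_scores`, and `sam_parameters` are hypothetical. The default component counts (8, 8, and 13) follow the values quoted in the text.

```python
import numpy as np


def stack_landmarks(points):
    """Turn an (L, 2) array of (x, y) landmarks into (x_1..x_L, y_1..y_L)."""
    points = np.asarray(points, dtype=float)
    return np.concatenate([points[:, 0], points[:, 1]])


def pls1_scores(X_raw, y_raw, n_components):
    """Latent scores of a NIPALS-style PLS with one response (not SIMPLS)."""
    X = X_raw - X_raw.mean(axis=0)
    Xk = X.copy()
    yk = y_raw - y_raw.mean()
    W, P = [], []
    for _ in range(n_components):
        w = Xk.T @ yk                      # maximal-covariance direction
        w = w / np.linalg.norm(w)
        t = Xk @ w
        tt = t @ t
        p = Xk.T @ t / tt
        Xk = Xk - np.outer(t, p)           # deflate predictors
        yk = yk - t * (yk @ t) / tt        # deflate response
        W.append(w)
        P.append(p)
    W = np.column_stack(W)
    P = np.column_stack(P)
    return X @ W @ np.linalg.inv(P.T @ W)  # scores from the centered data


def sam_parameters(shapes, textures, labels, n_part=8, n_combined=13):
    """Two-stage supervised reduction: shape and texture, then combined."""
    t_s = pls1_scores(shapes, labels, n_part)     # supervised shape scores
    t_g = pls1_scores(textures, labels, n_part)   # supervised texture scores
    c = np.hstack([t_s, t_g])                     # n x (2 * n_part) matrix
    return pls1_scores(c, labels, n_combined)     # combined sAM scores
```

With the default settings, the concatenated matrix has 16 columns and each face is finally described by 13 values; `shapes` is assumed to hold one aligned, stacked landmark vector per row and `textures` one normalized shape-free texture vector per row.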
We chose the number of components via cross validation. To achieve age estimation, we implemented two regression algorithms as described earlier. In our experiment, the QF is computed in a sparse manner; as a form of regularization, we limited the number of observed powers. Hence, instead of computing the second-order terms of all 13 components, only the second-order terms of the first seven independent variables were used. For age estimation, the vector representing class labels contained the individual ages of the training data, while in gender classification two binary labels represented the male and female genders.

To evaluate the accuracy of both age estimation and gender classification, we employed the leave-one-person-out (LOPO) cross-validation method. Here, the images of one person are used as the test set, and an estimator/classifier is trained using images of the remaining subjects. So, by the end of 82 folds, each subject in the FGNET-AD will have been used for testing. This approach mimics a real-life scenario, where the classifier is tested on a person who has not been seen before. In addition, the LOPO approach, unlike other cross-validation techniques, ensures consistency of results and ease of comparative evaluation of different algorithms. The performance measures used for age estimation are mean absolute error (MAE) and cumulative score (CS), given by

$$\text{MAE} = \frac{1}{N} \sum_{i=1}^{N} \left| a_i - \hat{a}_i \right|, \qquad \text{CS}(j) = \frac{N_{e \le j}}{N} \times 100\%,$$

where $a_i$ is the ground truth age, $\hat{a}_i$ is the estimated age, $N$ is the number of test images, and $N_{e \le j}$ denotes the number of images on which the system makes an absolute error not higher than $j$ yr.

Gender classification performance is evaluated by detection rate (DR), also known as sensitivity.

First, we conducted an experiment on FGNET-AD, where we compared the results of sAM estimation and classification to those obtained using the conventional AAM and other state-of-the-art feature extraction techniques. A summary of our initial experiments on FGNET-AD is presented in Tables 2, 3, and Fig. 3.
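The evaluation protocol described above can be sketched in a few lines of plain Python. The function names `lopo_splits`, `mean_absolute_error`, and `cumulative_score` are hypothetical, and the subject identifiers are assumed to be available as one entry per image.

```python
def lopo_splits(subject_ids):
    """Yield (train_idx, test_idx) pairs, one fold per subject.

    All images of the held-out subject form the test set; images of
    every other subject form the training set.
    """
    for subject in sorted(set(subject_ids)):
        test = [i for i, s in enumerate(subject_ids) if s == subject]
        train = [i for i, s in enumerate(subject_ids) if s != subject]
        yield train, test


def mean_absolute_error(true_ages, estimated_ages):
    """Average absolute difference between true and estimated ages."""
    errors = [abs(a - e) for a, e in zip(true_ages, estimated_ages)]
    return sum(errors) / len(errors)


def cumulative_score(true_ages, estimated_ages, level):
    """Percentage of images whose absolute error is not higher than level."""
    hits = sum(1 for a, e in zip(true_ages, estimated_ages) if abs(a - e) <= level)
    return 100.0 * hits / len(true_ages)
```

In a full experiment, the estimates produced in each fold would be collected over all folds before computing the MAE and the CS at each error level.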
Table 2. MAE for state-of-the-art age estimation algorithms on FGNET-AD (LOPO).

Table 3. DR for gender classification algorithms on FGNET-AD (LOPO).

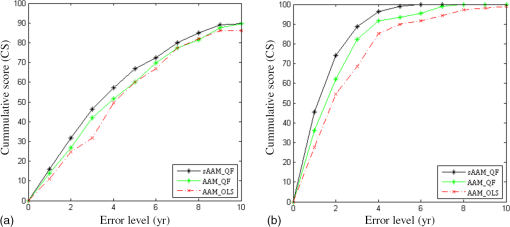

Results show the superiority of the proposed sAM in age estimation and gender classification on a challenging benchmark DB. As shown in Table 2, the sAMs with linear and quadratic fits achieved MAEs of 5.92 and 5.49, respectively, using the LOPO cross-validation technique. Figure 3 shows the CSs of the algorithms at error levels between 0 and 10 yr. It demonstrates that sAM with quadratic fit has the most accurate estimation at all error levels, with over 85% of the test data achieving an estimation error below 10 yr. It is worth noting that the sAM-based methods also have superior dimensionality reduction capability: while the number of AAM parameters used in most of the literature ranges from 50 to 200, using the sAM methods we were able to compress hundreds of appearance components into only eight variables. The gender classification experiment on the FGNET-AD, shown in Table 3, also shows that sAM with linear SVM classification achieved the best result, with a DR of 76.65%. Other implementations of the AAM and LBP attained lower DRs.

To further evaluate the performance of the proposed framework, three additional experiments were conducted. We evaluated the better of our two age estimation implementations, i.e., sAM QF, on the two controlled color image DBs (HQFaces and Dartmouth DB). As can be seen in Fig. 4, the CSs for error levels between 0 and 10 yr show that “sAM QF” is evidently better than the two AAM implementations. It is also not surprising that we achieved lower MAEs than on FGNET-AD. The MAEs of 4.88 and 1.39 (as shown in Tables 4 and 5) on HQFaces and Dartmouth DB, respectively, were primarily due to the quality of the images. This shows that sAM, like other feature extraction techniques, performs better under controlled conditions. The fact that the algorithm achieves the lowest estimation error on the Dartmouth DB suggests that age discrimination is more apparent in children.
Table 4. MAE comparison on HQFaces DB (LOPO).

Table 5. MAE comparison on Dartmouth DB (LOPO).

Next, experiments were conducted to assess the performance of our gender classification algorithm. Initially, we tested it in a holistic manner on the three DBs. As shown in Table 6, we achieved the best DR on the HQFaces DB. Since HQFaces is made of predominantly adult faces, the result indicates that gender discrimination is more evident in adults; consequently, the classifier performs worst on the children-only DB (i.e., the Dartmouth DB). To further analyze this evidence, each image DB was split into seven age groups: 0 to 10, 11 to 20, 21 to 30, 31 to 40, 41 to 50, 51 to 60, and 61 to 70. The results presented in Table 7 show two things: first, the best DRs are achieved in the 21 to 30 age group, and second, the worst recorded result was on FGNET-AD’s 61 to 70 age group. The latter is most likely due to the size of the training data; as shown in Table 7, only seven images were used to train the algorithm in that instance. We therefore presume that sAM, being a data-driven algorithm, requires a sufficient amount of training data to achieve excellent classification results. If we set aside the age groups with insufficient training data, the performance of the gender classification for children’s faces (0 to 10 age group) remains clearly below what was achieved on adult faces, where we had a sufficient number of training images.

Table 6. Gender classification DR on different DBs.

Table 7. Gender classification DR according to age groups.

6. Conclusion

We have proposed an sAM, which improves on the traditional AAM. When used for facial feature extraction, the model describes the face with very few components. For instance, we used just 13 components to effectively represent the face on FGNET-AD, as opposed to AAM, which requires between 50 and 200 parameters. When used for age estimation, we achieved an MAE of 5.49, which is comparable to most state-of-the-art algorithms and better than most algorithms that used AAM for feature extraction. Additionally, when used for gender classification, sAM outperforms most state-of-the-art work. This further demonstrates the predictive power and superior dimensionality reduction ability of the sAM. In the future, we hope to investigate the ability to reconstruct the human face using sAM with a view to conducting automatic facial age synthesis.

References

1. G. Guo et al., “A study on automatic age estimation using a large database,” in 2009 IEEE 12th Int. Conf. on Computer Vision, 1986–1991 (2009). http://dx.doi.org/10.1109/ICCV.2009.5459438

C.-B. Ng, Y.-H. Tay and B.-M. Goi, “A review of facial gender recognition,” Pattern Anal. Appl., 18(4), 739–755 (2015). http://dx.doi.org/10.1007/s10044-015-0499-6

E. Eidinger, R. Enbar and T. Hassner, “Age and gender estimation of unfiltered faces,” IEEE Trans. Inf. Forensics Secur., 9(12), 2170–2179 (2014). http://dx.doi.org/10.1109/TIFS.2014.2359646

G. Guo and G. Mu, “Simultaneous dimensionality reduction and human age estimation via kernel partial least squares regression,” in 2011 IEEE Conf. on Computer Vision and Pattern Recognition, 657–664 (2011). http://dx.doi.org/10.1109/CVPR.2011.5995404

G. Levi and T. Hassner, “Age and gender classification using convolutional neural networks,” in 2015 IEEE Conf. on Computer Vision and Pattern Recognition Workshops (CVPRW ’15), 34–42 (2015). http://dx.doi.org/10.1109/CVPRW.2015.7301352

F. Metze et al., “Comparison of four approaches to age and gender recognition for telephone applications,” in IEEE Int. Conf. on Acoustics, Speech and Signal Processing (ICASSP ’07), IV–1089–IV–1092 (2007). http://dx.doi.org/10.1109/ICASSP.2007.367263

M. Li, K. J. Han and S. Narayanan, “Automatic speaker age and gender recognition using acoustic and prosodic level information fusion,” Comput. Speech Lang., 27(1), 151–167 (2013). http://dx.doi.org/10.1016/j.csl.2012.01.008

F. Burkhardt et al., “A database of age and gender annotated telephone speech,” in Proc. of the Seventh Int. Conf. on Language Resources and Evaluation (LREC ’10) (2010).

Y. Fu, G. Guo and T. Huang, “Age synthesis and estimation via faces: a survey,” IEEE Trans. Pattern Anal. Mach. Intell., 32(11), 1955–1976 (2010). http://dx.doi.org/10.1109/TPAMI.2010.36 ITPIDJ 0162-8828

G. Panis et al., “Overview of research on facial ageing using the FG-NET ageing database,” IET Biom., 5(2), 37–46 (2015). http://dx.doi.org/10.1049/iet-bmt.2014.0053

V. Bruce et al., “Sex discrimination: how do we tell the difference between male and female faces?,” Perception, 22(2), 131–152 (1993). http://dx.doi.org/10.1068/p220131 PCTNBA 0301-0066

H. A. Wild et al., “Recognition and sex categorization of adults’ and children’s faces: examining performance in the absence of sex-stereotyped cues,” J. Exp. Child Psychol., 77(4), 269–291 (2000). http://dx.doi.org/10.1006/jecp.1999.2554 JECPAE 0022-0965

C. Chen et al., “Face age estimation using model selection,” in 2010 IEEE Computer Society Conf. on Computer Vision Pattern Recognition—Workshops, 93–99 (2010). http://dx.doi.org/10.1109/CVPRW.2010.5543820

G. Guo et al., “Human age estimation using bio-inspired features,” in IEEE Conf. on Computer Vision and Pattern Recognition (CVPR ’09), 112–119 (2009). http://dx.doi.org/10.1109/CVPR.2009.5206681

T. F. Cootes, G. J. Edwards and C. J. Taylor, “Active appearance models,” in Computer Vision—(ECCV ’98), 484–498 (1998).

W. Chao, J. Liu and J. Ding, “Facial age estimation based on label-sensitive learning and age-oriented regression,” Pattern Recognit., 46(3), 628–641 (2013). http://dx.doi.org/10.1016/j.patcog.2012.09.011

A. Lanitis, C. Taylor and T. Cootes, “Toward automatic simulation of aging effects on face images,” IEEE Trans. Pattern Anal. Mach. Intell., 24(4), 442–455 (2002). http://dx.doi.org/10.1109/34.993553 ITPIDJ 0162-8828

W. Yang et al., “Gender classification via global-local features fusion,” Lect. Notes Comput. Sci., 7098, 214–220 (2011). http://dx.doi.org/10.1007/978-3-642-25449-9 LNCSD9 0302-9743

Y. H. Kwon and V. Lobo, “Age classification from facial images,” in Proc. IEEE Conf. Computer Vision Pattern Recognition (CVPR ’94), 762–767 (1994). http://dx.doi.org/10.1109/CVPR.1994.323894

W. B. Horng, C. P. Lee and C. W. Chen, “Classification of age groups based on facial features,” Tamkang J. Sci. Eng., 4(3), 183–192 (2001). http://dx.doi.org/10.6180/jase.2001.4.3.05

S. E. Choi et al., “Age estimation using a hierarchical classifier based on global and local facial features,” Pattern Recognit., 44(6), 1262–1281 (2011). http://dx.doi.org/10.1016/j.patcog.2010.12.005

C. R. Babu, E. S. Reddy and B. P. Rao, “Age group classification of facial images using rank based edge texture unit (RETU),” Procedia Comput. Sci., 45, 215–225 (2015). http://dx.doi.org/10.1016/j.procs.2015.03.124

N. Ramanathan and R. Chellappa, “Modeling age progression in young faces,” in 2006 IEEE Computer Society Conf. on Computer Vision Pattern Recognition, 387–394 (2006). http://dx.doi.org/10.1109/CVPR.2006.187

H. Takimoto et al., “Robust gender and age estimation under varying facial pose,” Electron. Commun. Jpn., 91(7), 32–40 (2008). http://dx.doi.org/10.1002/ecj.v91:7

Y. Fu, Y. Xu and T. S. Huang, “Estimating human age by manifold analysis of face pictures and regression on aging features,” in 2007 IEEE Int. Conf. on Multimedia and Expo, 1383–1386 (2007). http://dx.doi.org/10.1109/ICME.2007.4284917

G. Guo et al., “Image-based human age estimation by manifold learning and locally adjusted robust regression,” IEEE Trans. Image Process., 17(7), 1178–1188 (2008). http://dx.doi.org/10.1109/TIP.2008.924280 IIPRE4 1057-7149

M. Riesenhuber and T. Poggio, “Hierarchical models of object recognition in cortex,” Nat. Neurosci., 2(11), 1019–1025 (1999). http://dx.doi.org/10.1038/14819 NANEFN 1097-6256

M. Y. El Dib and M. El-Saban, “Human age estimation using enhanced bio-inspired features (EBIF),” in 2010 IEEE Int. Conf. Image Processing, 1589–1592 (2010). http://dx.doi.org/10.1109/ICIP.2010.5651440

T. Wu, P. Turaga and R. Chellappa, “Age estimation and face verification across aging,” IEEE Trans. Inf. Forensics Secur., 7(6), 1780–1788 (2012). http://dx.doi.org/10.1109/TIFS.2012.2213812

A. Lanitis, C. Draganova and C. Christodoulou, “Comparing different classifiers for automatic age estimation,” IEEE Trans. Syst. Man Cybern. B, 34(1), 621–628 (2004). http://dx.doi.org/10.1109/TSMCB.2003.817091

X. Geng, Z.-H. Zhou and K. Smith-Miles, “Automatic age estimation based on facial aging patterns,” IEEE Trans. Pattern Anal. Mach. Intell., 29(12), 2234–2240 (2007). http://dx.doi.org/10.1109/TPAMI.2007.70733

S. Yan et al., “Regression from patch-kernel,” in IEEE Conf. on Computer Vision and Pattern Recognition (CVPR ’08), 1–8 (2008). http://dx.doi.org/10.1109/CVPR.2008.4587405

C. Fernández, I. Huerta and A. Prati, “A comparative evaluation of regression learning algorithms for facial age estimation,” in FFER Conjunction with ICPR (2014).

V. F. Ferrario et al., “Sexual dimorphism in the human face assessed by Euclidean distance matrix analysis,” J. Anat., 183(Pt 3), 593 (1993). JOANAY 0021-8782

A. M. Burton, V. Bruce and N. Dench, “What’s the difference between men and women? Evidence from facial measurement,” Perception, 22(2), 153–176 (1993). http://dx.doi.org/10.1068/p220153 PCTNBA 0301-0066

J.-M. Fellous, “Gender discrimination and prediction on the basis of facial metric information,” Vision Res., 37(14), 1961–1973 (1997). http://dx.doi.org/10.1016/S0042-6989(97)00010-2 VISRAM 0042-6989

B. Moghaddam and M.-H. Yang, “Learning gender with support faces,” IEEE Trans. Pattern Anal. Mach. Intell., 24(5), 707–711 (2002). http://dx.doi.org/10.1109/34.1000244

G. Edwards, Learning to Identify Faces in Images and Video Sequences (1999).

M. C. Ionita, P. Corcoran and V. Buzuloiu, “On colour texture normalization for active appearance models,” IEEE Trans. Image Process., 18(6), 1372–1378 (2009). http://dx.doi.org/10.1109/TIP.2009.2017163 IIPRE4 1057-7149

Y.-I. Ohta, T. Kanade and T. Sakai, “Colour information for region segmentation,” Comput. Graphics Image Process., 13(1), 222–241 (1980). http://dx.doi.org/10.1016/0146-664X(80)90047-7

A. M. Bukar, H. Ugail and D. Connah, “Individualised model of facial age synthesis based on constrained regression,” in 2015 Int. Conf. on Image Processing Theory, Tools and Applications (IPTA ’15), 285–290 (2015). http://dx.doi.org/10.1109/IPTA.2015.7367147

Y. Sun, S. Chen and B. Yin, “Colour face recognition based on quaternion matrix representation,” Pattern Recognit. Lett., 32(4), 597–605 (2011). http://dx.doi.org/10.1016/j.patrec.2010.11.004 PRLEDG 0167-8655

B. Chen et al., “Colour image analysis by quaternion-type moments,” J. Math. Imaging Vision, 51(1), 124–144 (2015). http://dx.doi.org/10.1007/s10851-014-0511-6

L. Deng and D. Yu, “Deep learning: methods and applications,” Found. Trends® Signal Process., 7(3–4), 197–387 (2013). http://dx.doi.org/10.1561/2000000039

C. Yan et al., “Age estimation based on convolutional neural network,” in Advances in Multimedia Information Processing—PCM 2014, 211–220, Springer-Verlag, New York (2014).

Y. LeCun, Y. Bengio and G. Hinton, “Deep learning,” Nature, 521(7553), 436–444 (2015). http://dx.doi.org/10.1038/nature14539

D. Huang et al., “Local binary patterns and its application to facial image analysis: a survey,” IEEE Trans. Syst. Man Cybern. C, 41(6), 765–781 (2011). http://dx.doi.org/10.1109/TSMCC.2011.2118750

A. F. Abate et al., “2D and 3D face recognition: a survey,” Pattern Recognit. Lett., 28(14), 1885–1906 (2007). http://dx.doi.org/10.1016/j.patrec.2006.12.018 PRLEDG 0167-8655

L. Shen and L. Bai, “A review on Gabor wavelets for face recognition,” Pattern Anal. Appl., 9(2–3), 273–292 (2006). http://dx.doi.org/10.1007/s10044-006-0033-y

X. Wen et al., “A rapid learning algorithm for vehicle classification,” Inf. Sci., 295, 395–406 (2015). http://dx.doi.org/10.1016/j.ins.2014.10.040 ISIJBC 0020-0255

P. Viola and M. Jones, “Rapid object detection using a boosted cascade of simple features,” in Proc. 2001 IEEE Computer Society Conf. on Computer Vision Pattern Recognition (CVPR ’01), I–511–I–518 (2001). http://dx.doi.org/10.1109/CVPR.2001.990517

B. Wu et al., “Fast rotation invariant multi-view face detection based on real adaboost,” in Proc. Sixth IEEE Int. Conf. on Automatic Face and Gesture Recognition, 79–84 (2004). http://dx.doi.org/10.1109/AFGR.2004.1301512

G. Tzimiropoulos, S. Zafeiriou and M. Pantic, “Subspace learning from image gradient orientations,” IEEE Trans. Pattern Anal. Mach. Intell., 34(12), 2454–2466 (2012). http://dx.doi.org/10.1109/TPAMI.2012.40

X. Gao et al., “A review of active appearance models,” IEEE Trans. Syst. Man Cybern. C, 40(2), 145–158 (2010). http://dx.doi.org/10.1109/TSMCC.2009.2035631

J. Ngiam et al., “On optimization methods for deep learning,” in Int. Conf. on Machine Learning, 265–272 (2011).

H. Wold, “Estimation of principal components and related models by iterative least squares,” Multivariate Analysis, 391–420 (1966).

H. Abdi, “Partial least squares regression and projection on latent structure regression (PLS Regression),” Wiley Interdiscip. Rev. Comput. Stat., 2(1), 97–106 (2010). http://dx.doi.org/10.1002/wics.51

S. De Jong, “SIMPLS: an alternative approach to partial least squares regression,” Chemom. Intell. Lab. Syst., 18(3), 251–263 (1993). http://dx.doi.org/10.1016/0169-7439(93)85002-X

J. C. Gower, “Generalized procrustes analysis,” Psychometrika, 40(1), 33–51 (1975). http://dx.doi.org/10.1007/BF02291478 0033-3123

A. J. Smola and B. Schölkopf, “A tutorial on support vector regression,” Stat. Comput., 14(3), 199–222 (2004). http://dx.doi.org/10.1023/B:STCO.0000035301.49549.88 STACE3 0960-3174

FG-NET (Face and Gesture Recognition Network), “The Fg-Net aging database,” (2014). http://wwwprima.inrialpes.fr/FGnet/ (September 2014).

T. F. Vieira et al., “Detecting siblings in image pairs,” Visual Comput., 30(12), 1333–1345 (2014). http://dx.doi.org/10.1007/s00371-013-0884-3 VICOE5 0178-2789

K. A. Dalrymple, J. Gomez and B. Duchaine, “The Dartmouth database of children’s faces: acquisition and validation of a new face stimulus set,” PLoS One, 8(11), e79131 (2013). http://dx.doi.org/10.1371/journal.pone.0079131 POLNCL 1932-6203

C. A. Glasbey and K. V. Mardia, “A review of image-warping methods,” J. Appl. Stat., 25(2), 155–171 (1998). http://dx.doi.org/10.1080/02664769823151

S. Yan et al., “Learning auto-structured regressor from uncertain nonnegative labels,” in 2007 IEEE 11th Int. Conf. on Computer Vision, 1–8 (2007). http://dx.doi.org/10.1109/ICCV.2007.4409050

X. Geng, C. Yin and Z.-H. Zhou, “Facial age estimation by learning from label distributions,” IEEE Trans. Pattern Anal. Mach. Intell., 35(10), 2401–2412 (2013). http://dx.doi.org/10.1109/TPAMI.2013.51

Y. Wang et al., “Gender classification from infants to seniors,” in 2010 Fourth IEEE Int. Conf. on Biometrics: Theory Applications and Systems (BTAS ’10), 1–6 (2010). http://dx.doi.org/10.1109/BTAS.2010.5634518

Biography

Ali Maina Bukar received his MSc degree from the School of Computing Science and Digital Media, Robert Gordon University, Aberdeen, UK, in 2010. He is currently working toward his PhD at the School of Media Design and Technology, University of Bradford, UK. His research interests include pattern recognition, machine learning, computer vision, and signal processing.

Hassan Ugail received a first class BSc Honors degree in mathematics from King’s College London and a PhD in the field of geometric design from the School of Mathematics, University of Leeds. He is the director of the Centre for Visual Computing at Bradford. His research interests include geometric and functional design and three-dimensional (3-D) imaging. He has a number of patents on techniques relating to geometry modeling, animation, and 3-D data exchange.

David Connah has a multidisciplinary background in biology (BSc), artificial intelligence (MSc), and digital imaging (PhD), and specializes in the role of color in digital imaging and computer vision applications, from both computational and perceptual perspectives. His research interests include multispectral imaging, image fusion, camera characterization, and human perception and performance. He has published over 25 journal and conference papers and is the holder of 3 patents in image processing.