|

|

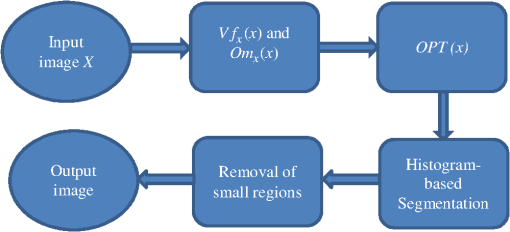

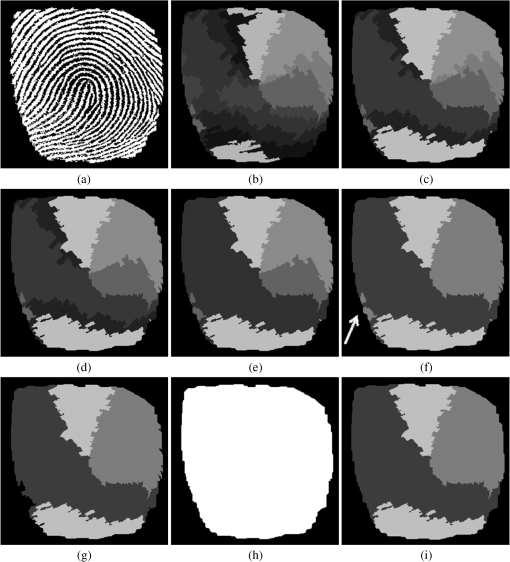

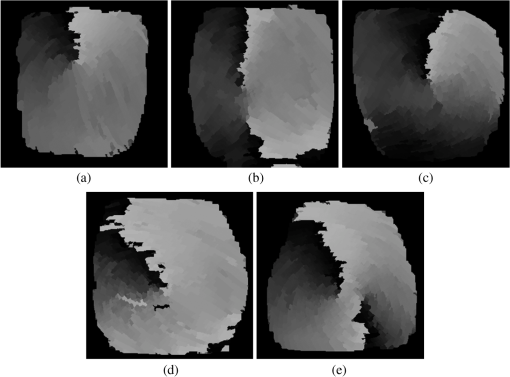

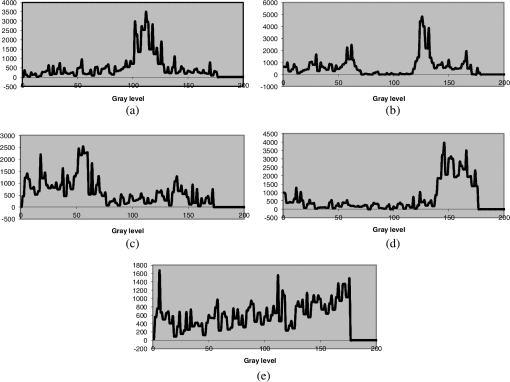

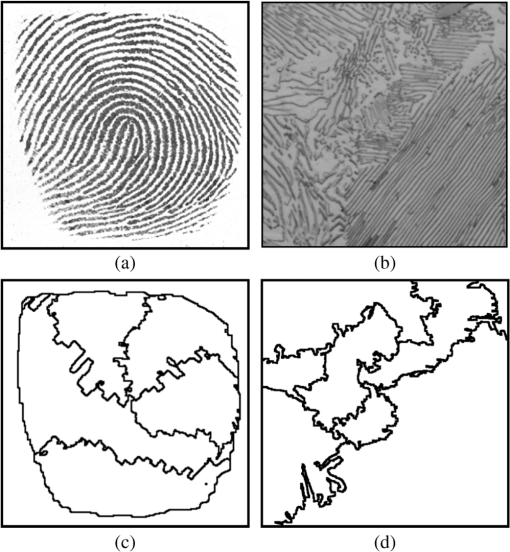

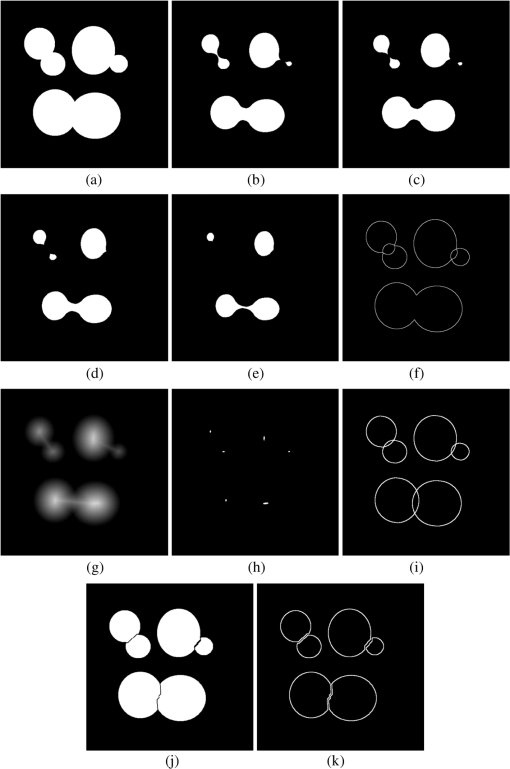

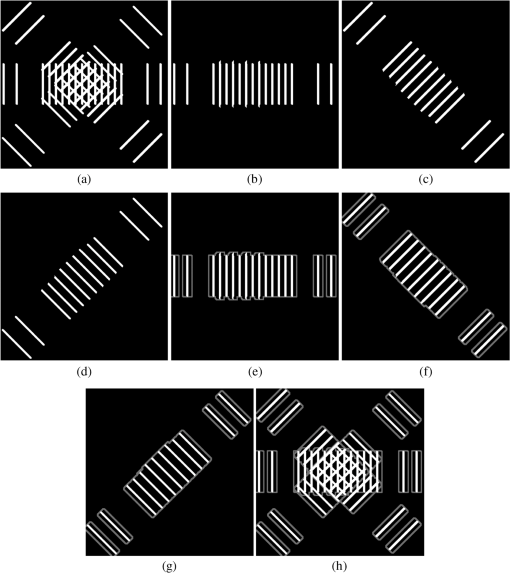

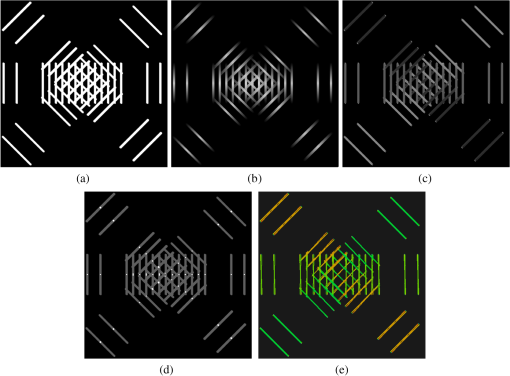

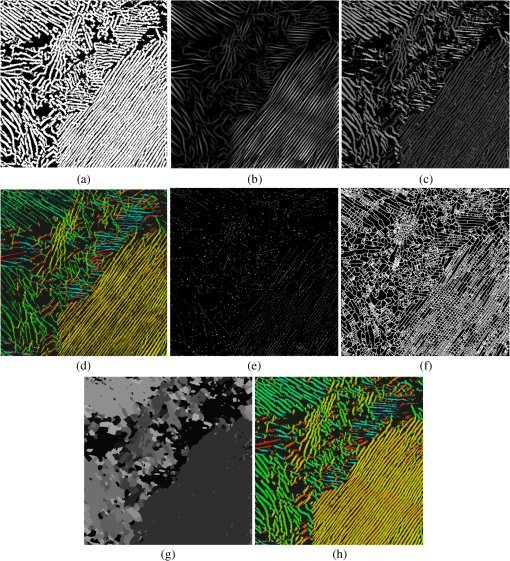

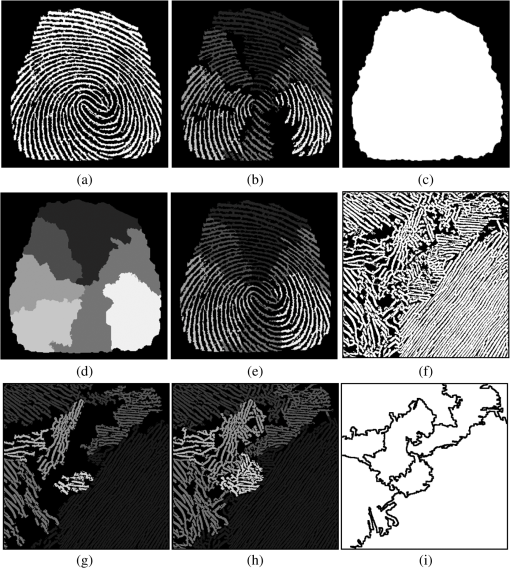

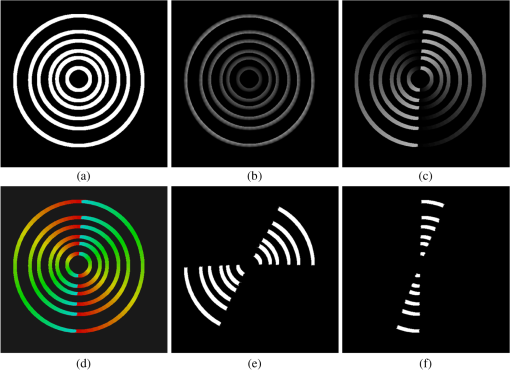

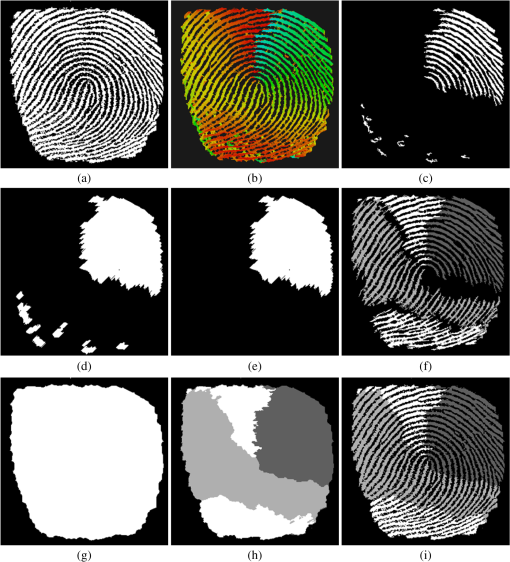

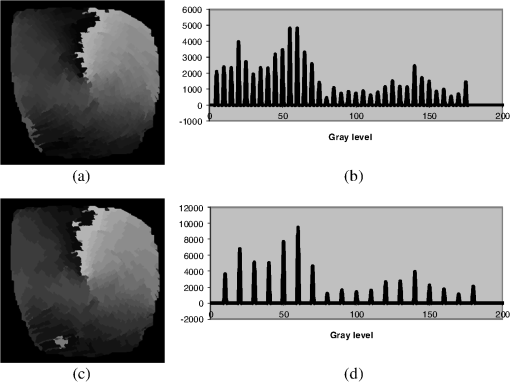

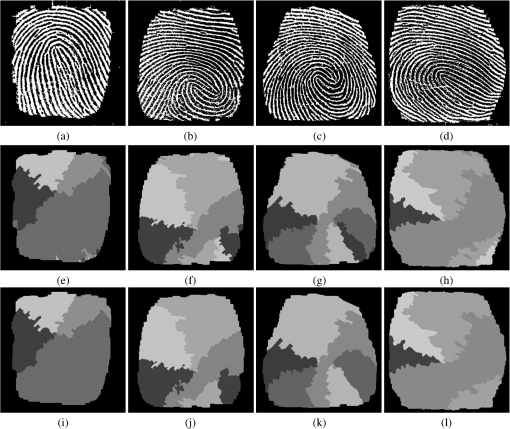

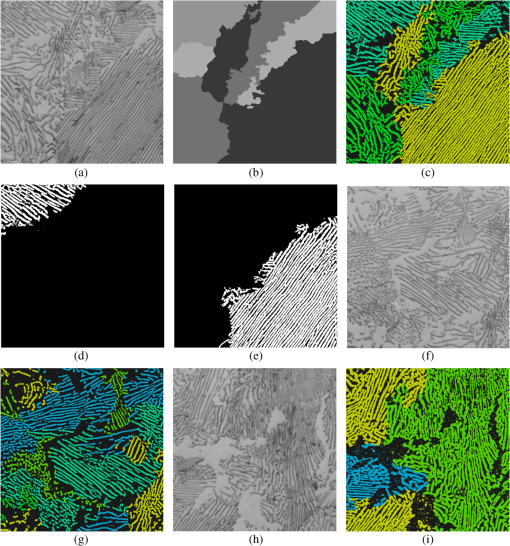

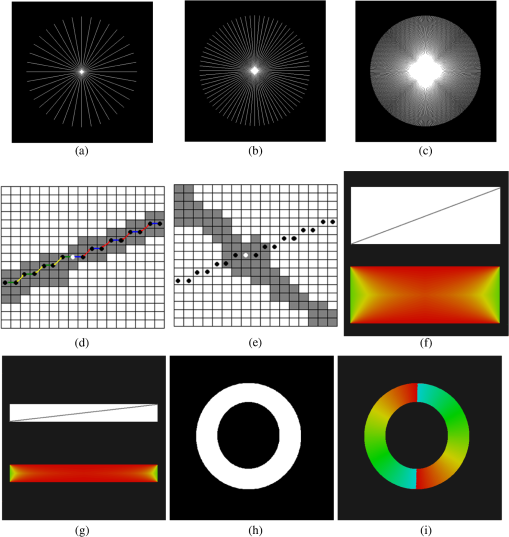

1.IntroductionAlthough anisotropic structures are frequently found in many classes of images (materials, biometry images, biology, remote sensing, among others), few works dealing with directional analysis in morphological image processing have been carried out. There are some studies that treat this subject from an algorithmic point of view,1,2 while application analysis has been addressed elsewhere.3–22 Among these works, the most interesting is that proposed by Soille and Talbot,1 in which the authors carry out a comprehensive study on directional morphological filtering. Despite the numerous applications of anisotropic structures, it is in the domain of fingerprint recognition [see Fig. 1(a)], which is the most widely used biometric features for personal identification today, where the study of directional structures based on field-orientation detection is an active subject of research. Some recent papers on this subject are Refs. 5 and 8 to 13, just to mention a few. In particular, the work of Oliveira and Leite5 is of great interest since there are only few works in the literature that characterize fingerprints based on mathematical morphology. Fig. 1Examples of directional structures. (a) Fingerprint image. (b) Pearlitic phase image. (c) and (d) Segmented images.  However, orientation detection also plays a fundamental role in other domains.14–22 Lee et al.14 proposed a method based on oriented connectivity for segmenting solar loops, while Kass and Witkin16 introduced a method to analyze oriented patterns in wood grain. On the other hand, a new approach has been introduced by Sandberg and Brega17 for segmenting thin structures in electron micrographs. Recently, Truc et al.19 proposed a vessel enhancement framework for angiography images based on directional filter bank, whereas Wang and Tseng20 introduced an adaptive dual-directional filter based on a slope filter. This last work is focused on the filtering of nonground points from point clouds to obtain terrain relief from airborne LiDAR data. Also, directional filtering has been used to remove noise.21,22 In addition, the orientation may play a fundamental role to characterize structures in materials science.3,23,24 For example, the pearlite phase displays a morphology in the form of parallel lines [see Fig. 1(b)], and when further grains are formed, these can change direction. In this case, the extraction of directional structures from an image becomes a useful technique. It is well known that many physical and mechanical properties in materials are closely related to microstructure. Thus, great interest exists in the use of image-processing techniques for determining the relationship between microstructure and material properties. Given the interest in orientation pattern models for characterizing structures, this paper investigates the use of mathematical morphology for modeling orientation structures. In particular, we focus on the problem of segmenting images containing directional structures as those shown in Fig. 1. As in the human vision system, computer image processing of oriented image structures often requires a bank of directional filters or template masks, each of them sensitive to a specific range of orientations. Hence, one investigates the use of a bank of filters based on directional morphology. Particularly, we will focus on the most sensitive filters that can detect the critical elements of the structure. In the literature, several works characterize directional structures based on the computation of gradients, which can be formalized in terms of mathematical morphology. See, for example, Refs. 9, 16, and 18. The problem with gradients is that they work at pixel scale; they are very sensitive to noise and a final stage is required to enhance directional structures. Therefore, the main idea in this paper is motivated by another approach that permits to take into account the whole context of the structures contained in an image. Mainly, two methods for segmenting directional structures are introduced. First, a segmentation method based on a composition of connections generated by openings and dilations using directional structuring elements is introduced. This method enables the correct segmention of this type of structures. The major drawback of this approach is the number of parameters that must be taken into account. Second, to decrease the complexity, an approach based on directional erosions, which takes into account the connectivity in the viscous lattice sense, is proposed. Strictly speaking, this last method requires only one parameter that is linked to the number of regions of the segmented image. This paper is organized as follows. In Sec. 2, the concepts of morphological filter, directional morphology, and the connected class notion are presented. In Sec. 3, a method for segmenting images based on a composition of connections is introduced. Subsequently, in Sec. 4, the notions of viscosity and orientation functions derived from the supremum of directional erosions are proposed. Also, an algorithm for segmenting the images based on the notion of the orientation partition function (OPF) and the histogram of orientations is proposed. The OPF is built using the viscosity function, the orientation function, and the catchment basins transformation. The proposed algorithms are discussed in Sec. 5, and the limits of the approach are addressed as well. 2.Some Basic Concepts of Morphological Filtering and Connections2.1.Basic Notions of Morphological FilteringMathematical morphology is mainly based on the so-called increasing transformations.25–27 A transformation is increasing if for two sets , such that . In the gray-level case, the inclusion is substituted by the usual order, i.e., let and be two functions; then for all . Then, a transformation is increasing if for all pair of functions and , with . In other words, increasing transformations preserve the order. A second important property is the idempotence notion. A transformation is idempotent if and only if . The use of both properties plays a fundamental role in the theory of morphological filtering. In fact, one calls morphological filter to all increasing and idempotent transformations. The basic morphological filters are the morphological opening and the morphological closing with a given structuring element. Here, represents the elementary structuring element containing its origin (for example, a square of ), is the transposed set (), and is a homothetic parameter. Then, the morphological opening and closing are given by where the morphological erosion and dilation are expressed by and , respectively, and is the inf operator and is the sup operator. Henceforth, the set will be suppressed, so the expressions and are equivalent (). When the parameter is equal to one, all parameters are suppressed ().In the present work, we are particularly interested in morphological directional transformations that are characterized by two parameters. A directional structuring element depends on its length (size ) and the slope (angle ) of the element. Thus, the set of points of a line structuring element is computed by two sets of points for . These sets of points are defined by the following expressions: and the set of points . This means that the structuring element is a symmetric set. Similar expressions can be found for . Then, the morphological opening and closing are given by where the morphological erosion and dilation are given by and .2.2.Connected ClassesOne of the most interesting concepts proposed in mathematical morphology is the notion of connected classes introduced by Serra.25 Intensive work has been done on the study of connectivity classes. Recent studies dealing with this subject have been carried out in Refs. 2829.30.31.32.33.34.35.36.37. to 38. In fact, a connection or connected class on is a set family that satisfies the following three axioms: An equivalent definition to the connected class is the connection openings expressed by the following theorem, which provides an operating way to act on connections. Theorem 1 (Connection openings)The datum of a connected class on is equivalent to the family of the so-called connection openings such that Observe that , constitutes a partition of . In the present work, we will focus on three connections: connections generated by extensive dilations and openings, and the connection on viscous lattices. The first one, based on extensive dilations, acts by clustering the arc-wise connected components.25,29 Definition 1.Let , , be a system of connectivity openings on corresponding to a connectivity class . For each , define the operator on by Another connection31 is given by the openings as follows. Definition 2.Let be a connectivity class and an opening on . Let be the subset of consisting of , all singletons , and all elements of . Then, is a connectivity class with associated connectivity openings given by Then, is a connection on , and for any , the connected components according to are the connected components of and the singletons of . Finally, let us introduce the connection on viscous lattices.35,39 In Ref. 35, the usual space is replaced by a viscous lattice structure given by the family ; with , that is both the image under an extensive dilation and under the opening . Now, let be a connected class on . Since dilation is extensive by definition, it preserves the whole class , and the adjoint erosion treats the connected component independent of each other, i.e., . Given that Serra proposes to define connections on before the dilation , thus, one expresses the viscous connected components of a given set as a function of its components (arc-wise connected components) as According to this proposition, the number of connected components depends on the viscosity parameter . Figure 2(a) illustrates the original image composed of three arc-wise connected components or three components at viscosity . Figures 2(b) to 2(e) show the eroded images by disks of sizes 20, 22, 27, and 36, respectively. Then, at viscosity , one has four connected components, whereas at viscosity , the image is composed of five connected components [see Figs. 2(b) and 2(c)]. The image in Fig. 2(f) shows the connected components for viscosity . However, by considering disks as the elementary shapes of the image, it is not possible to detect six connected components for any viscosity. The solution to this problem is to select the connected components at different viscosities (scales). The traditional algorithm is the well-known ultimate erosion, and it consists of selecting the connected components at viscosity such that they are removed for viscosity . Another way to select the connected components is to compute the distance function as shown in Fig. 2(g) and detect its maxima. The image in Fig. 2(h) shows the ultimate eroded components for viscosities (sizes) 25, 34, 45, 64, 66, and 68, whereas Fig. 2(i) illustrates the connected components in the viscous lattice sense. This is a most interesting connection since it exploits the goal in binary image segmentation, which is to split the connected components into a set of elementary shapes. Fig. 2Viscous connectivity. (a) Original image (b), (c), (d), and (e) Eroded connected components at viscosity , , , and , respectively. (f) Connected components at viscosity ,. (g) Distance function. (h) Maxima of distance function (ultimate eroded). (i) Connected components in the viscous lattice sense computed from the maxima. (j) Segmentation by watershed. (k) Connected components.  3.Image Segmentation of Directional Structures Based on a Composition of Connections: Connectivities Generated by Openings and DilationsImage segmentation is one of the most interesting problems in mathematical morphology.40–42 It is well-known that the notion of connectivity is linked to the intuitive idea of a segmentation task, where the objective is to determine a set of elementary shapes (connected components) that will be processed separately. The result of the segmentation depends on the notion of connected components. If one supposes a priori that the elementary shapes of the set in Fig. 2(a) are disks, then the viscous connection gives better results than the watershed transformation [see Figs. 2(j) and 2(k)]. Similarly, for the images shown in Fig. 1, the problem lies in determining what a connected component is. Different approaches can be chosen to introduce such a concept based on the connected classes notion. According to the usual connectivity, the set in Fig. 3(a) is composed of 13 connected components. However, the number of connected components of this set depends on the connection used to analyze it. For example, using the connection generated by openings [Eq. (4)], the connected components of the set are given by all singletons and all elements of . Nevertheless, from a practical point of view, the use of a partial connection of is more interesting as proposed by Ronse.32 In this case, for the partial connection , the connected components of a set are the connected components of , whereas will be the residual. Then, by using the partial connection , this set can be interpreted differently. Consider three-directional openings with and and the derived partial connection openings given by Fig. 3Composition of connections. (a) Original image ,. (b), (c), and (d) Connected components according to , and , respectively. (e), (f), and (g) Connected components according to , and , respectively. (h) Connected components according to the composed connectivity.  For , the original image has 17 connected components as illustrated in Fig. 3(b), whereas for , the image is composed of 13 connected components as shown in Fig. 3(c). Finally, for , there also exist 13 connected components as illustrated in Fig. 3(d). Nevertheless, from a practical point of view [see Figs. 1(c) and (d)], the connected components according to , must be analyzed by a clustering process derived from the connection in Definition 1. One requires a connectivity generated by the composition of the partial connection and the connection . Thus, the connected components are given by By using directional dilations with parameters and applied to Figs. 3(b) to 3(d), respectively, one computes the images in Figs. 3(e) to 3(g). According to this composed connection, the set has five connected components for , , and as illustrated in Figs. 3(e) to 3(g). Then, the set has 15 connected components as shown in Fig. 3(h). In the real case, see Figs. 1(a) and 1(b), the task of extracting the connected components becomes a difficult problem since they are not well separated and belong to different scales (see Fig. 4). For example, the composed connection using two-directional openings of size and and directional dilations using and were applied to the image in Fig. 4(a). Then, the largest connected components, illustrated in Figs. 4(b) and 4(c), were extracted according to and . Now, the main problem lies in deciding which of these connected components represents a region better in the original image. A simple criterion to take this decision is to choose the largest one. Thus, the solution to extract the largest connected component from the set lies in applying to this set the operator with fixed size parameters and , and perpendicular to . Then, one must take the largest connected component for all . As a precision, when the operator is applied, it is preferable to make the intersection with the original image. Figure 4(d) illustrates this component. Next, one computes the residual as shown in Fig. 4(e), and the operator is applied again, by decreasing to compute the largest connected component of . The procedure is iterated until a given number of connected components NC is obtained whose union is a partial partition of the image. The following procedure illustrates the method. Algorithm 1Image segmentation using a composition of connections (partial partition).

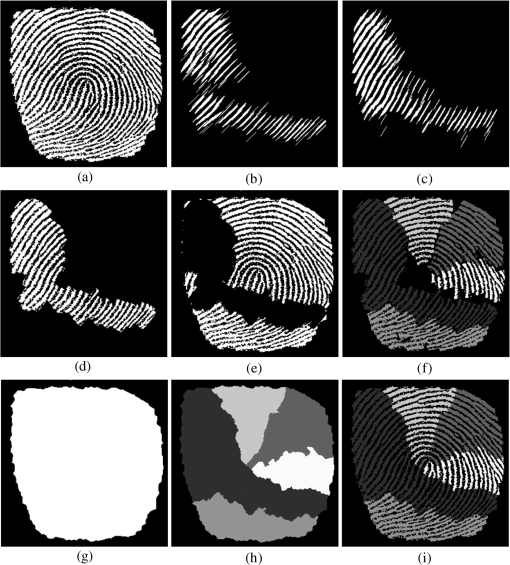

Fig. 4Fingerprint segmentation based on a composition of connections. (a) Original image , (b) and (c) connected components according to and , (d) largest connected component , (e) residue , (f) five connected components, (g) geodesic mask, (h) and (i) segmented image.  Depending on the images (fingerprint or pearlite phase images), the method requires a careful examination of the images to establish the largest size of the directional openings and the amount to decrease the scale .Conversely, the parameter is very robust, and the size works correctly for all images. Figure 4 illustrates the performance of the algorithm. The image in Fig. 4(f) shows five connected components computed by this procedure. This is the partial partition . The idea of computing the final partition comes from the work of Ronse,32 and it consists of building the influence zones of the connected components in Fig. 4(f) inside the image in Fig. 4(g). This last image was computed from the set by applying a morphological closing. The influence zones are illustrated in Fig. 4(h). Figure 4(i) shows the final partition. The main problem of this method is the establishment of paramenters and , which it will be described in Sec. 5. In the next section, a method not requiring these parameters is introduced. 4.Histogram-Based Segmentation Using the Orientation Partition FunctionIn this section, an approach to decrease the complexity of the segmentation problem is introduced. The flow chart in Fig. 5 illustrates the different steps to carry out a segmentation. It consists of codifying the size and orientation of the structures by means of two functions: viscosity and orientation . Then, a method to establish relationships between maxima of the viscosity function is proposed. This information is codified in a gray-level image called OPF. Subsequently, a systematic merging process is performed on this image to obtain the final segmentation based on the histogram of the OPF. Finally, in the last step, small regions are removed. 4.1.Size and Orientation Codification Based on Viscous ConnectivityA method to characterize the orientation field was proposed by Soille and Talbot.1 The directional morphological openings and closings are used to filter bright and dark structures, respectively. Among the different functions proposed by the authors for codifying structures, the following functions were used to extract the orientation information of the images. Particularly in fingerprints, the function is used to extract the orientation of bright ridges, whereas enables the extraction of dark ridges. This approach is general in different aspects: it can be applied to gray-level and binary images, and to all types of images containing directional structures. Here, we are strictly interested in segmenting images that can be considered binary images, as those in Fig. 1. We are looking for an approach where the scale and orientation information of the structures of the image are easily accessible to determine its connected components. First, let us define the directional structures of an image by assigning a viscosity to each pixel in the image. Each point of the structure is described by two quantities: the angle to the horizontal axis and a weight. Orientations have a periodicity of 180 deg in comparison to vectors, which have a periodicity of 360 deg. On the other hand, the weight will be given by the largest viscosity element, i.e., the longest line segment completely included in the structures. Two functions that codify the directional structures, by size and orientation, are introduced below. First, let us define a new function that codifies size information. Definition 3.The viscosity function is a transformation that associates the largest viscosity with each pixel of a set . A way to compute this function is as follows. Let be a given set; one begins with a small structuring element by taking into account all orientations to compute the set . This means that all the points of the image selected are those not removed by at least one of the directional erosions. Next, is increased by one at all points belonging to the set ; this procedure is continued by increasing the size of the structuring element until the structure (the image) is completely removed. This means the procedure is carried on until a value is obtained such that and . As expressed before, the gray levels of the function are the sizes of the longest lines that can be included in the structure. For the structures that can be considered as composed by a set of lines [Figs. 1(a) and (b)], the maxima of the function play a main role since they codify the longest lines that take into account the whole context of the image. In this manner, the position of the largest structuring elements that are completely included in the structure is obtained by means of the maxima of . However, the angles of these structuring elements (line segments) are not accessible from the image . Therefore, a second function associated with the viscosity function needs to be introduced. Definition 4.The orientation function is a transformation that associates the angle of the viscosity in with each pixel of a set . The directions of the line segments are stocked in a second image called orientation function, when the viscosity function is computed. Figure 6 shows an interesting example where the maxima play a fundamental role to characterize the directional information. Figures 6(b) and 6(c) illustrate the viscosity and orientation functions, respectively, computed from the image in Fig. 6(a), whereas the image in Fig. 6(d) displays the original image and the maxima (in white) of the viscosity function. Particularly, observe that there exists only one maximum for each component. The viscosity and orientation functions can be used for extracting the line segments characterizing the structure. To illustrate the information contained in these images, a line segment of size was placed at each maximum point “,” with an angle given by the image . This reconstruction can be observed in Fig. 6(e). It seems that maxima are good features to describe directional structures. Let us illustrate this reconstruction with a real example (pearlitic phase micrograph) that is shown in Fig. 7. The images in Figs. 7(b) and 7(c) illustrate the image of the viscosity function and the image containing the orientation , respectively, computed from the binary image in Fig. 7(a). Finally, the image in Fig. 7(d) has been reconstructed using the respective viscosity and orientation functions. Only the maxima of the viscosity function were used in the reconstruction. Therefore, we can observe that both features, the maxima of the viscosity function and the orientation function, play a main role to extract directional structures. The maxima of and the orientation image will be used to propose a method for segmenting the images. Fig. 6Viscosity and orientation functions. (a) Original image. (b) Viscosity function. (c) Orientation function. (d) Maxima of the viscosity function (in white) and original image. (e) Reconstructed objects.  Fig. 7Orientation partition function. (a) Original image. (b) and (c) Viscosity and orientation functions, respectively. (d) Reconstructed image. (e) and (f) Maxima of the viscosity function and its image partition computed by the watershed transformation. (g) Catchment basins of the partition weighted by the orientation partition function (OPF). (h) Orientation representation of image using the OPF.  4.2.Orientation Partition FunctionSince the maxima of the viscosity function and their orientations codified in the orientation function play a main role to reconstruct the original image, we propose method to establish the relationships among the maxima of the viscosity function. The main idea in this approach consists of building an image that codifies the neighborhood relations between the maxima and their orientations. A transformation that takes into account these relationships among maxima is the watershed, by working with the inverse image of . Therefore, this transformation will be used to find basic relations between the connected components (characterized by maxima). However, instead of using the watershed lines, the catchment basins associated with the watershed will be used. In fact, the catchment basins related to the maxima can be computed by means of geodesic dilations. Figure 7(e) shows the maxima of the image in Fig. 7(b), whereas Fig. 7(f) illustrates the watershed image computed from the inverse image of . Figure 7(g) shows the catchment basins weighted by the values of the angles of the image in Fig. 7(c) at the position of the regional maxima. This new function in Fig. 7(g) is the OPF, whereas the image in Fig. 7(h) is the representation of the orientation of the image in Fig. 7(a) using the OPF. By analyzing a region of the image in Fig. 7(g), one can identify the neighboring regions presenting similar orientations. These regions may belong to the same connected component. To take into account these neighborhood relationships, the histogram of the OPF will be used to carry out the merging process. 4.3.Histogram-Based SegmentationIn this section, we discuss an approach for segmenting directional structures based on the histogram of the OPF. It is well-known that this approach can yield very good results when the objects have distinct ranges of intensity. This is not strictly the case for directional structures; however, in the case of fingerprints, the following hypothesis can be posed: two neighboring regions in the image have neighboring values in the histogram of orientations. Hence, the spatial analysis is practically equivalent to the gray-level analysis in the histogram. In the case of pearlite phase images, one assumes that the intensities have distinct ranges in the OPF. This hypothesis is supported by the fact that the grain structure displays a morphology in the form of parallel lines, and when forming another grain, these change direction. In this case, the histogram segmentation approach can also be used for segmenting the images. Thus, after computing the histogram of the OPF associated with the original image, the histogram is clustered independently by splitting it into several sections corresponding to representative classes of pixels in the image. Therefore, the segmentation problem is reduced to finding the threshold values that dissect the histograms in a finite number of clusterings. Adjacent sections of the histogram will be merged according to the following strategy. A one-dimensional (1-D) adjacency graph of the histogram sections is built, and the two adjacent sections showing the smallest value of a given criterion in the histogram are identified and merged. The procedure of merging adjacent regions is carried out until a number of predefined classes is achieved. This solution that resembles the supervised approach proposed by Lezoray43 enables a reduction in the number of regions of the OPF in an optimal manner. Several criteria for merging adjacent sections were studied. However, the best results were found by using the gray-level variance of the regions represented by two adjacent sections of the histogram. Let be the sections of histogram , with . The variance value is given by where the mean value is is the number of pixels of gray level and [resp. ] is the lowest (resp. highest) gray level in section . The adjacency graph is updated each time two sections are merged and the algorithm is iterated until the number of sections in the histogram is . Figure 8 illustrates some segmented images for different values. The output images in Figs. 8(b) to 8(f) were computed from the original image in Fig. 8(a) using , respectively. The segmented images form a hierarchy of partitions depending on the parameter. Thus, let be the segmented image of . With regard to partitions, one has that for any and with ,; this implies that . It is clear that the number of regions of the segmented images does not correspond to the number of selected clusters as shown in Fig. 8. However, despite this lack of correspondence, these images are practically segmented in the main regions. For example, to compute the image in Fig. 8(f), the selected number of sections of the histogram was , but the segmented image has the four largest regions that are representative of the directional structures of the image in Fig. 8(a).4.4.Removal of Small RegionsEven when the output images computed by the histogram-based segmentation contain large representative regions, the method also generates small classes as those signaled by an arrow in Fig. 8(f). Small classes turn out to be inherent in segmenting techniques. To remove these regions, another approach, such as the use of the regions adjacency graph (RAG) structure, could be used for obtaining the final segmentation. By merging neighboring regions with close values in the gray level (orientation criterion) using the RAG, the order between partitions is preserved. Nevertheless, two main problems arise when removing small regions with the RAG structure: the complexity of the method and the fact that small regions have no correlation with their neighboring ones. For instance, the small regions signaled by an arrow in Fig. 8(f) have a gray level (orientation) that is not close to the gray level of the neighboring region, which means that a large criterion value is required to merge these regions. A large value of the criterion carries the risk of merging representative regions. Thus, we prefer to use a recent and formal approach proposed by Serra,44 which also preserves an order. Serra establishes what he calls grain building ordering. Consider two partitions and . Then, It does not preserve inclusion since some connected classes of the smaller partition, , may lie partly or even completely outside of . Figure 8 illustrates this concept. The image in Fig. 8(g) (partition ) has been computed by removing the small regions of the image in Fig. 8(f) (partition ). Only the four largest regions remain intact. Now, to compute the output image in Fig. 8(i), the influence zones of the connected components in Fig. 8(g) are built inside the mask in Fig. 8(h). This new partition, say , is greater than partition , i.e., the order is preserved (). This is a most interesting method for removing small regions given its simplicity and perfomance, which will be illustrated in the next section. 5.Results and Discussion5.1.Image Segmentation Based on the Connectivities Generated by Openings and DilationsThe first algorithm described in Sec. 3 has been tested on several images with good results. Here, we illustrate other examples and comment on some drawbacks of the approach. Figure 9 shows two examples of segmented images. Figure 9(b) shows the connected components computed from Fig. 9(a) using this procedure, whereas Fig. 9(d) displays the influence zones inside the mask in Fig. 9(c), and Fig. 9(e) shows the segmented image. Another example is illustrated in Fig. 9(f). Figure 9(g) shows seven connected components computed by the mentioned algorithm, whereas Figs. 9(h) and 9(i) display the segmented image. In this last example, the influence zones of the connected components in Fig. 9(g) are built using the whole image as a mask. However, there are some drawbacks in this method. One problem is the need for determining the values of parameters and , and a second one is the large computation time. With regard to the former, it requires to carry out some experiments to select the largest scale and the decrease . This can be avoided by computing all the scales as in the viscosity function; nevertheless, it risks to increase considerably the computation time. Fig. 9Examples of segmentation based on a composition of connections. (a) Original image . (b) Connected components. (c) Mask image. (d) Influence zones of connected components in (b) inside the mask image in (c). (e) Segmented image. (f) Original image. (g) Connected components. (h) and (i) Segmented image.  Now, concerning the latter problem, even computing few connected components, the method is slow when compared with the other one that uses the OPF and the histogram-based segmentation. Let us illustrate the number of operations requiered by this algorithm. We express the computational time in terms of basic erosions and dilations (size 1), and one considers that the contribution of the basic erosion and dilation is the same. Principally, two steps of the algorithm involve a considerable amount of computational time: the use of openings and dilations to compute the connected components at each orientation, and the selection of the largest connected component. To determine the first connected component, one needs to apply erosions and dilations (directional openings of size ), followed by dilations. These transformations are applied at each point of the image and for all direction ; this means basic operations for each point of the image, where is the angle step ( for the examples in Fig. 9). Moreover, this is the number of operations for each connected component; then operations are necessary to obtain the segmented image. Regarding the second step—the selection of the largest connected components—it also requires scannings of the image. Therefore, the number of scannings required to compute the segmented image makes the algorithm very slow. 5.2.Some Comments About the Codification of Size and OrientationIn Sec. 4, when the viscosity and orientation functions were introduced, we claimed that the pair of data, maxima of the viscosity function and their orientations, contains the main information of the original structures. This hypothesis was later used to build the OPF to segment the images. Before establishing this hypothesis, several experiments were carried out with a set of images. Figures 10(f) to 10(j) illustrate some examples of reconstructed images. The reconstruction percentage is , which seems small. However, by comparing the images in Figs. 10(f) to 10(j) and those of Figs. 10(a) to 10(e), we appreciate that the reconstructed images are representative of the original images since they contain the main shapes. The remaining information is redundant. Then, the maxima of the viscosity function play a crucial role in the characterization of directional structures. Moreover, the orientation function faithfully represents the shape of the structures. Let us illustrate this assertion with examples. Figures 11(b) and 11(c) show the viscosity function and its associated orientation function of the original image in Fig. 11(a). In addition, a color representation of the orientation function is also shown in Fig. 11(d). The gray-level orientation image enables the introduction of a connectivity criterion. Figures 11(e) and 11(f) illustrate the connectivity introduced by the orientation of the structures. The image in Fig. 11(e) was computed from the image in Fig. 11(c) using a threshold between 160 and 172 deg (gray levels in the image). Then, by using the arc-wise connectivity, the number of connected components between these angles can be determined. Similarly, for the image in Fig. 11(f), the number of connected components with an orientation lying between 92 and 152 deg can also be computed. Fig. 10Examples of reconstructed images using the máxima of the viscosity function. (a) to (e) Original images. (f) to (j) Reconstructed images.  Fig. 11Extraction of connected components using the orientation function. (a) Binary image. (b) Viscosity function. (c) Orientation function. (d) Color representation of (c). (e) Threshold between 160 and 178 gray levels computed from the image in (c). (f) Threshold between 92 and 152 gray levels computed from the image in (c).  Now, Fig. 12(a) illustrates a real example having similar directional structures as those of the image in Fig. 11(a). In Fig. 12(b), the orientation function in color representation is shown. To extract directional structures, a threshold in a given interval is applied to this last image. For instance, the image in Fig. 12(c) was computed using a threshold between 100 and 180 deg (gray levels in the orientation function). A main structure and some small ones are observed in this image. In order to extract the principal structure, a directional dilation at 45 deg (more or less perpendicular to the main structure) is applied to the image in Fig. 12(c) to compute the image in Fig. 12(d). Next, the largest connected component is extracted as shown in Fig. 12(e). A similar procedure is carried out for computing other directional structures, as displayed in Fig. 12(f). Finally, one builds the influence zones of the regions in Fig. 12(f) inside the image in Fig. 12(g). Figures 12(h) and 12(i) show the segmented image. Even if this algorithm is very fast, particularly when the method proposed below in Sec. 5.5 to compute the viscosity and orientation functions is applied, the main problem lies in the fact that it is not easy to compute an optimal segmentation. Fig. 12Example of segmentation using the orientation function. (a) Binary image. (b) Orientation function. (c) Threshold between 100 and 180 gray levels computed from the image in (b). (d) Directional dilation at 45 deg. (e) Largest connected component. (f) Four connected components. (g) Mask image. (h) and (i) Segmented image.  5.3.Some Comments About the Histogram-Based SegmentationIn the proposed algorithm for the histogram-based segmentation in Sec. 4, all the sections of the histogram were used for segmenting the images. However, in a first solution, one may suppose that the representative sections (clusters) of the histogram are located around the regional maxima. Let us illustrate some problems using this assertion. Figures 13(a) to 13(e) show the OPFs of the original images in Figs. 10(a) to 10(e), whereas in Figs. 14(a) to 14(e), the histograms of the OPFs are illustrated. Several comments about the behavior of the histograms can be made. First, observe that a main representative section in the histogram, linked to a principal directional structure, is always present and this behavior is reflected in the histogram by a wide section, such as those of Figs. 14(a) and 14(d). Even in the case of the histogram shown in Fig. 14(e), corresponding to the OPF image in Fig. 10(e), this main structure is present and can be detected. Nevertheless, small representative directional regions are also included and can be also detected by a clustering process carried out in the histogram. Now, as expressed above, a first solution for dissecting the histogram can be achieved by computing a 1-D watershed of the complement (). Then, maxima of (minima of ) are supposed to mark the main regions of the images. Nevertheless, one observes that maxima are numerous in the histograms of Fig. 14; this may cause an oversegmentation problem. Instead of using the minima of , one can use the so-called h-minima as seeds for the 1-D watershed. This avoids oversegmentation. This idea of segmenting images comes from the work of Lezoray.43 However, the major drawback of this approach for selecting the main regions of the structure does not always yield good results, that is, the maxima of the histogram are not strictly representative of the principal structures. Moreover, when an angle step different from 1 deg is used for computing the OPF as illustrated in Fig. 15, all the values in the histogram, multiple of are maxima. This is the reason why in the algorithm proposed in Sec. 4 we take all the possible regions in the histogram with the same gray level for segmenting the images. This means, 180 sections for , or 90 sections for , and so on. Fig. 15Examples of histograms of OPFs. (a) and (b) OPF using a five-degree step and its corresponding histogram. (c) and (d) OPF using a 10-degree step and its corresponding histogram.  To illustrate the performance of the histogram-based segmentation approach using the OPF, several examples are displayed in Figs. 16 and 17. First, we show the segmentation process in the fingerprint case. The images in Figs. 16(e) to 16(h) were computed from those in Figs. 16(a) to 16(d) using the histogram-based segmentation. All segmented images present small regions. For removing these regions, the method described in Sec. 4.4, based on the concept of grain building ordering, was applied to the images in Figs. 16(e) to 16(h). The images in Figs. 16(i) to 16(l) illustrate the final segmentations. In addition, Fig. 17 shows the examples of pearlite phase images. They have been segmented using the histogram-based segmentation approach followed by the algorithm for removing small regions. Figures 17(b) and 17(c) illustrate the segmented images computed from the image in Fig. 17(a), whereas in Figs. 17(d) and 17(e) two grains are shown. Finally, Figs. 17(f) and 17(h) show two last examples of pearlite phase images, and in Figs. 17(g) and 17(i) their respective segmented images in color representation are shown as well. Fig. 16Examples of the histogram-based segmentation. (a) to (d) Original images. (e) to (h) Segmented images using variance criteria . (i) to (l) Segmented images corresponding to those of (e) to (h) without small regions.  Fig. 17Pearlitic phase image segmentation. (a) Original image. (b) Segmented image using variance criterion . (c) Color representation. (d) and (e) Two connected components. (f) and (g) Original and segmented images using variance criterion . (h) and (i) Original and segmented images using variance criterion .  Now, let us make some comments concerning the computation time of the method. Four steps are required to obtain the segmented image as shown in Fig. 5. Three of them are determined very fast: OPF determination, histogram-based segmentation, and small regions removal. The step that involves a considerable amount of operations is the computation of the viscosity and orientation functions. Unlike the method based on the composed connectivities (Sec. 3) that uses openings and dilations, this step requires only erosions. If these functions are computed using Eq. (9) directly, the method becomes very slow, erosions at each point of the image for every scale . However, by using the algorithm described below in Sec. 5.5, the computation time is considerably reduced. The idea in the algorithm described below consists of determining the critical line segments at each point of the image, i.e., the longest line segments that can be placed inside the structure. Once the viscosity and orientation functions are calculated, they are used for the computation of the OPF, which needs only one scanning to obtain the catchment basins associated to the watershed. The existence of an efficient algorithm to compute the watershed is well-known. Regarding the histogram-based segmentation, the computation time is negligible since this step involves the calculation of Eqs. (10) and (11) on a vector of length 180. Finally, the removal of small regions is also a fast algorithm given that two procedures are only required: computation of the largest regions and the influence zones determination. 5.4.Comparison of the Method with Manual Segmentation of Pearlitic Phase ImagesTo test the performance of the method based on the OPF and histogram-based segmentation, the segmented images from this algorithm were compared with manual segmentations carried out by a materials science specialist. To our knowledge, the work of Jeulin and Kurdy3 is the only article addressing the segmentation of pearlite phase images. Since in their work there is no comparison of their method, we decided to evaluate the performance with the ground truths provided by an expert. There are several works in the literature focused on testing segmented images with ground truths (see Refs. 4546. to 47). Here, we will focus on the use of the index proposed by Martin et al.45 Let and be the partition computed from image using the proposed method and the ground truth, respectively. Let denote the set difference and the cardinality of set . If is a region (class or element of the partition) at point in the segmentation , the local refinement error between the classes and is defined as Note that this local error encodes a measure of refinement in one direction only. When is a refinement of the error is zero. Given this local refinement error at each direction for each pixel , a way to combine the values into an error measure for the entire image is the local consistency error. Let be the number of pixels of the image. Then Now, the images were manually segmented by the specialist as illustrated in Figs. 18(a) to 18(c). The error was computed at each point of the image between the ground truth images and those segmented by the proposed method [Figs. 17(c), 17(g), and 17(i)]. The images in Figs. 18(d) to 18(f) illustrate this error. We observe that the error is greater for the border pixels [white color in Figs. 18(d) to 18(f)], whereas it is smaller (dark regions) for larger well-defined grains. See, for example, the image in Fig. 18(d). Another source of error is not well-defined grains. See, for example, the region (red ellipse) of the image in Fig. 18(b) and its corresponding error image in Fig. 18(e). In this region, the pearlite phase does not display a morphology in the form of parallel lines. Similarly, when one has small grains, neighboring of a larger one, it is not possible to carry out a proper segmentation as shown in Fig. 18(f). However, from a practical point of view, the pearlite phase images are, in general, segmented correctly. Finally, the mean error was computed on a set of images using Eq. (12), finding a value of 0.19. 5.5.Nonparametric Algorithm to Build the Viscosity and Orientation FunctionsThe number of operations needed for computing the viscosity and the orientation functions is considerable, making this a very expensive method in computation time. Moreover, the building of these functions seems to require a size parameter (the largest structuring element) and an angle step to compute them. Let us illustrate an algorithm to build these functions, which does not require any parameter and is not expensive in computation time. First, the size parameter (largest structuring element) can be fixed to the size of the image diagonal, that is, the size of the largest structure that can be included in the image. Let and be the dimensions of the images in the horizontal and vertical axes, respectively. For example, for a VGA image, one has . Then the largest structuring element has a size of 400 since one uses symmetrical structuring elements. It is clear that few cases of images containing such structure characteristics can be found in real images. Next, to compute the line segments, the step in degrees must be fixed. In practice, a 5-deg step () is sufficient, but let us fix a smaller value (1 deg) to show the limiting case in computation time. Figures 19(a) to 19(c) show the line segments of size 200 for angle steps of 10, 5, and 1 deg, respectively. Next, 180 structuring elements of size L are computed and stocked in a structure data (list of lists). Since symmetrical structuring elements are used, only half of the straight lines are stocked and centered at the origin . In fact, only the Freeman codes are stocked. Let with be the lists containing the Freeman codes required to build half of the structuring elements, and let be a given list. The structuring element is built using the list with and its symmetrical data with . Fig. 19Algorithm to build and images and some geometrical examples. (a) to (c) Line segments size 200, with step angles of 10, 5, and 1 deg, respectively. (d) and (e) Directional erosions. (f) and (g) Rectangular structures with their orientation functions. (h) and (i) Original structure and its orientation function.  To illustrate the algorithm, let us consider the example in Fig. 19(d), where an erosion by a line segment is applied to the structure in gray color. The structuring element is obtained from the list (blue 0 and red 1) and (green 4 and yellow 5). Now, to calculate the erosion at point of an image marked by a white dot, one begins by computing the smallest value among the points , , and . Then, the erosion size 1 is given by the infimum (the intersection of sets) . Next, the erosion size 2 is obtained by using the following two points of the structuring element and and the erosion size 1 . Hence, . The procedure continues until the last pair of points of the structuring element is taken into account. In this example, a longer structuring element is required to remove the point of the image. Nevertheless, in the example in Fig. 19(e), after applying the third erosion, the point is removed; i.e., . Hence, the procedure is stopped. This process is applied to each point of the image. It is clear that the fact of using the infimum (AND operation in a computer) to compute the erosion and to stop the procedure when it is no longer required allows a faster calculation of the erosion of the image. Then, instead of calculating , one computes at each point of the image, the longest structuring element that cannot remove this point. Subsequently, the length of this structuring element is used to affect the function at point . Now, in order to know approximately the time complexity order for computing the viscosity and orientation functions, let us consider the limit case of a structure composed of a line segment at direction , 1 pixel thick and pixels in length, with . To compute the critical elements of the supremum of erosions at the center of the line segment, directional erosions are required at direction , plus (approximately) erosions for the other angles. The value , depends on the angle of the directional erosion. It is 1 for an angle perpendicular to and is a maximum for an angle close to , according to a given angle step . For example, for , one has more or less . For the other points of the line segment, the number of operations depend on the point position, and their values are between 1 and at direction and erosions for the other angles. Hence, the number of directional erosions required to test all points is . Let us illustrate an example. For a line segment of length and , 10,000 erosions are required at the direction , whereas for other angles (term ) between 100 and 500 erosions. Now, for a line segment of thickness 2 pixels, one has approximately . By increasing the thickness of the line segment, the determination of the time computation order becomes more complex. Then, for thin ( pixels) and long segments, a good value for the order is . Finally, let us show the computation time of the present approach. For instance, in the example of the pearlitic phase image of size , 5 s are required to compute the viscosity and orientation images using a 1-deg angle step, whereas when working with a 5-deg step in the interval [0,180], only 1 s is required. For the fingerprint image (), are required using a 1-deg angle step. The computer used for these experiments was a laptop with 1.59 GHz processor and 256 MB RAM. 5.6.Limits of the ApproachLet us take some geometrical examples to illustrate certain limitations of the approach proposed above using the OPF to extract the directional characteristics. The first example is the case of a rectangle of length and width shown in Figs. 19(f) and 19(g). The longest line segments that can be placed inside the rectangle are the diagonal lines of length , and their angle is given by . It is clear that the larger is, the smaller is, and the closer is to 0 deg (; or ). Consider an example where the rectangle has pixels. Then, for , ; for , ; and for , . Another source of error is the rectangle extremes since, at the limits, the angle of the longest line (in this example) has an angle value of 90 deg. Moreover, the points remaining in the line that cuts the rectangle along the horizontal at through its center can vary between and 90 deg. Figures 19(f) and 19(g) show two examples in color representation. This drawback can be avoided by applying the supremum of directional openings; however, the computation time to determine the longest lines included in the structure will increase considerably. To illustrate this, let us remember the traditional geometrical interpretations of the erosion and the opening. In the erosion case, one selects the center of the structuring element that is completely included in the structure, whereas in the opening case, all the points hitting the structuring element are chosen. This means that when an opening is applied, the points in the image are analyzed several times, and it is not possible to apply the algorithm proposed above. Now, let us analyze another geometrical example given by a shape formed by two concentric circles of different radii (annulus or ring of width ) as illustrated in Fig. 19(h). In the case of a disk, the maximum symmetrical distance lies in the middle point of the segment joining any two points of the circle (chord); the longest chord goes through the center of the disk (diameter of the disk). In the case of a ring, the longest line segment is the tangent to the inner circle, and the smallest line is zero, a point on the outer circle. Between these two extremes, different chords exist, all at the same angle. Then, when working with structures with a given curvature, the error in the direction is smaller than in the case of a straight line (or without error when the region takes a shape resembling a ring) as illustrated in Fig. 19(i). However, the maxima of the viscosity function will remain close to the contour (inner contour) of the structure. The limits of the approach shown in this section are attenuated when the image is composed of thin structures as those of the pearlitic phase micrograph or when the structures have curvatures as in fingerprint images. 6.Conclusion and Future WorksThis paper shows the possibilities of applying the connectivity class notion to segment images containing directional structures. Initially, the composition of connections generated by the openings and dilations was investigated. This composition of connectivities is used to describe a class of hierarchical data structures, which allows the classification of the various orientations at different scales. The approach enables the correct segmentation of images containing directional structures; however, its computation time is large. Subsequently, a second local approach was considered. This approach involves a local analysis using the concepts of viscosity and orientation functions proposed in this paper. The maxima of the viscosity function were used for computing the loci of maximal structuring elements, and the orientation function to obtain the angles of the line segments. These pairs of local parameters yield a good description of the image by means of line segments. Subsequently, an OPF of the image was computed by means of the catchment basins associated with the watershed transform weighted by the orientation function. This method allows the segmentation of the image based on a supervised approach using the clustering of the OPF histogram. The results based on the algorithms presented in this paper show the good performance of the approach. Future work will consist of searching for segmenting images in the gray-level case, and we will focus on a new lattice approach for morphological image segmentation recently proposed by Serra.48 AcknowledgmentsWe would like to thank the anonymous reviewers for their valuable comments and precisions. C. A. Paredes-Orta thanks the government agency CONACyT for the financial support. Also, the authors thank the material science specialists Julieta Torres-Gonzalez and Federico Castañeda for their help in the analysis of the pearlite phase segmented images. I. R. Terol-Villalobos would like to thank Diego Rodrigo and Darío T.G. for their great encouragement. This work was funded by the government agency CONACyT (133697), Mexico. ReferencesP. SoilleH. Talbot,

“Directional morphological filtering,”

IEEE Trans. Pattern Anal. Mach. Intell., 23

(11), 1313

–1329

(2001). http://dx.doi.org/10.1109/34.969120 ITPIDJ 0162-8828 Google Scholar

P. SoilleE. J. BreenR. Jones,

“Recursive implementation of erosions and dilations along discrete lines at arbitrary angles,”

IEEE Trans. Pattern Anal. Mach. Intell., 18

(5), 562

–567

(1996). http://dx.doi.org/10.1109/34.494646 ITPIDJ 0162-8828 Google Scholar

D. JeulinM. Kurdy,

“Directional mathematical morphology for oriented image restoration and segmentation,”

Acta Stereologica, 11

(11), 545

–550

(1992). ASTLDL 0351-580X Google Scholar

A. Tuzikovet al.,

“Extraction of grid patterns on stamped metal sheets using mathematical morphology,”

in Proc. of the Eleventh Int. Conf. on Pattern Recognition,

425

–428

(1992). Google Scholar

M. A. OliveiraN. J. Leite,

“A multiscale directional operator and morphological tools for reconnecting broken ridges in fingerprint images,”

Pattern Recognit., 41

(1), 367

–377

(2008). http://dx.doi.org/10.1016/j.patcog.2007.05.019 PTNRA8 0031-3203 Google Scholar

I. R. Terol-VillalobosL. A. Morales-HernándezG. Herrera-Ruiz,

“Directional structures detection based on morphological line-segment and orientation functions,”

Lec. Notes Comput. Sci., 4992 458

–469

(2008). http://dx.doi.org/10.1007/978-3-540-79126-3 LNCSD9 0302-9743 Google Scholar

L. A. Morales-HernándezI. R. Terol-VillalobosF. Manriquez-Guerrero,

“Two approaches for orientation segmentation based on directional morphology,”

in Proc. of the Eighth International Symp. of Mathematical Morphology and Its Applications to Image and Signal Processing,

325

–336

(2007). Google Scholar

C. H. Parket al.,

“Singular point detection by shape analysis of directional fields in fingerprints,”

Pattern Recognit., 39

(5), 839

–855

(2006). http://dx.doi.org/10.1016/j.patcog.2005.10.021 PTNRA8 0031-3203 Google Scholar

J. LiW. Y. YauH. Wang,

“Constrained nonlinear models of fingerprint orientations with prediction,”

Pattern Recognit., 39

(1), 102

–114

(2006). http://dx.doi.org/10.1016/j.patcog.2005.08.010 PTNRA8 0031-3203 Google Scholar

X. Chens,

“PDE-based regularization of orientation field for low-quality fingerprint image,”

in Proc. of the Eleventh IEEE Int. Conf. on Signal Processing,

1006

–1011

(2012). Google Scholar

B. M. Popovicet al.,

“Different methods for fingerprint image orientation,”

in Proc. of the 20th Telecommunications Forum,

662

–665

(2012). Google Scholar

S. BharkadM. Kokare,

“Fingerprint identification—ideas, influences, and trends of new age,”

Pattern Recognition, Machine Intelligence and Biometrics, 411

–446 Springer, Berlin, Heidelberg

(2011). Google Scholar

H. HasanS. Abdul-Kareem,

“Fingerprint image enhancement and recognition algorithms: a survey,”

Neural Comput. Appl., 23

(6), 1605

–1610

(2013). http://dx.doi.org/10.1007/s00521-012-1113-0 0941-0643 Google Scholar

J. K. LeeT. S. NewmanG. A. Gary,

“Oriented connectivity-based method for segmenting solar loops,”

Pattern Recognit., 39

(2), 246

–259

(2006). http://dx.doi.org/10.1016/j.patcog.2005.07.004 PTNRA8 0031-3203 Google Scholar

C. Bahlmann,

“Directional features in online handwriting recognition,”

Pattern Recognit., 39

(1), 115

–125

(2006). http://dx.doi.org/10.1016/j.patcog.2005.05.012 PTNRA8 0031-3203 Google Scholar

M. KassA. Witkin,

“Analyzing oriented pattern,”

Comput. Vis., Graph., Image Process., 37

(3), 362

–385

(1987). http://dx.doi.org/10.1016/0734-189X(87)90043-0 CVGPDB 0734-189X Google Scholar

K. SandbergM. Brega,

“Segmentation of thin structures in electron micrographs using orientation fields,”

J. Struct. Biol., 157

(2), 403

–415

(2007). http://dx.doi.org/10.1016/j.jsb.2006.09.007 JSBIEM 1047-8477 Google Scholar

A. M. BazenS. H. Gerez,

“Systematic methods for the computation of the directional fields and singular points of fingerprints,”

IEEE Trans. Pattern Anal. Mach. Intell., 24

(7), 905

–919

(2002). http://dx.doi.org/10.1109/TPAMI.2002.1017618 ITPIDJ 0162-8828 Google Scholar

P. T. H. Trucet al.,

“Vessel enhancement filter using directional filter bank,”

Comput. Vis. Image Underst., 113 101

–112

(2009). http://dx.doi.org/10.1016/j.cviu.2008.07.009 CVIUF4 1077-3142 Google Scholar

C. K. WangY. H. Tseng,

“DEM generation from airborne lidar data by an adaptive dual directional slope filter,”

in Proc. of the Int. Society for Photogrammetry and Remote Sensing VII Symp.,

628

–632

(2010). Google Scholar

L. Zhonget al.,

“Handling noise in single image deblurring using directional filters,”

in Proc. of the 26th IEEE Conf. on Computer Vision and Pattern Recognition,

612

–619

(2013). Google Scholar

R. Rijiet al.,

“Fuzzy based directional weighted median filter for impulse noise detection and reduction,”

Fuzzy Inf. Eng., 4

(4), 351

–369

(2012). http://dx.doi.org/10.1007/s12543-012-0120-2 1616-8658 Google Scholar

E. Parra-DenisM. BilodeauD. Jeulin,

“Multistep detection of oriented structure in complex textures,”

(2013). Google Scholar

S. KimJ. KoH. Chung,

“Steel grade classification based on Gabor filters and K-means clustering,”

in SERSC Int. Conf. on Circuits, Control, Communication, Electricity, Electronics, Energy, System, Signal and Simulation,

54

–57

(2013). Google Scholar

J. Serra, Image Analysis and Mathematical Morphology, Vol. II, Theoretical Advances, Academic Press, New York

(1988). Google Scholar

H. J. A. M. Heijmans, Morphological Image Operators, Academic Press, New York

(1994). Google Scholar

P. Soille, Morphological Image Analysis: Principles and Applications, 2nd ed.Springer-Verlag, Heidelberg, Berlin

(2003). Google Scholar

U. Braga-NetoJ. Goutsias,

“Connectivity on complete lattices: new results,”

Comput. Vis. Image Underst., 85

(1), 23

–53

(2002). http://dx.doi.org/10.1006/cviu.2002.0961 CVIUF4 1077-3142 Google Scholar

U. Braga-Neto,

“Multiscale connected operators,”

J. Math. Imaging Vis., 22

(2–3), 199

–216

(2005). http://dx.doi.org/10.1007/s10851-005-4890-6 JIMVEC 0924-9907 Google Scholar

H. Heijmans,

“Connected morphological operators for binary images,”

Comput. Vis. Image Underst., 73

(1), 99

–120

(1999). http://dx.doi.org/10.1006/cviu.1998.0703 CVIUF4 1077-3142 Google Scholar

Ch. Ronse,

“Set-theoretical algebraic approaches to connectivity in continuous or digital spaces,”

J. Math. Imaging Vis., 8

(1), 41

–58

(1998). http://dx.doi.org/10.1023/A:1008210216583 JIMVEC 0924-9907 Google Scholar

Ch. Ronse,

“Partial partitions, partial connections and connective segmentation,”

J. Math. Imaging Vis., 32

(2), 97

–125

(2008). http://dx.doi.org/10.1007/s10851-008-0090-5 JIMVEC 0924-9907 Google Scholar

C. RonseJ. Serra,

“Geodesy and connectivity in lattices,”

Fundadenta Informaticae, 46

(4), 349

–395

(2001). Google Scholar

J. Serra,

“Connectivity on complete lattices,”

J. Math. Imaging Vis., 9

(3), 231

–251

(1998). http://dx.doi.org/10.1023/A:1008324520475 JIMVEC 0924-9907 Google Scholar

J. Serra,

“Viscous lattices,”

J. Math. Imaging Vis., 22

(2–3), 254

–269

(2005). http://dx.doi.org/10.1007/s10851-005-4894-2 JIMVEC 0924-9907 Google Scholar

C. S. TzafestasP. Maragos,

“Shape connectivity: multiscale analysis and application to generalized granulometries,”

J. Math. Imaging Vis., 17

(2), 109

–129

(2002). http://dx.doi.org/10.1023/A:1020629402912 JIMVEC 0924-9907 Google Scholar

G. K. OuzounisM. H. F. Wilkinson,

“Mask-based second-generation connectivity and attribute filters,”

IEEE Trans. Pattern Anal. Mach. Intell., 29

(6), 990

–1004

(2007). http://dx.doi.org/10.1109/TPAMI.2007.1045 ITPIDJ 0162-8828 Google Scholar

M. H. F. Wilkinson,

“Attribute-space connectivity and connected filters,”

Image Vis. Comput., 25

(4), 426

–435

(2007). http://dx.doi.org/10.1016/j.imavis.2006.04.015 IVCODK 0262-8856 Google Scholar

I. Santillanet al.,

“Morphological connected filtering on viscous lattices,”

J. Math. Imaging Vis., 36

(3), 254

–269

(2010). http://dx.doi.org/10.1007/s10851-009-0184-8 JIMVEC 0924-9907 Google Scholar

J. Crespoet al.,

“A flat zone approach: a general low-level region merging segmentation method,”

Signal Process., 62

(1), 37

–60

(1997). http://dx.doi.org/10.1016/S0165-1684(97)00114-X SPRODR 0165-1684 Google Scholar

F. MeyerS. Beucher,

“Morphological segmentation,”

J. Vis. Commun. Image Represent., 1

(1), 21

–46

(1990). http://dx.doi.org/10.1016/1047-3203(90)90014-M JVCRE7 1047-3203 Google Scholar

Ph. SalembierJ. Serra,

“Morphological multiscale image segmentation,”

Proc. SPIE, 1818 620

–631

(1992). http://dx.doi.org/10.1117/12.131477 PSISDG 0277-786X Google Scholar

O. Lezoray,

“Supervised automatic histogram clustering and watershed segmentation. application to microscopic medical color image,”

Image Anal. Stereol., 22

(2), 113

–120

(2003). http://dx.doi.org/10.5566/ias.v22.p113-120 IASMCE 1580-3139 Google Scholar

J. Serra,

“Grain building ordering,”

Lec. Notes Comput. Sci., 6671 37

–48

(2011). http://dx.doi.org/10.1007/978-3-642-21569-8 LNCSD9 0302-9743 Google Scholar

D. Martinet al.,

“A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics,”

in Proc. of the Int. Conf. on Computer Vision,

416

–423

(2001). Google Scholar

R. UnnikrishnanC. PantofaruM. Hebert,

“Toward objective evaluation of image segmentation algorithms,”

IEEE Trans. Pattern Anal. Mach. Intell., 29

(6), 929

–944

(2007). http://dx.doi.org/10.1109/TPAMI.2007.1046 ITPIDJ 0162-8828 Google Scholar

J. Pont-TusetF. Marques,

“Supervised assessment of segmentation hierarchies,”

Lec. Notes Comput. Sci., 7575 814

–827

(2012). http://dx.doi.org/10.1007/978-3-642-33765-9 LNCSD9 0302-9743 Google Scholar

J. Serra,

“A lattice approach to image segmentation,”

J. Math. Imaging Vis., 24

(1), 83

–130

(2006). http://dx.doi.org/10.1007/s10851-005-3616-0 JIMVEC 0924-9907 Google Scholar

BiographyCarlos A. Paredes-Orta received his BSc degree in computer engineering from the Instituto Tecnológico de Querétaro and his MSc degree in mechatronics from CIDESI, Querétaro, México. He is currently a PhD student at the CIDESI. His research interests include morphological image processing and computer vision. Jorge D. Mendiola-Santibáñez received his BSc degree in electronic engineering from the Benemérita Universidad Autónoma de Puebla, México, and his MSc degree in electronics from INAOE, México. He received his PhD degree from the Universidad Autónoma de Querétaro, México. He is currently a professor/researcher at the Universidad Autónoma de Querétaro. His research interests include morphological image processing and computer vision. Ana M. Herrera-Navarro received her BSc degree in computer engineering and her MSc degree in computer science from Facultad de Informática de la Universidad Autónoma de Querétaro, México. She is currently a professor/researcher at the Universidad Autónoma de Querétaro. Her research interests include morphological image processing and computer vision. Luis A. Morales-Hernández received his BS degree in electromechanics engineering and his MSc degree instrumentation and control automatic from the Facultad de Ingeniería de la Universidad Autónoma de Querétaro, México. He received his PhD degree from the Universidad Autónoma de Querétaro. He is a professor/researcher at the Universidad Autónoma de Querétaro. His research interests include morphological image processing, computer vision, and measured microstructure in metals. Iván R. Terol-Villalobos received his BSc degree from Instituto Politécnico Nacional (I.P.N.), México and his MSc degree in electrical engineering from Centro de Investigación y Estudios Avanzados del I.P.N., México. He received his PhD degree from the Centre de Morphologie Mathématique, Ecole des Mines de Paris, France. He is currently a researcher at Centro de Investigación y Desarrollo Tecnológico en Electroquímica, Querétaro, México. His main current research interests include morphological image processing, models, and computer vision. |