
1. Introduction

From rapid advances in autonomous vehicle technology to more ubiquitous driver assistance features in modern automobiles, the vehicle of the future is increasingly relying on advances in computer vision for greater safety and convenience. At the same time, providers of transportation infrastructure and services are expanding their reliance on computer vision to improve safety and efficiency in transportation. Computer vision is thus helping to solve critical problems at both ends of the transportation spectrum: at the consumer level as well as at the level of the infrastructure provider.

The ever-increasing demand on a limited transportation infrastructure leads to traffic congestion, freight transportation delays, and accidents, with vast negative economic consequences. Advances in computer vision are playing a crucial role in solving these problems in ever more effective ways: in traffic monitoring and control, in incident detection and management, in road use charging, in road condition monitoring, and in many more. Attractiveness of computer vision for these uses primarily stems from the cost-efficiency of these technologies as well as the wide range of applications that computer vision can support. Advanced driver assistance systems (ADAS) are being deployed in ever-increasing numbers, but as the in-vehicle compute power increases and as vehicle-to-infrastructure communication becomes more robust, these systems will begin to change their role from one of providing assistance to one of facilitating decision-making as it relates to safety.

This article provides a comprehensive survey of methods and systems that use computer vision technology to address transportation problems in three key problem domains: safety, efficiency, and security and law enforcement. We have chosen this taxonomy and these key areas based on our own survey of the literature and from conversations with transportation agencies and authorities.
In each of the problem domains, the main approaches are first introduced at a cursory level, and a few representative techniques are then presented in greater detail along with selected results demonstrating their performance. Where possible, the technology descriptions are interspersed with the authors’ own perspectives on challenges, opportunities, and trends. Table 1 presents the taxonomy by which the survey is organized.

Table 1. Taxonomy of problem domains, applications, and associated references presented in this survey.

The remainder of this paper is organized as follows. In Sec. 2, computer vision technologies applied to safety applications are discussed. Technologies relevant to efficiency improvement in transportation are presented in Sec. 3. Section 4 reviews advances in computer vision technologies in the areas of security and law enforcement applications. Future trends and challenges are summarized in Sec. 5.

2. Safety and Driver Assistance

Intelligent transportation systems (ITS) are being developed in many countries with the aim of improving safety and road traffic efficiency. A key focus of ITS is the technology within the so-called ADAS. Most ADAS utilize some form of sensing the surrounding environment of a vehicle. Computer vision plays a crucial role in sensing the environment, extracting relevant analytics, and acting on the extracted information. In this section, we focus on several of the many within-vehicle applications of video technology and computer vision that offer the potential for significant improvements in roadway safety and driver assistance.
2.1. Lane Departure Warning and Lane Change Assistant

Researchers from the Insurance Institute for Highway Safety (IIHS) estimated that if all vehicles had lane departure warning systems, the potential exists to prevent or mitigate as many as 37,000 nonfatal injury crashes, 7,529 fatal crashes, and about 179,000 crashes a year overall.1 It has also been reported that a significant cause of such mishaps is driver distraction, inattention, or drowsiness.2 Currently, the most common approach for preventing unwanted lane departure is to employ roadside rumble strips, which vibrate as a vehicle veers in certain undesirable directions. However, this roadway alert system is present on only a very limited set of highways.

One approach to preventing this type of accident is the use of lane departure warning (LDW) systems.3 LDW systems typically track roadway markings using a video camera mounted near the rear-view mirror or on the dashboard of a vehicle so that the area in front of the vehicle may be viewed. A warning signal is given to the driver if a vehicle unintentionally approaches a lane marking (i.e., without activating a turn signal). Typical warning signals use sound, haptics (such as steering wheel or seat vibration), or lights and display systems.

The prevalence of LDW systems is expected to rapidly increase. Various tax incentives are proposed in the United States for vehicles with LDW systems, and legislation in Europe mandates that new truck models will be fitted with LDW systems from November 2013, and all new vehicles from November 2015. The forums creating standards for LDW systems are the International Organization for Standardization and the Federal Motor Carrier Safety Administration. There are two key areas for standardization to consider: a warning threshold, which determines when a warning is issued, and a speed threshold and road curvature, which are both used to classify the LDW systems.
A summary of the standards is given in Tables 2 and 3 (Ref. 4).

Table 2. Summary of standards for lane departure warning.

Table 3. Location of earliest warning threshold.

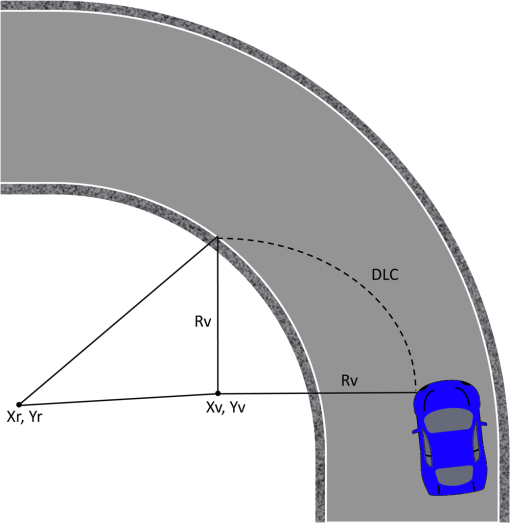

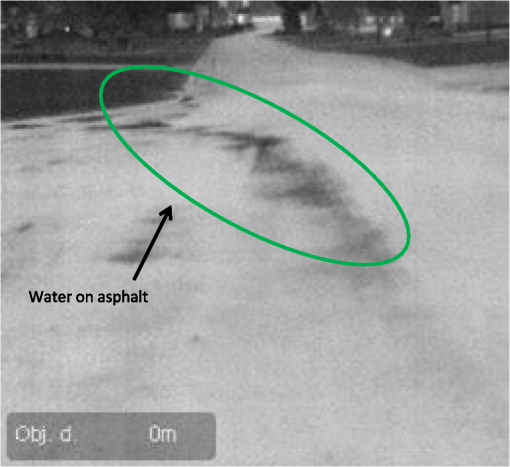

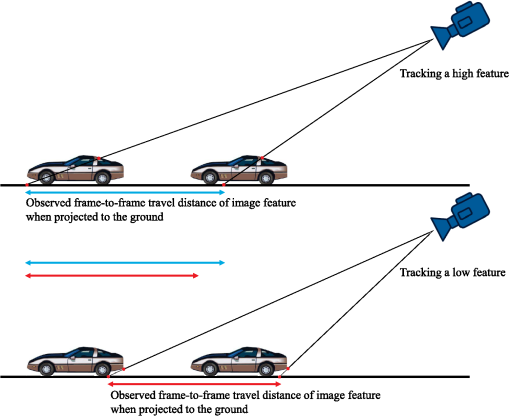

LDW cameras are typically positioned to view a distance of in front of the vehicle with roughly a 50-deg viewing angle. Roadway marking detection algorithms face the daunting task of operating in real time and under multifarious weather conditions to detect and decipher within this limited field of view a wide assortment of markings including broken lines, unbroken lines, double lines, writing in lane (e.g., car pool, arrows), only a center line, only an edge line, Bott’s dots, hatched line, highway entrance and exit markings, white lines, and lines of varying hues of yellow.5 Environmental conditions that further complicate the recognition task include rain and wet asphalt, nighttime lighting conditions, sun on the horizon, shadows, snow, fog, light-colored roadways (e.g., cement), tar seams, unmarked roads, road damage or regions of repair, legacy lines, and nearby vehicles. An LDW system should give as much warning time as possible, while triggering few, if any, false alarms. Some implementations provide the warning when the system observes a lane crossing, which requires very little frame-to-frame processing and storage. Other implementations provide a prediction that a vehicle will cross a lane boundary within a time threshold, which incorporates the vehicle speed, trajectory, kinematic data from nonimaging sensors, and a model of the lane boundary to determine a time-to-lane crossing (TLC).6 Many vision-based lane detection methods use an edge detection algorithm in conjunction with other tools such as morphological filtering and frame-to-frame correlation to create an edge image of the painted lines, and a Hough transform applied to the edge image to define potential lane boundaries. The potential lane boundaries can be fitted to a geometric model for a roadway lane to eliminate the effects of spurious visual signals. 
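The Hough voting step mentioned above can be illustrated with a minimal sketch. It is not the implementation of any of the cited systems: the function name `hough_accumulator`, the 1-deg angular resolution, and the synthetic 40 x 40 edge image with a single vertical marking are all assumptions made for this example. Each edge pixel votes for every line (rho, theta) passing through it, with rho = x cos(theta) + y sin(theta), and the accumulator peak is taken as a candidate lane boundary.

```python
import numpy as np


def hough_accumulator(edges, n_theta=180):
    """Accumulate Hough votes for a binary edge image.

    Returns the vote array (rows: rho bins, columns: theta bins),
    the theta values in radians, and the offset added to rho so
    that negative distances map to valid row indices.
    """
    height, width = edges.shape
    thetas = np.deg2rad(np.arange(n_theta) * (180.0 / n_theta))  # [0, pi)
    offset = int(np.ceil(np.hypot(height, width)))  # upper bound on |rho|
    votes = np.zeros((2 * offset + 1, n_theta), dtype=np.int64)

    ys, xs = np.nonzero(edges)  # row/column coordinates of edge pixels
    for j, theta in enumerate(thetas):
        # Signed distance of each candidate line from the image origin,
        # rounded to the nearest integer rho bin.
        rho = np.rint(xs * np.cos(theta) + ys * np.sin(theta)).astype(int)
        votes[:, j] = np.bincount(rho + offset, minlength=2 * offset + 1)
    return votes, thetas, offset


# Synthetic edge image (hypothetical data): one vertical marking at x = 12.
edges = np.zeros((40, 40), dtype=np.uint8)
edges[:, 12] = 1

votes, thetas, offset = hough_accumulator(edges)
rho_idx, theta_idx = np.unravel_index(np.argmax(votes), votes.shape)
rho_peak = int(rho_idx) - offset
theta_peak_deg = float(np.rad2deg(thetas[theta_idx]))
print(rho_peak, theta_peak_deg)
```

For this synthetic image the peak lies at rho = 12 pixels and theta = 0 deg, i.e., the vertical line x = 12. A practical system would likely retain several peaks and then check them against a roadway lane model to reject spurious lines.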
An example of a simple road model is a straight line proposed in 1989.7 As research in this area has progressed, more sophisticated techniques such as snakes and splines have been proposed for modeling lane boundary shapes.8,9 Many algorithms have assumed that pavement edges and lane markings can be approximated by circular arcs on a flat ground plane,10–13 and several investigators have found it beneficial to use three-dimensional (3-D) models of lane boundaries.14,15 We now describe in more detail the algorithm proposed in Ref. 6 as one of the more sophisticated and recent techniques addressing this problem using a combination of computer vision, data fusion, and vehicle models. Their system comprises two modules: a data acquisition and elaboration module that analyzes the video frames to estimate TLC and a warning generation module that generates an alarm based on the TLC estimate. The primary focus of the paper is on the first module, wherein the lane geometry and vehicle position relative to the lane are estimated from camera frames. Referring to Fig. 1, the goal is to track and estimate the distance to lane crossing from estimates of lane geometry parameters (Xr, Yr) and vehicle trajectory parameters (Xv, Yv). Such a task is of course crucial to detect a lane departure because it provides unique information not derivable by other on-board sensors. This module includes two main phases: lane detection and lane tracking. Lane detection relies solely on video data and comprises five steps: (1) frame acquisition, (2) inverse perspective mapping (IPM), (3) edge detection, (4) line identification, and (5) line fitting. The second step, IPM, is a geometrical transformation technique that remaps each pixel of the two-dimensional (2-D) perspective view of a 3-D object to a new planar image corresponding to a bird’s eye view (see Fig. 2). In the third step, edge points are defined as the zero crossing of the Laplacian of the IPM smoothed image. 
Among all edge pixels, only stripes need to be detected, so an additional phase using steerable filters is employed in the fourth step. To further define the edges, a threshold is applied to binarize high-contrast transitions. In the fifth and final step, a parabolic model is used to fit curved lines to the edges, where the fit is performed using the random sample consensus procedure. In the second phase, lane tracking, Kalman filtering is used to update the coefficients of the parabolic model. Nonimaging kinematic data are acquired from a steering angle sensor and an angular speed sensor mounted on a rear wheel. The kinematics are then combined into a vehicle model by using a data fusion algorithm. Given the nonlinear nature of the problem, Cario et al.6 employ both the extended and unscented versions of the Kalman filter.

Roughly 5% of accidents in the United States occur when making intended lane changes. This type of intended lane departure is being addressed with an ADAS technology referred to as lane change assistant (LCA), which monitors adjacent lanes for the presence of vehicles; an alert is issued to the driver if a lane change is initiated and the neighboring lane is occupied.3 Many of the technical methods of LDW are employed in LCA, with the additional function of monitoring lateral views for the presence of vehicles.

Computer-vision-based lane detection has been an active research topic for both LDW and LCA for at least the past decade. However, the numerous challenging problems encountered in addressing the totality of this important application, coupled with an aging population that would benefit from assistance, indicate that research and development will be needed in this field for some time.

2.2. Pedestrian Detection

More than 39,000 pedestrians are killed and 430,000 are injured worldwide each year.16 A key goal of ADAS is the detection and avoidance of pedestrians.
Pedestrian detection from the perspective of video surveillance with fixed cameras has been extensively studied, but many new problems arise when a camera is mounted on a common moving vehicle. The detection must comprehend a wide range of lighting conditions, a continuously varying background, changes in pose, occlusion, and variation in scale due to the changing distance. Many approaches have been proposed to address the detection problem. As the technology evolves, the various methods are being considered in concert to arrive at more robust solutions. Shape-, texture-, and template-based methods17–20 individually have difficulties with different appearances of pedestrians due to causes such as unknown distance, varying pose, clothes, and illumination changes. Motion detection is well suited for a pedestrian laterally crossing the visual field. Motion detection via a method such as optical flow21 can indicate a region of interest (ROI) that can be further analyzed for size, shape, and gait. The periodicity of the human gait is a strong indicator of a pedestrian and can be analyzed by clustering regions of pixels within the ROI as an image feature and tracking corresponding clusters frame to frame.22 Motion detection methods require multiple frames to be acquired and analyzed, do not comprehend stationary pedestrians, and can be confounded by changing background, changing lighting conditions, and longitudinal motion. Stereo vision addresses the problem of range and size ambiguity that occurs with monocular vision. Disparity maps are derived from the two views. The disparity provides information on distance that when coupled with detected features, such as size, edges, and bounding box dimensions, can be used to identify pedestrians.23 Recently, the more general problem of object detection and recognition has become a focus of attention in the computer vision community due to its widespread applications. 
Much progress has been made in this area in the past decade partly due to the introduction of various hand-designed features, such as scale invariant feature transform (SIFT),24 histogram of oriented gradients (HOG),25 local binary patterns (LBP),26 and maximally stable extremal regions (MSER),27 coupled with advanced machine learning techniques. Many state-of-the-art object recognition methods follow a process of first scanning the image at multiple scales with an object detection module and then applying a technique such as nonmaximal suppression to recognize objects of interest in the image. In the context of pedestrian detection, the work of Dalal and Triggs25 has made a significant contribution by introduction of the HOG feature. This was improved upon by Felzenszwalb et al.,28 wherein the authors describe a framework including detection of object parts and a statistically learned deformable model that relates these parts. The result was a significant improvement in both the accuracy of pedestrian detection and the extension to more general object recognition. Although computer vision approaches have made notable progress in pedestrian detection and recognition, there is still room for improvement in this arena, especially for critical applications that require very accurate responses in real time. Recent work29,30 suggests two major future research directions for pedestrian detection, namely incorporating context information for accuracy improvement and improving computational efficiency. By properly incorporating context information into the object recognition algorithm, the size of the search space and the false positive rate can be greatly reduced. In Ref. 29, local and neighborhood windows are first combined to construct a multiscale image context descriptor. This descriptor represents the contextual cues in spatial, scaling, and color spaces. 
An iterative classification algorithm called contextual boost is then applied to incorporate the designed contextual cues from the neighborhood into pedestrian detection. Other methods that incorporate scene context into pedestrian detection are also discussed in Ref. 29. Work in the area of improving computational efficiency includes Ref. 30, which presents a method that can perform pedestrian detection at 135 frames per second without degradation in detection accuracy. Two aspects are explored here. The first is in handling different scales needed for object recognition. Typical methods train a single (scale) object model at training time and apply it to resampled versions of the test image at all scales of interest at testing time. The computational burden heavily resides in the testing time and includes both the image resampling process and the recalculation of features at each scale. Instead, in Ref. 30, several object models (a fraction of all scales of interest) are generated during training. At test time, no image resampling is required, and only a part of the feature needs to be recalculated at each scale. In particular, the gradient of each pixel is the same since there is no image resampling. Only the histograms need to be recalculated at each scale. This shifts some computational burden from the testing to the training stage. Since only a fraction of all scales of interest is trained, the scales that match one of the trained models will be processed directly. The scales that do not match one of the trained models will be processed efficiently through interpolation between scales. As a result, it is possible to make a trade-off between the amount of computational burden allocated to the testing and training stages and the detection accuracy degradation due to interpolation. The other aspect explored in Ref. 
30 is akin to incorporating scene context into pedestrian detection and uses an efficient stereo-vision method (stixel world model31) to detect the ground plane and limit the search space for pedestrians to only regions near the ground plane.

An evaluation of the state of the art in monocular pedestrian detection was performed in Ref. 32. The key finding is that, despite significant progress in this area, performance still has significant room for improvement. The studies in Ref. 32 indicate that the detection rate was low for partially occluded pedestrians and at low image resolutions. Reference 32 provides several key elements that will aid in advancing this technology: a comprehensive, richly annotated, publicly available data set, together with improved metrics and evaluation methods.

Many recent systems use video sensing beyond the visible spectrum. Infrared (IR) sensors are finding civilian applications due to the decreasing cost of uncooled sensors. Far-IR (FIR) methods are based on the assumption that human body temperature is higher than that of the environment, resulting in pedestrians appearing sufficiently different in thermal brightness compared to their background in IR imagery, thereby aiding the step of pedestrian localization (see Fig. 3). Other objects that actively radiate heat (cars, trucks, etc.) can have a similar IR signature; however, shape-, template-, and motion-based methods previously developed for visible spectrum imagery can be incorporated so as to aid in distinguishing people from warm objects. Another factor to consider is that IR imagery is less sensitive to lighting changes and to shadows than visible imagery.33

The topic of pedestrian detection is not strictly limited to walking humans. Reference 34 estimated that 1.5 million deer–vehicle collisions occur annually in the United States at a cost of nearly $1 billion in damages and resulting in over 200 human fatalities.
The detection of animals such as deer, moose,35 and camels36 is an area of active research, given the potential to prevent many serious accidents. Specialized bicycle detection methods37 are also a component of pedestrian detection.

2.3. Driver Monitoring

According to the U.S. Department of Transportation’s National Highway Traffic Safety Administration (NHTSA), over 3,000 fatalities from automobile accidents are caused by distraction, and 100,000 crashes, resulting in 40,000 injuries and 1,500 deaths, are caused every year by driver drowsiness.38 Government and private sectors have begun to invest significant effort to reduce such incidents. One example is the Driver Distraction Program Plan published by NHTSA in 2010, which serves as a guiding framework to eliminate accidents related to distraction.39

Computer vision and video processing technology can be leveraged to monitor driver behavior and attention and to alert the driver to the presence of unsafe conditions. Video capture is typically accomplished via cameras mounted on or built into the vehicle. The use of mobile (e.g., smartphone) cameras as a portable monitoring system has also garnered recent attention. Approaches in the literature fall into three broad categories: (1) analysis of road conditions via a road-facing video camera, (2) analysis of the driver’s face from a driver-facing video camera, and (3) joint analysis and fusion of driver-facing and road-facing views. The previous sections have described prime examples in the first category of road-facing video analytics, namely LDW and detection of objects and pedestrians on the road. In this section, we complete the discussion by elaborating on driver-facing analytics and systems employing joint observations.

Analysis of driver-facing video footage has engendered two threads of exploration, the first being drowsiness detection and the second being detection of driver attention by estimating the direction of the driver’s gaze.
Drowsiness detection relies upon the fundamental ability to locate the driver’s eyes in a video frame and to determine the eye state, i.e., open versus closed. Eye localization is a form of object detection and conceptually follows the approaches of Sec. 2.2 but is tailored to detect the characteristics of human eyes. A common approach for determining eye state is to extract relevant features and train a binary classifier on closed-eye and open-eye samples. In Ref. 40, an active shape model helps localize the eyes, and speeded up robust features (SURF) features are used to train a binary support vector machine (SVM) classifier. A classification accuracy of 92% is reported on a data set of 1355 open-eye and 425 closed-eye samples gathered by the authors. In Ref. 40, a near-infrared (NIR) camera is used to capture driver-facing videos, thus enabling drowsiness detection under low light levels (e.g., at night) and in situations where the driver is wearing sunglasses. In that work, eye localization is followed by the extraction of four shape-based features: compactness, eccentricity, Hu’s seventh moment, and the ratio of the number of white pixels in the top hat transform of the eye region to that in the bottom hat transform. In addition, two texture-based features are calculated, namely histogram energy and contrast on the gray level co-occurrence matrix. A binary SVM classifier with a Gaussian radial basis function kernel is trained with this seven-dimensional feature representation. The authors report between 83 and 95% classification accuracy across seven video sets. Once eye state is determined, drowsiness indicators are computed such as percentage of eye closure over time40,41 or blink rate, which can be correlated with micro-sleep episodes.40 The second type of analysis performed on driver-facing video is monitoring driver attention by estimating eye gaze direction. Reference 42 addresses the problem by performing head pose estimation. 
A windshield-mounted camera sensitive to both visible and NIR light captures video footage of the driver. Head pose estimation is accomplished in three stages. First, Adaboost cascade detectors trained for frontal, left-, and right-profile images are used to localize the head and face region. Next, a localized gradient orientation (LGO) histogram is calculated as a facial feature descriptor, which is robust to scale, geometry, and lighting. Finally, support vector regression is used to learn a mapping from LGO features to two pose dimensions: pitch and yaw. Ground truth labels for training samples are gathered via an elaborate experimental setup with optical sensors attached to the driver’s head. Authors report mean absolute errors between 6 and 9 deg in pitch and yaw across different experimental conditions and demonstrate that these results are state-of-the-art. Note that head pose provides only an approximate indicator of driver attention and that a more accurate estimate needs to also consider eye gaze direction. Reference 43 reports an excellent general survey of vision-based eye gaze estimation techniques, grouping the approaches into three categories of feature-based (by far the most popular), appearance-based, and natural light methods. The application of gaze tracking to the specific problem of driver monitoring is indeed a fertile area for research. The ultimate goal in driver monitoring is to determine if the driver is paying attention to relevant objects and incidents on the road. Thus, while many research efforts to date have independently analyzed road-facing versus driver-facing video, we believe the next major advancement in driver monitoring is to be achieved via joint analysis and fusion of interior and exterior observations. A recent example of an effort in this vein is the CARSAFE mobile application.40 The authors propose dual video capture from the driver-facing (or front) and road-facing (or rear) cameras of a smartphone. 
Since current hardware limitations do not permit simultaneous capture on smartphones, the authors propose a system that automatically switches between front and rear cameras based on detection of various events. For example, detection of lane departure from the rear camera will prevent switching to the front camera. Conversely, if the system detects driver drowsiness from the front camera, it will force this camera to be the active sensor. The authors report overall precision and recall rates of 0.83 and 0.75, respectively, for detecting dangerous driving conditions.

There have been efforts to employ nonvision sensing modalities for driver monitoring. Reference 44 proposes using the inertial sensors in a smartphone, namely the accelerometer, gyroscope, and magnetometer, to obtain position, speed, acceleration, and deflection angle and relate these to driver behavior. Researchers have also explored biosensors that measure photoplethysmographic,45 electrocardiographic,46 and electroencephalographic47 data to predict drowsiness. When compared to vision sensors, these modalities have the benefit of reduced data bandwidth and processing requirements, and are arguably more direct measurements of a driver’s physiological state. However, they are expensive and require contact with the driver, which can be an inconvenience.

In the future, we can expect to see research efforts that intelligently integrate input from multiple heterogeneous vision and nonvision sensors, global positioning system (GPS), and telematics to continuously monitor the driver and alert the driver to dangerous conditions. Vehicle-to-vehicle and vehicle-to-infrastructure communication technologies will also play an important role in monitoring, predicting, and alerting drivers to unsafe situations.

2.4. Sensing for Other Adaptive and Warning Systems

Computer vision is contributing to a number of other adaptive vehicle driving systems and warning systems:

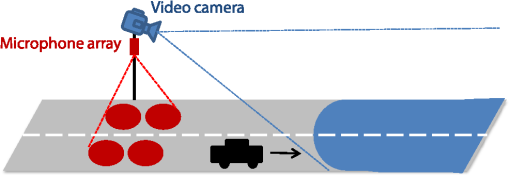

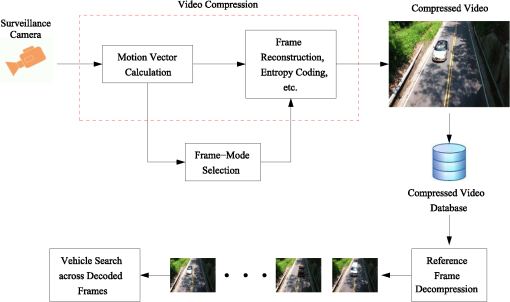

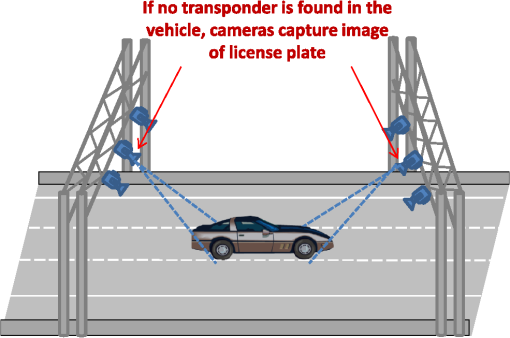

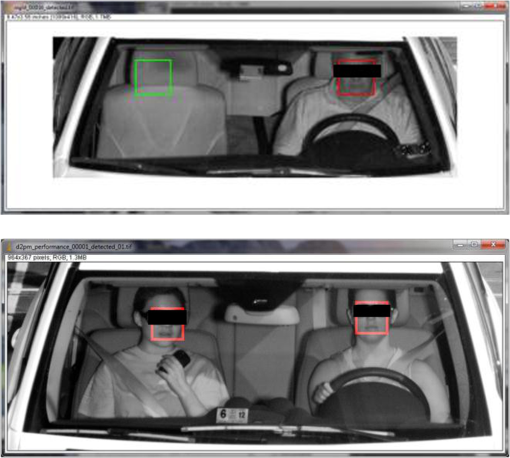

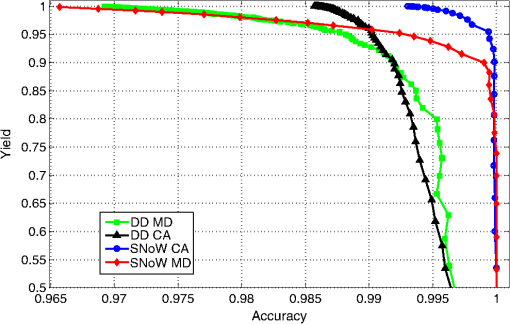

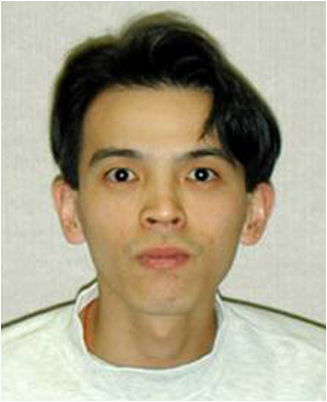

Other applications include blind angle monitoring, parking assistance, rear-view cameras, weather detection, autobending headlights, tunnel detection, and collision mitigation systems.

A level of sensing and automation beyond the driver assistance described thus far is embodied in the systems that enable autonomous vehicles, such as the much publicized Google driverless car. To date, Google’s fleet of autonomous vehicles has logged 450,000 driverless road miles. A key technology used in Google’s implementation is a roof-mounted Velodyne 64-beam laser, which creates a 3-D map of everything in the immediate area of feet. The 3-D image is combined with high-resolution maps that have been programmed into the vehicle’s control system. The laser system can differentiate between other cars, pedestrians, cyclists, and small and large stationary objects. Four radars (one each for front, back, left, and right) sense any fast-moving objects from farther out than the laser can detect and are used to give the car far-sighted vision for handling high speeds on freeways. A front-mounted camera handles traffic controls and observes road signs and stop lights for information that a human driver typically uses. Other sensors include a GPS, an inertial measurement unit, and a wheel encoder. While autonomous vehicle technology poses many challenges to current roadway legislation, it does offer great potential to mobilize citizens with impairments and could make driving safer due to comprehensive sensing and rapid decision making. For further details, the reader is referred to the recent IEEE Spectrum Online article.78

3. Efficiency

Data derived from traffic volume studies can help local governments estimate road usage, volume trends, critical flow time periods, optimal maintenance schedules, as well as optimal traffic enforcement time periods. Real-time traffic flow data can also enable efficient incident management, which consists of incident detection, verification, and response.
3.1. Traffic Flow

Traditional approaches to automated vehicle counting and traffic flow estimation include roadway sensors such as pressure hoses, piezoelectric sensors, and induction coils. These methods are typically inaccurate as well as difficult and sometimes expensive to deploy and maintain, as they have to be physically laid out on the target road or highway. Less intrusive roadway sensor systems such as sonar, microwave, and laser-based systems are sometimes employed, but they are expensive and highly sensitive to environmental conditions. Additionally, all roadway sensors have difficulty in detecting slow and stationary vehicles. However, the main downside of roadway sensors is that they provide limited information, namely vehicle count or traffic speed and/or volume alone. The application of computer vision techniques to the analysis of video sequences to assess traffic conditions, on the other hand, can be provided as a value-added option to already deployed traffic or surveillance camera network systems devoted to tolling and law enforcement (speed, red light, stop sign, etc.).79

Application of real-time processing of images and videos to perform road traffic data collection dates back to the early and mid-1970s at the University of Tokyo,80,81 where the authors developed a system to measure traffic flow and vehicle speed and length from real-time processing of videos. In England in the 1980s,82–88 members of the Traffic Research using Image Processing group developed a pipeline-based system for off-line measurement of vehicle count and speed. Belgian researchers89 developed the camera and computer-aided traffic sensor system, which estimated average traffic speed, vehicle length, and intervehicle gaps and performed vehicle classification.
The Advanced Computing Research Centre at the University of Bristol devised a system capable of multilane vehicle counting, speed measurement, and tracking in complex traffic scenarios.90–93 Researchers at the Swedish Royal Institute developed a system that performed vehicle count and approximate speed estimation on roads with up to three lanes.94 A system developed at the Iran University of Science and Technology95 enabled automatic estimation of traffic queue parameters in real time. The capabilities of the system were later extended to enable real-time calculation of traffic parameters96 and traffic monitoring at intersections.97 A research group at University of California, Berkeley, produced a system capable of detecting and tracking multiple vehicles with robustness to occlusion while, at the same time, outputting vehicle shape parameters that can be used in vehicle classification.98–100 The University of Minnesota developed Autoscope,101,102 a system capable of measuring real-time traffic parameters. The outputs of the traffic analysis modules are input to a computer, which produces a virtual view of the road conditions. Autoscope also has a traffic controller module capable of manipulating traffic lights and managing variable message signs. Reference 103 proposed a hybrid method based on background subtraction and edge detection for vehicle detection and shadow rejection, based on which vehicle counting and classification, as well as speed estimation in multilane highways, is achieved. The MODEST European consortium introduced Monitorix,104 a video-based traffic surveillance multiagent system where agents are grouped in tiers according to the function they perform. The authors of Ref. 105 introduced a model-based approach for detecting vehicles in images of complex road scenarios. 
They attempt to match image features with deformable geometric models of vehicles in order to extract vehicle position, pose, and dimensions from which they can extract vehicle speed, count, and junction entry/exit statistics. In order to minimize the number of cameras used to monitor traffic within a city, Ref. 106 presented a novel strategy for vehicle reidentification, which matches vehicles leaving one monitored region with those entering another one based on color, appearance, and spatial dimensions of the vehicles. Reference 107 presented a prototype of a smart camera with embedded DSP implementations for traffic surveillance. An algorithm that calculated queue length and flow across intersections via a back-propagation neural network was proposed in Ref. 108. Reference 109 proposed a video analysis method for vehicle counting that relies on an adaptive bounding box size to detect and track vehicles according to their estimated distance from the camera, given the geometrical setup of the camera. The authors of Ref. 110 proposed a vehicle counting method based on blob analysis of traffic surveillance video. A three-step approach consisting of moving object segmentation, blob analysis, and tracking is described. Reference 111 proposed a video-based vehicle counting method based on invariant moments and shadow-aware foreground masks. Background estimation for foreground segmentation is performed with a mixture of Gaussian models, as well as with an improved version of the group-based histogram. Shadow-aware foreground detection is achieved by performing background subtraction with shadow removal in the hue, saturation, and value color space. Vehicle classification is performed by computing moment invariants of the foreground mask; moment invariants are properties of connected components in binary images that are invariant to translation, rotation, and scaling, and can be used for shape classification and coarse object recognition. More recently, Ref. 
112 proposed a video-based vehicle counting scheme that operates in the compressed domain by analyzing the magnitude, stability, and coherence of clusters of motion vectors associated with vehicles passing by a virtual sensor. Given that video compression is usually performed in real time on embedded camera hardware, and since building the vehicle counting capabilities into the compression step only adds a small amount of computation, the proposed approach is conducive to real-time performance. The authors report achieving a false positive rate of 3.4% and a false negative rate of 2.3% with the proposed method applied to a variety of videos shot under different conditions, frame rates, and resolutions.

Traffic flow estimation was one of the problems first addressed by automated video analytics, and, as such, it garnered significant attention in the early years of the technology. Recently, however, the scholarly literature on this topic has diminished. A possible explanation is that the field has evolved from a phase of basic research to one of commercialization in practical applications, such as congestion management and prediction, and that most of the attention is now being devoted toward enabling real-time implementations that achieve levels of performance matching or exceeding industry requirements.

3.2. Incident Management

An incident is defined as “an event that causes blockage of traffic lanes or any kind of restriction of the free movement of traffic.”113 Examples of incidents include stalled vehicles, accidents, road debris, and chemical spills. Reports estimate that in 2005, traffic incidents incurred a cost of 6.9 million vehicle-hours and 7.3 million gallons of fuel.101 Studies show that accidents and disabled cars account for 20% of all causes of congestion on metropolitan expressways.114 Effective incident management, from detection through response, is therefore required. Incidents are classified into two categories: primary and secondary. 
Secondary incidents are those caused by preceding or primary incidents. It is estimated that anywhere between 20 and 50% of all freeway accidents are secondary,115 which highlights the importance of early discovery and clearing of primary, abnormal incidents. Research on incident detection and incident prediction dates back to the early 1990s when a system that detected extraneous objects obstructing tunnels and tight curves in roads was introduced.116 Two years later, a method based on image processing and fuzzy techniques that attempted to predict an incident before its occurrence was proposed.117 Guidelines for incident detection were presented in the framework of the DRIVE V2022 Euro-Triangle project.118 Reference 119 proposed breaking down incident detection into micro- and macroscopic detection; the former relates to incidents that occur within the field of view of the camera, while the latter is inferred from aggregated traffic flow parameters. A novel architecture for developing distributed video networks for incident detection and management was introduced in Ref. 120. A camera-based incident detection system to automatically detect slow-moving or stopped traffic on traffic lanes and shoulders was described in Ref. 121. More recently, a video-based incident detection system was deployed and tested on cameras monitoring the San Mateo Bridge in the San Francisco Bay Area.122 The system was effective in detecting incidents on both traffic lanes and shoulders, regardless of traffic and weather conditions and time of day. Recognizing that the accuracy of video-based automatic incident detection is heavily affected by environmental factors such as shadows, snow, rain, and glare, Ref. 123 reviewed existing compensation techniques and highlighted potential research directions to address the lack of robustness of existing systems to such factors. 
Reference 124 presented a framework for real-time automated recognition of traffic accidents based on statistical logistic regression analysis, which records the trajectories of vehicles involved in accidents as guidelines for investigators. Reference 125 presented robust incident detection systems based on the fusion of audio and visual data captured by hybrid systems that included video cameras and microphone arrays, as illustrated in Fig. 5. While traditional video-based vehicle detection and tracking was implemented, improved performance was achieved by introducing sophisticated algorithms that support fusion of multiple, asynchronous sources of data. This resulted in a system that offered improved robustness to varying environmental conditions relative to traditional vision-based systems, as well as increased area coverage relative to traditional microphone arrays. The authors present exemplary vehicle detection outputs achieved by the system under extreme weather conditions such as heavy fog, which would render a traditional vision-based system inoperable. The asynchronous nature of the data sources resulted from the nonoverlapping operating areas of each of the systems (see Fig. 5); synchronization of data sources was achieved by predicting the time it would take a vehicle to traverse the blind area between the two disjoint coverage areas via video-based speed estimation and knowledge of relevant sensor geometry parameters and scene dimensions. The system was successfully applied to the detection of stranded vehicles, traffic jams, and wrong-way driving episodes on Austrian highways. We expect the ever-increasing sophistication of low-level video analytic algorithms devoted to motion detection and object tracking coupled with advanced machine learning techniques to contribute to the increased automation, robustness, and self-sufficiency of incident detection systems. 
This, in turn, should be reflected in shorter response times and improved efficiency and performance of first-responder entities and personnel. 3.3.Video-Based TollingAutomated open road tolling (ORT) or free-flow tolling refers to the collection of road use fees without the need for physical toll booths. The idea of using wayside electronic transponders to record vehicles passing specific points on roads for the purpose of tolling was first proposed in 1959.126 The advantages of automated tolling technology include improved customer service, network operations, congestion management, and pricing.127 An advantage of video-based tolling systems is that, since toll fees typically vary across different vehicle types, such systems can seamlessly be extended to perform vehicle classification tasks. Video-based tolling is based on high-speed image capture and license plate recognition technologies, and is typically implemented in conjunction with other vehicle classification systems, typically based on the use of transponders such as radio frequency identification (RFID) devices. The downside of RFID-based systems is that they require compliance from drivers, as they require specifically designed tags or transponders to be carried or installed in the vehicles. The first video-based tolling system deployed in North America (along Highway 407 in the greater Toronto area) uses a video module to determine the entry and exit points of a vehicle and performs license plate recognition and billing based on time, distance travelled, and vehicle type. A database of video accounts is then built by matching the recognized license plate numbers with the driver and vehicle information.128 A similar system is in place in the state of Virginia, where a license plate recognition system is used to capture images of license plates of vehicles without a valid transponder that pass through a toll point, as illustrated in Fig. 
6.129 Starting in February of 2003, the city of London has charged a fee for driving privately owned vehicles in its central area during weekdays as a way to reduce congestion and raise revenue.130 A network of video cameras records license plate numbers and matches them against a list of customers who have made a payment in advance. The owners of vehicles for which the congestion fee has not been paid are sent a fine. Similar camera-enforced pricing systems for congestion avoidance exist in Stockholm131 and Singapore.132 The Texas Department of Transportation deployed the pay-by-mail system in the fall of 2006, which allows drivers who do not possess a Texas toll tag to use electronic toll collection facilities that perform tolling based on license plate recognition systems. The drivers receive a monthly bill in the mail via the vehicle registration data linked to their license plate.133 Systems that require drivers to call in advance and register their license plate number in a database exist in Melbourne, Australia,134 and Santiago, Chile.135 The driver is billed when the system’s video cameras pick out the registered license plate number while in transit. Video analytics technologies have also been used in Austria since 2004 and in Germany since 2005 to enforce road-usage fees for freight vehicles.136

Fig. 6 License plate recognition system used to identify vehicles without a radio frequency identification transponder.129

Video-based toll collection has driven the expansion of high-occupancy vehicle (HOV) lanes to high-occupancy toll (HOT) lanes in certain U.S. states such as California, Texas, Georgia, and Minnesota.137 In HOT, the assessed toll is a function of the number of occupants in the vehicle. For both HOV and HOT applications, enforcement is very difficult and is typically performed by police officers detaining and visually inspecting vehicles. Enforcement rates of 10% are often quoted where actual violation rates can be of the vehicles using the HOV lane. 
Computer vision techniques are being introduced to automate or semiautomate the enforcement process.138–140 Reference 140 recently proposed a classifier-based imaging and face detection method of enforcement. Successive mean quantization transform (SMQT) features are chosen due to their relative insensitivity to illumination variations. SMQT consists of a series of simple operations. First, the mean of the pixels in a $3 \times 3$ pixel region surrounding the pixel of interest at location $(x, y)$ is calculated. Next, those pixels with gray values above the mean are set to 1 and all others to 0. The result is a 9-bit pattern of zeros and ones (one bit per pixel in the local region) with 512 possible patterns associated with the pixel of interest. This 9-bit pattern can be described as a binary nine-dimensional feature vector corresponding to pixel location $(x, y)$. This process is repeated for all pixels within an $M \times N$ frame where image classification is to be performed. The features are then fed to a classification technique referred to as sparse network of winnows (SNoW) that classifies “face” versus “no-face” regions in the passenger position of the front seat. SNoW employs linear classification in a very high-dimensional sparse feature space and can be described mathematically as

$$y = \operatorname{sgn}\left(\sum_i w_i f_i\right),$$

where $f_i$ is the $i$'th element (0 or 1) of feature vector $\mathbf{f}$, $w_i$ is a weighting factor that is learned during the training phase using an iterative update rule, and $y$ is the class label. Using SMQT and SNoW, Ref. 140 was able to achieve a 3% error rate (false violator) and a 90% true positive rate on a data set where passengers were generally facing forward. Figure 7 shows sample output images illustrating the classification performance of the proposed approach.

Fig. 7 Face classification in seat passenger positions: red, classified as face; green, classified as no face.140

Given their flexibility and scalability, we expect video-based toll collection technologies to gradually supersede RFID and tag-based solutions as the technology of choice to satisfy the ever-increasing need for ORT. First, the transition would eliminate the need for voluntary enrollment at the customer end, thus significantly enhancing the penetration rate and pervasiveness of the technology; note that participation level is an important parameter in transponder-based tolling because processing costs of nontransponder transactions are significantly higher than the cost of transponder transactions. Migrating to video-based solutions reduces this dependence on the willingness of drivers to subscribe to a given technology. Operational and maintenance costs of automated tolling technologies should also decrease, as there would be no need to distribute and manage transponders, manually process payments and disputes, pursue nonpayers, etc. Lastly, current and future applications such as vehicle classification for efficient routing and automated rate selection from vehicle type, automatic collection of evidentiary imagery and footage, as well as variable toll rates (based, for example, on level of congestion, occupancy, time of day, day of week, etc.) would be readily supported.

4. Security and Law Enforcement

Computer vision technologies are playing key roles in the areas of transportation security and law enforcement. There are several common elements shared by computer vision systems aiming to meet security or law enforcement needs. For example, the detection and identification of events of interest is an important capability common to both security and law enforcement. On the other hand, there are also several distinct characteristics that separate a security application from law enforcement. 
For example, prediction and prevention are important for security applications while accuracy and evidence are important for law enforcement. In many cases, a security system can serve as a front-end of a law enforcement system. For example, in order to enforce certain traffic laws, it is necessary to detect and identify the occurrence of a violation. Below, we focus on several example applications within these two broad categories to elucidate the general principles.

4.1. Security

Video cameras have been widely used for security and surveillance for quite some time due to their low cost, ease of installation and maintenance, and ability to provide rich and direct visual information to operators. The use of video cameras enables centralized operations, making it possible for a single operator to monitor multiple locations at once. It is also possible to go back in time and review events of interest. Many additional benefits can be gained from a video sensing and recording modality by applying computer vision technologies. Consider that, traditionally, the output of these cameras has either been viewed and analyzed in real time by human operators or archived for later use if certain events have occurred. The former is error prone and costly, while the latter forgoes critical capabilities such as prediction and prevention. Computer vision assistance and the extraction of video analytics are therefore of great research interest as a way to fully reap the benefits of video sensing.

4.1.1. Alert and warning systems

In some applications, very rapid analysis of large video databases can be critical in a life-or-death situation. Amber Alert is an emergency alert system to promptly inform the public when a child has been abducted. It has been successfully implemented in several countries throughout the world. 
When sufficient information is available about the incident (e.g., description of captor’s vehicle, plate number, color, etc.), a search can be conducted across large databases of video that have been acquired from highway, local road, traffic light, and stop sign monitoring, to track and find the child. Similar to Amber Alert and much more common is Silver Alert, which is a notification issued by local authorities when a senior citizen or mentally impaired person is missing. Statistics indicate that it is highly desirable that an Amber/Silver Alert–related search be conducted quickly and efficiently, as 75% of the abducted are murdered within the first three hours. Consider a statement from the West Virginia code on Amber Alert 15-3A-7: “the use of traffic video recording and monitoring devices for the purpose of surveillance of a suspect vehicle adds yet another set of eyes to assist law enforcement and aid in the safe recovery of the child.” Recent advances have been made in efficiently searching for vehicles in large video databases through an adaptive compression and compatible decompression method.112 Rather than selecting reference compression frames in a conventional manner at fixed time intervals, Ref. 112 selects reference frames as those where vehicles are in an optimal viewing position. Thus, the vehicles captured across many hours of video can be viewed by only decompressing the reference frames, as illustrated in Fig. 8. By performing reference frame selection based on the particular video content, the algorithm from Ref. 112 narrows down the search space relative to conventional approaches. The reduction in search space size depends on traffic conditions, and the approach works best in low- and medium-traffic volume scenarios; there, the boost in efficiency of subsequent search tasks can be significant relative to the naïve approach in which reference frames are inserted at fixed rates. 
Beyond the specific application of efficient video search and retrieval, Ref. 112 introduces a potential paradigm shift away from the traditional approach whereby computer vision–related tasks are performed on uncompressed images and video. By exploiting the feature-rich nature of compressed data, the proposed framework incurs negligible amounts of additional computational expenses on top of what is required for video compression. Since motion-compensated video compression can be performed in real time on embedded hardware found in most internet protocol (IP) cameras, the gap to real-time performance of computer vision tasks is significantly bridged by operating in the compressed domain. 4.1.2.Traffic surveillanceComputer vision in the context of traffic surveillance addresses problems such as vehicle/pedestrian detection, tracking, traffic flow measurements, and the representation, understanding, and prediction of human behavior (e.g., anomalous incident detection, illegal turns, aggressive driving patterns, etc.). Several of these applications have been discussed in detail in earlier sections. Here, we focus on the overall system through the sampling of several survey papers in human and/or traffic surveillance79,141–146 with an emphasis on research efforts in behavior representation, understanding, and prediction. A general discussion framework used by papers on human and traffic surveillance is to categorize vision tasks into three stages:

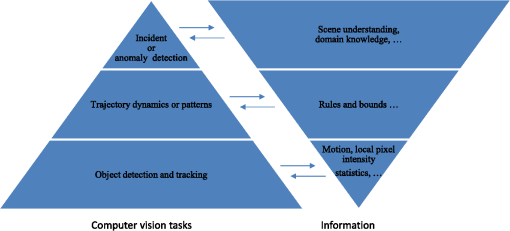

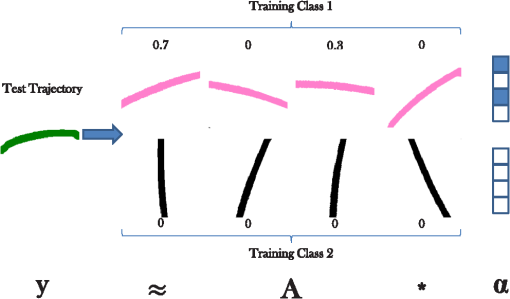

However, in the literature, different categorization boundaries, different focuses, different methods of grouping these technologies, and different levels of detail are presented. In this paper, our discussion follows a three-level framework similar to that used in Ref. 144. Figure 9 shows an illustration of an exemplary hierarchy of three-level computer vision tasks and the corresponding information needed in traffic surveillance applications. A typical traffic surveillance system would start with object detection and tracking, i.e., the low-level computer vision task, as described in Sec. 2.2. At this level, the amount of information needed is smaller compared to other levels. Detecting an object of interest can often be performed via pixel intensity changes over time such as frame-to-frame differences, statistics of local pixel intensity over time such as a Gaussian mixture model for background subtraction, or motion analysis using motion vectors or optical flow. Other machine learning and pattern recognition methods can be used to directly detect a specific type of object such as a vehicle (see Sec. 4.1.3), but the computation of these methods tends to be more costly. Once the object of interest is detected, many tracking methods, such as mean-shift, feature or template matching, etc., can be applied to follow the object of interest. Tracking yields trajectories of the objects of interest. For interested readers, an excellent review of computer vision technologies on object detection and tracking can be found in Ref. 147. At this level, many traffic flow measurements such as vehicle count, average traffic speed, etc., become readily available. However, for further traffic surveillance needs such as access control, illegal turn detection, and incident or anomaly detection, we need to move to the middle level where the dynamics or patterns of these trajectories are understood and analyzed. 
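As a rough illustration of the simplest low-level cue mentioned above, frame-to-frame differencing, the following Python sketch thresholds the absolute intensity change between two grayscale frames and reports a bounding box around the changed pixels. It is a minimal sketch under stated assumptions and is not taken from any of the surveyed systems: the function names, the list-of-lists frame representation, and the threshold of 25 gray levels are all hypothetical choices made for illustration.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale image stored as rows of 0-255 intensities


def frame_difference_mask(prev: Frame, curr: Frame, threshold: int = 25) -> Frame:
    """Mark pixels whose intensity changed by more than `threshold` between frames."""
    return [
        [1 if abs(c - p) > threshold else 0 for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def bounding_box(mask: Frame) -> Optional[Tuple[int, int, int, int]]:
    """Return (top, left, bottom, right) enclosing all marked pixels, or None."""
    coords = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return (min(rows), min(cols), max(rows), max(cols))


# Toy scene: a bright 2x2 "vehicle" on a dark road moves one pixel to the right.
BACKGROUND, VEHICLE = 10, 200
prev_frame = [[BACKGROUND] * 6 for _ in range(4)]
curr_frame = [[BACKGROUND] * 6 for _ in range(4)]
for r in (1, 2):
    for c in (1, 2):
        prev_frame[r][c] = VEHICLE
    for c in (2, 3):
        curr_frame[r][c] = VEHICLE

mask = frame_difference_mask(prev_frame, curr_frame)
box = bounding_box(mask)
```

In this toy scene only the leading and trailing edges of the object are marked, because the interior pixels have the same intensity in both frames. This is one reason statistical background models, such as the Gaussian mixture model mentioned above, are often preferred when slow or stationary vehicles must be detected.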
Understanding trajectory dynamics or patterns often involves grouping and classification. Machine learning techniques are well suited for this task. A common approach is to first perform clustering in the training phase based on some form of distance or similarity measure. At the highest level of the hierarchy, the resulting clusters are then modeled to represent the behavior of each cluster. As new trajectories are identified from the traffic scene, they are compared to these models for discovery of events of interest such as incidents, anomalies, etc. For more complicated behavior, the interaction among the behavior of a group of individuals may also need to be examined and modeled. Note that this does not mean that one can blindly apply known machine learning techniques and have success; additional domain knowledge and contextual knowledge about the scene and rules are fairly critical for traffic surveillance. For example, not all vehicles would travel at the exact same speed within the field of view of the surveillance camera. As a result, the number of samples of the different trajectories may not be the same. Hence a normalization step is generally needed prior to the estimation of the traveled distance in the clustering step. For another example, a stop-and-go trajectory pattern is considered normal at an intersection with a stop sign while the same pattern is an indication of traffic congestion at a highway segment. In this case, some rule-based reasoning or additional contextual information about the scene needs to be provided and taken into account in the clustering or behavior modeling. Fig. 9Illustration of an example of three-level computer vision tasks and the corresponding information needed in traffic surveillance applications. Note that this figure is a modification of Fig. 1 in Ref. 144.  It is clear that trajectory analysis lies at the heart of many of the approaches in the middle and higher levels of the surveillance hierarchy. 
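The normalization step mentioned above can take several forms. The Python sketch below shows one possibility, assumed here purely for illustration: each trajectory is resampled to a fixed number of points spaced evenly along its arc length, after which a mean point-to-point Euclidean distance can serve as the similarity measure for clustering. The function names and the default of 16 points are hypothetical and do not come from the surveyed work.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def resample(trajectory: List[Point], n_points: int) -> List[Point]:
    """Resample a polyline to n_points spaced evenly along its arc length."""
    if n_points < 2 or len(trajectory) < 2:
        raise ValueError("need at least two input points and two output points")
    # Cumulative arc length at each input sample.
    cum = [0.0]
    for (x0, y0), (x1, y1) in zip(trajectory, trajectory[1:]):
        cum.append(cum[-1] + math.hypot(x1 - x0, y1 - y0))
    total = cum[-1]
    if total == 0.0:  # the object never moved
        return [trajectory[0]] * n_points
    out: List[Point] = []
    seg = 0
    for k in range(n_points):
        target = total * k / (n_points - 1)
        # Advance to the input segment that contains the target arc length.
        while seg < len(cum) - 2 and cum[seg + 1] < target:
            seg += 1
        span = cum[seg + 1] - cum[seg]
        t = 0.0 if span == 0.0 else (target - cum[seg]) / span
        (x0, y0), (x1, y1) = trajectory[seg], trajectory[seg + 1]
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out


def trajectory_distance(a: List[Point], b: List[Point], n_points: int = 16) -> float:
    """Mean Euclidean distance between corresponding resampled points."""
    ra, rb = resample(a, n_points), resample(b, n_points)
    return sum(math.hypot(xa - xb, ya - yb)
               for (xa, ya), (xb, yb) in zip(ra, rb)) / n_points


# Same straight path, sampled 5 times (slow vehicle) and 3 times (fast vehicle).
slow = [(0.0, 0.0), (2.5, 0.0), (5.0, 0.0), (7.5, 0.0), (10.0, 0.0)]
fast = [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0)]
turn = [(0.0, 0.0), (5.0, 0.0), (5.0, 5.0)]  # vehicle turning at the midpoint

same_path = trajectory_distance(slow, fast)
different_path = trajectory_distance(slow, turn)
```

With this normalization, the slow and fast vehicles on the same path are at essentially zero distance, while the turning trajectory is clearly separated. Note that arc-length resampling discards speed information, so patterns such as stop-and-go would need a time-based resampling or an additional speed feature.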
A general discussion on vision-based trajectory learning for surveillance can be found in Ref. 144. Furthermore, in the broader context of surveillance, many existing methods such as dynamic time warping, finite-state machine, hidden Markov models, time-delay neural network, syntactic techniques, nondeterministic finite automaton, self-organizing neural network, etc., have been applied for behavior understanding.145 Thus, advances in the high-level vision tasks for traffic surveillance are promising. Recently, Ref. 146 presented a thorough review on computer vision techniques for urban traffic surveillance, where commercial systems in use and computer vision techniques used in traffic analysis systems are reviewed; the state of the art for prototype and academic systems is analyzed, and detailed discussions and an outlook to future research are provided. The survey highlights key differences between urban traffic surveillance and highway traffic surveillance. As would be expected, urban traffic surveillance is much more challenging than highway surveillance due to the complexity of scene and road usage. For example, for a highway scene as compared to an urban scene, vehicle trajectories have fewer and simpler patterns, pedestrian detection (see Ref. 148 for a survey in this area) and analysis of vehicle–pedestrian interactions are generally not necessary or critical, and occlusion is not as challenging. Another interesting discussion in Ref. 146 is the comparison between two surveillance system methodologies. Earlier systems are likely to follow a framework where object tracking is done without knowledge of object classes (thus, being simpler and faster). With the advances in computer vision and computation, a framework where knowledge of object classes is available prior to tracking has become more promising in solving more challenging tasks. The latter framework has more potential for better performance (accuracy, robustness) at the expense of more processing. 
Since these systems need to operate in real time or near real time in practice, the choice between the two frameworks is not straightforward and is likely to be application dependent. We elaborate now on the problem of anomalous incident detection, which is at the highest level of the hierarchy. Examples of transportation anomalies include traffic violations, accidents, dangerous pedestrian–driver behavior, unattended baggage at public transportation sites, etc. An excellent survey of video anomaly detection techniques can be found in Ref. 149. The approaches can be broadly categorized into supervised methods, where both normal and anomalous events are classified, and the more realistic unsupervised scenario, where class labels are available only for normal events. In the supervised case, anomaly detection therefore reduces to a classification problem, while in the unsupervised case, the problem is one of outlier detection. In the transportation domain, many anomaly detection approaches leverage the aforementioned advances in object tracking to define normal and anomalous events in terms of vehicle trajectories. A recent development in this area is the use of sparse reconstruction techniques for detecting anomalous vehicle trajectories.149,150 An over-complete dictionary comprising normal trajectory classes is first constructed in a training phase. The trajectory classes can either be labeled manually using semantic categories or derived via an automatic unsupervised (e.g., clustering) technique. The hypothesis is that any new normal trajectory should lie within the linear span of other normal trajectories within the same class and thus can be reconstructed by combining a relatively small number of dictionary elements. Equivalently, the reconstruction coefficient vector is sparse, as is illustrated in Fig. 10. Fig. 
10 Sparse reconstruction for anomaly detection: $\mathbf{y}$ is a test trajectory vector; $\mathbf{A}$ is the training dictionary; and $\boldsymbol{\alpha}$ is the sparse reconstruction coefficient vector.

Conversely, reconstruction of anomalous trajectories is likely to involve a larger number of dictionary elements, potentially across multiple classes, and therefore the reconstruction vector would not be sparse. Anomaly detection therefore reduces to sparse reconstruction of a test trajectory with respect to the training dictionary, followed by a method of measuring sparsity. Reference 149 presents a formulation for single-object events. Reference 150 extends the sparsity framework to joint modeling of multiobject events and furthermore introduces a kernel to improve class separability. Both techniques accomplish sparse reconstruction via $\ell_1$-norm minimization. Since anomaly detection algorithms reduce to a binary decision (i.e., is the event an anomaly or not?), a standard method of evaluating algorithm performance is to report the confusion matrix of true and false positives and negatives. Table 4 compares confusion matrices obtained from the joint sparsity model150 with another well-recognized algorithm in Ref. 151 that employs a one-class SVM classifier on vehicle trajectories. The data set used to train and test the approaches is a collection of vehicle trajectories obtained at a stop sign intersection. Vehicles coming to a complete stop at the intersection are labeled as normal events. The goal is thus to flag stop sign violations as anomalous events. The sparsity-based method is shown to significantly outperform that of Ref. 151, especially in the presence of occlusions.

Table 4 Confusion matrices for the joint sparsity and single-class support vector machine anomaly detection techniques on stop sign intersection data. Columns indicate ground truth, and rows indicate detected outputs.