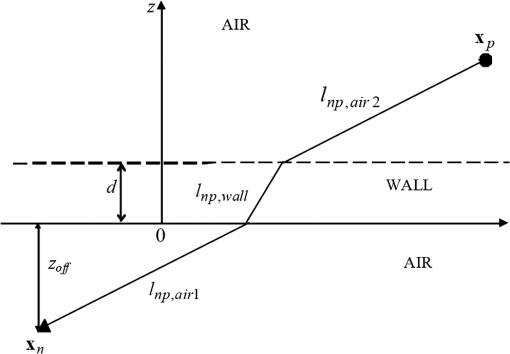

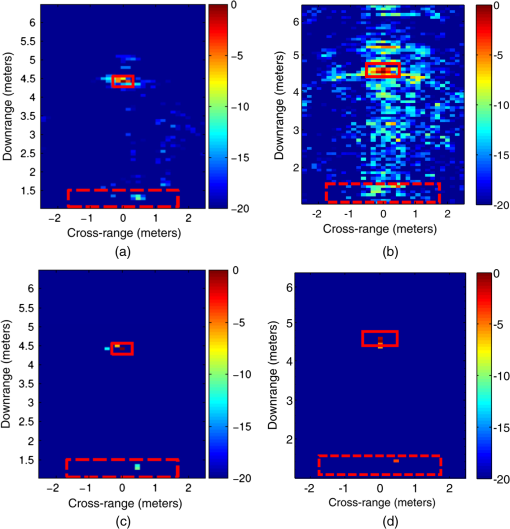

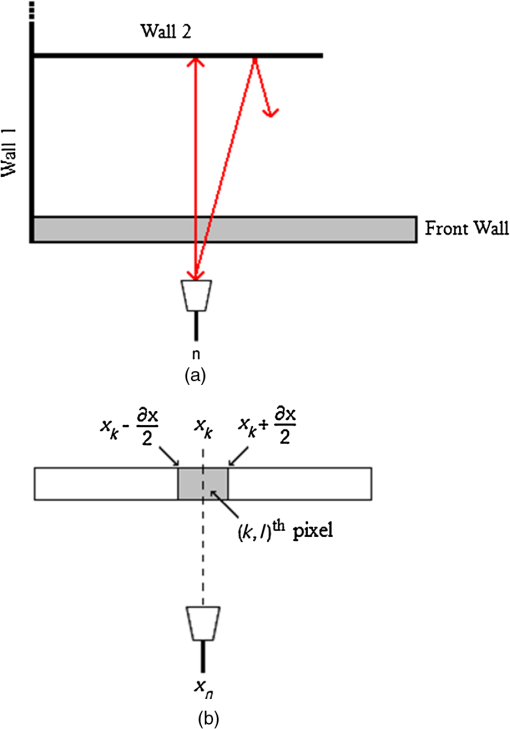

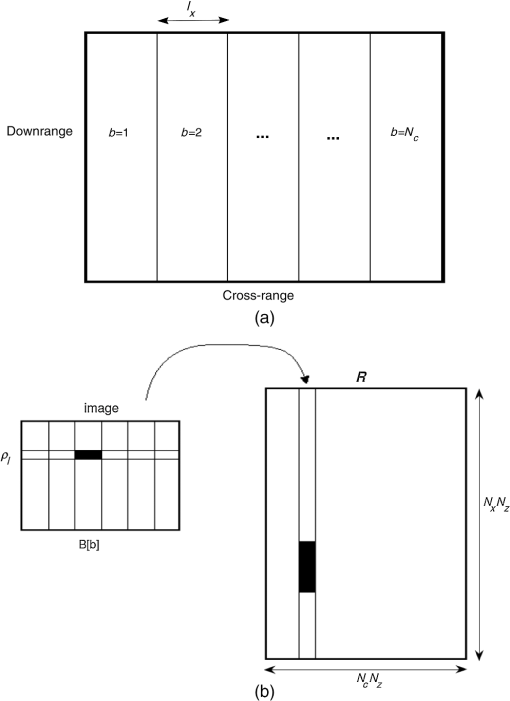

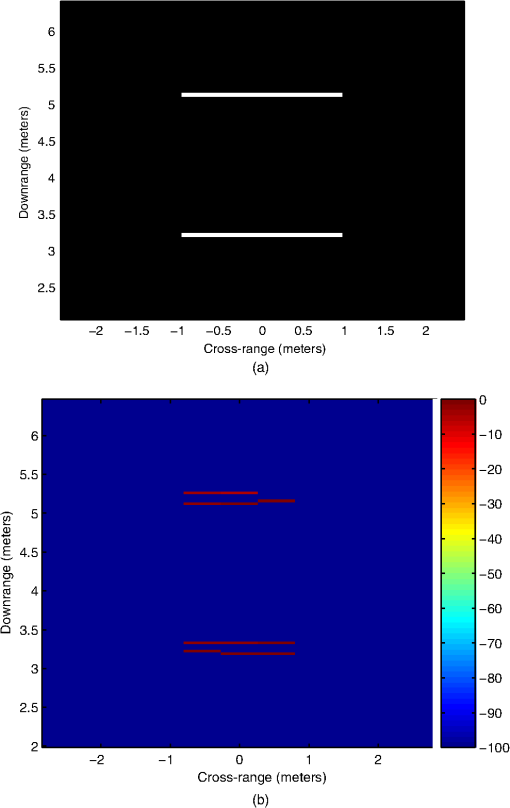

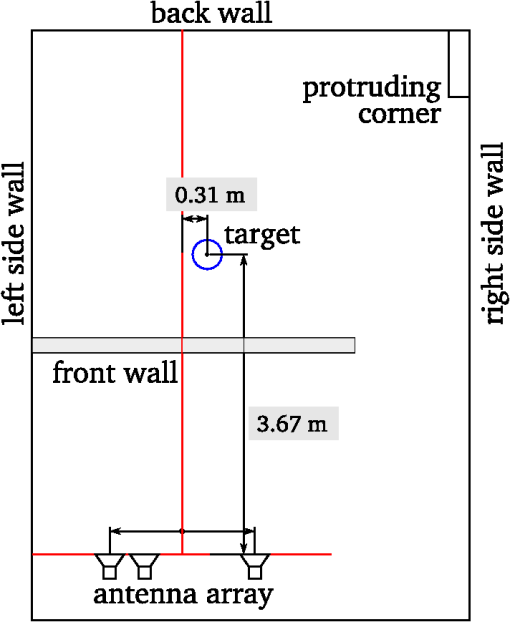

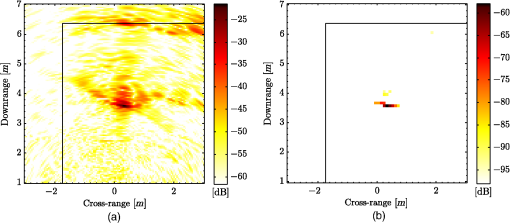

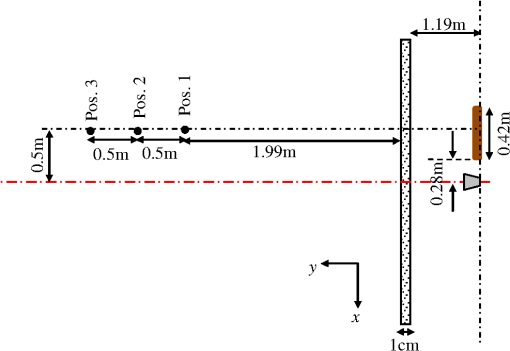

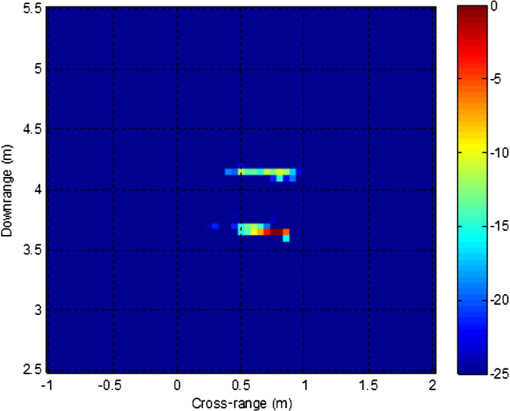

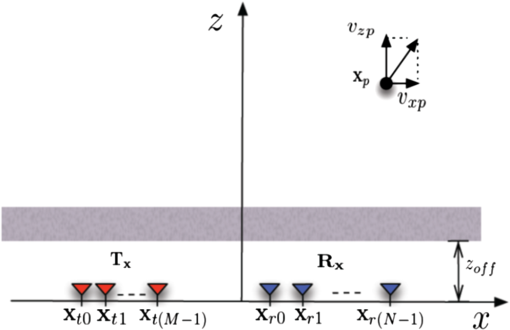

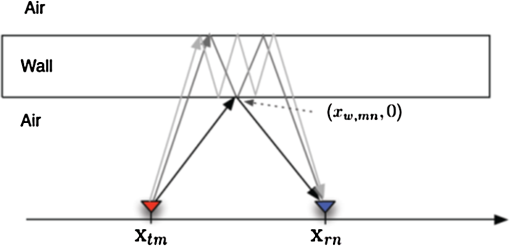

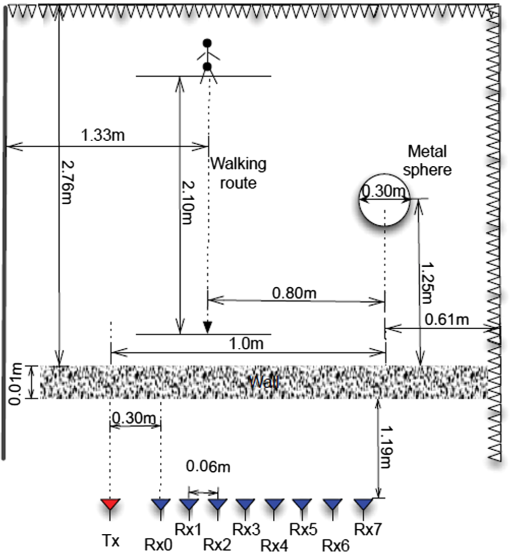

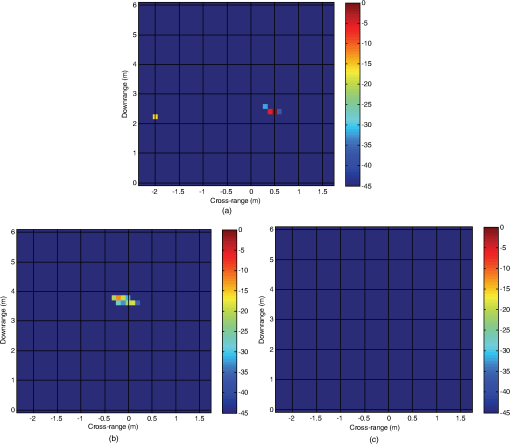

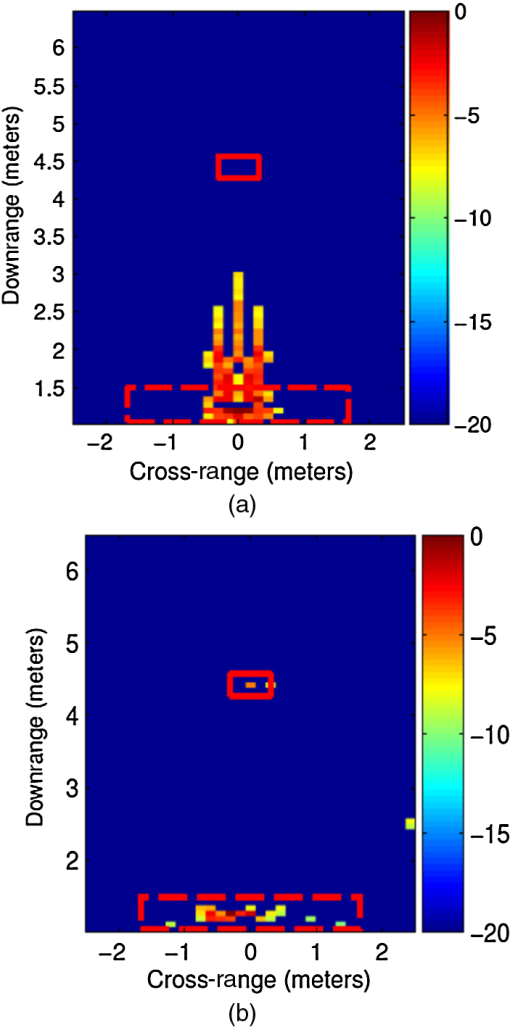

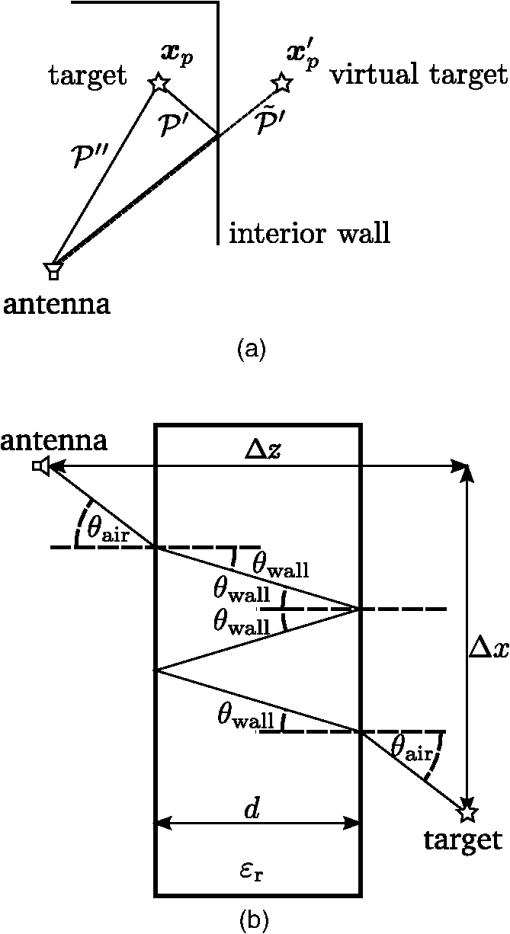

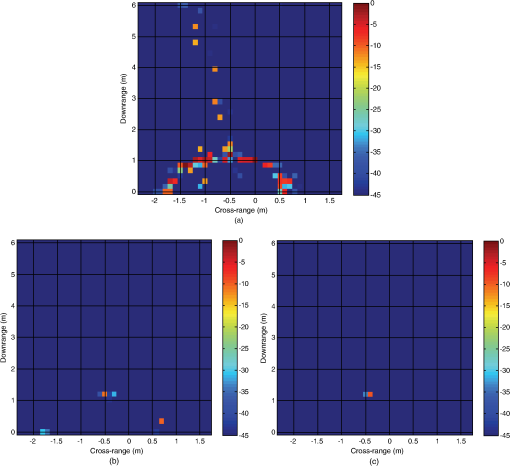

1. Introduction

Through-the-wall radar imaging (TWRI) is an emerging technology that addresses the desire to see inside buildings using electromagnetic (EM) waves for various purposes, including determining the building layout, discerning the building intent and the nature of activities, locating and tracking the occupants, and even identifying and classifying inanimate objects of interest within the building. TWRI is highly desirable for law enforcement, fire and rescue, emergency relief, and military operations.1–6

Applications primarily driving TWRI development can be divided according to whether information is sought on motion within a structure or on the structure itself and its stationary contents. Motion detection is highly desirable for discerning building intent and in many fire and hostage situations. Movements can be discriminated from background clutter through change detection (CD) or exploitation of Doppler.7–24 One-dimensional (1-D) motion detection and localization systems employ a single transmitter and receiver and can only provide range to motion, whereas two- and three-dimensional (2-D and 3-D) multi-antenna systems can localize moving targets more accurately. 3-D systems have higher processing requirements than 2-D systems. However, the third dimension provides height information, which permits distinguishing people from animals, such as household pets. This is important since radar cross-section alone can be unreliable for behind-the-wall targets.

Imaging of structural features and stationary targets inside buildings requires at least 2-D and preferably 3-D systems.25–43 Because these targets do not move, such systems cannot rely on Doppler processing or CD for target detection and separation. Synthetic aperture radar (SAR) based approaches have been the most commonly used algorithms for this purpose.
Most conventional SAR techniques neglect propagation distortions such as those encountered by signals passing through walls.44 These distortions degrade performance and can lead to ambiguities in target and wall localization. Free-space assumptions no longer apply after the EM waves propagate through the first wall. Without factoring in propagation effects, such as attenuation, reflection, refraction, diffraction, and dispersion, images of building contents will be severely distorted. As such, image formation methods, array processing techniques, target detection, and image sharpening paradigms must work in concert and be reexamined in view of the nature and specificities of the underlying sensing problem.

In addition to exterior walls, multipath and clutter can significantly contaminate the radar data. This reduces the system's ability to image building interiors and to localize and track targets behind walls. Multiple reflections within the wall produce wall residuals along the range dimension. These wall reverberations can be stronger than target reflections, masking targets and rendering them undetectable, especially weak targets close to the wall.45 Multipath stemming from multiple reflections of EM waves off the targets in conjunction with the walls may cause power to be focused at pixels other than those corresponding to the target.
This gives rise to ghosts, which can be confused with real targets inside buildings.46–49 Further, uncompensated refraction through walls can lead to localization or focusing errors, causing offsets and blurring of imaged targets.26,39 SAR techniques and tomographic algorithms specifically tailored for TWRI can make some of the adjustments needed for wave propagation through solid materials.26–30,36–41,50–57 While such approaches are well suited to shadowing, attenuation, and refraction effects, they account neither for multipath nor for strong reflections from the front wall.

The problems caused by front wall reflections can be successfully tackled through wall clutter mitigation techniques. Several approaches have been devised. They can be categorized into those based on estimating the wall parameters and those exploiting either the wall backscattering strength or its invariance with antenna location.39,45,58–61 In Refs. 39 and 58, a method to extract the dielectric constant and thickness of a frequency-independent wall from the time-domain scattered field was presented. The time-domain response of the wall was then analytically modeled and removed from the data. In Ref. 45, a spatial filtering method was applied to remove the DC component (zero spatial frequency along the array) corresponding to the constant-type radar return typically associated with the front wall. The third method, presented in Refs. 59–61, is based not only on the invariance of wall scattering along the array but also on the fact that wall reflections are relatively stronger than target reflections. As a result, when singular value decomposition (SVD) is applied to the measured data matrix, the wall subspace is usually captured by the singular vectors associated with the most dominant singular values. The wall contribution can then be removed by orthogonal subspace projection. Several methods have also been devised for dealing with multipath ghosts in order to provide a proper representation of the ground truth.
Earlier work attempted to mitigate the adverse effects stemming from multipath propagation.27 Subsequently, research has been conducted to exploit the additional information carried by the multipath returns. The work in Ref. 49 considered multipath exploitation in TWRI, assuming prior knowledge of the building layout. A scheme taking advantage of the additional energy residing in the target ghosts was devised. An image was first formed, the ghost locations for each target were calculated, and the ghosts were then mapped back onto the corresponding target. In this way, the image became ghost-free with an increased signal-to-clutter ratio (SCR).

More recently, the focus of TWRI research has shifted toward addressing constraints on cost and acquisition time. The ultimate objective is to provide reliable situational awareness through high-resolution imaging in a fast and efficient manner. This goal is challenged primarily by the use of wideband signals and large array apertures. Most radar imaging systems acquire samples in frequency (or time) and space and then apply compression to reduce the amount of stored information. This approach has three inherent inefficiencies. First, as the demands for high resolution and more accurate information increase, so does the number of data samples to be recorded, stored, and subsequently processed. Second, the traditional sampling process does not exploit significant data redundancies. Third, it is wasteful to acquire and process data samples that will later be discarded. Further, producing an image of the indoor scene from few observations can be logistically important, as some measurements in space and frequency or time can be difficult, unavailable, or impossible to attain.
Toward the objective of providing timely actionable intelligence in urban environments, emerging compressive sensing (CS) techniques have been shown to reduce cost and enable efficient sensing operations that allow super-resolution imaging of sparse behind-the-wall scenes.10,62–76 CS is an effective technique for scene reconstruction from a relatively small number of data samples without compromising imaging quality.77–89 In general, the minimum number of data samples, or sampling rate, required for scene image formation is governed by the Nyquist theorem. However, when the scene is sparse, CS provides very efficient sampling, thereby significantly decreasing the volume of data that must be collected.

In this paper, we focus on CS for TWRI and present a review of $\ell_1$-norm reconstruction techniques that address the unique challenges associated with fast and efficient imaging in urban operations. Sections 2–5 deal with imaging of stationary scenes, whereas moving target localization is discussed in Secs. 6 and 7. More specifically, Sec. 2 deals with CS-based strategies for stepped-frequency radar imaging of sparse stationary scenes with reduced data volume in the spatial and frequency domains. Prior and complete removal of clutter is assumed, which renders the scene sparse. Section 3 presents CS solutions in the presence of front wall clutter. Wall mitigation in conjunction with CS is presented for the case when the same reduced frequency set is used at all of the employed antennas. Section 4 considers imaging of building interior structures using a CS-based approach. This approach exploits prior information on building construction practices to form an appropriate sparse representation of the building interior layout. Section 5 presents a CS-based multipath exploitation technique that achieves good image reconstruction in rich multipath indoor environments from few spatial and frequency measurements.
Section 6 deals with joint localization of stationary and moving targets using CS-based approaches, provided that the indoor scene is sparse in both stationary and moving targets. Section 7 discusses a sparsity-based CD approach to moving target indication for TWRI applications. It deals with cases in which heavy clutter, caused by strong reflections from exterior and interior walls, reduces the sparsity of the scene. Concluding remarks are provided in Sec. 8.

To avoid overcomplicating the notation, some symbols denote different variables in different sections of the paper; in those cases, the variables are redefined to reflect the change. The progress reported in this paper is substantial and noteworthy. Nevertheless, many challenging scenarios and situations remain unresolved by current techniques and, as such, further research and development are required. With the advent of better hardware and improved system architectures, opportunities for handling more complex building scenarios will certainly increase.

2. CS Strategies in Frequency and Spatial Domains for TWRI

In this section, we apply CS to through-the-wall imaging of stationary scenes, assuming prior and complete removal of the front wall clutter.62,63 For example, if the reference (empty) scene is known, background subtraction can be performed to remove wall clutter, thereby improving the sparsity of the behind-the-wall stationary scene. We assume stepped-frequency SAR operation. We first present the through-the-wall signal model, followed by a description of sparsity-based scene reconstruction, highlighting the key equations. The problem formulation can be modified in a straightforward manner for pulsed operation and multistatic systems.
2.1. Through-the-Wall Signal Model

Consider a homogeneous wall of thickness $d$ and dielectric constant $\varepsilon$ located along the $x$-axis, with the region to be imaged located beyond the wall along the positive $z$-axis. Assume that an $N$-element line array of transceivers is located parallel to the wall at a standoff distance $z_{\text{off}}$, as shown in Fig. 1. Let the $n$'th transceiver, located at $(x_n, -z_{\text{off}})$, illuminate the scene with a stepped-frequency signal of $M$ frequencies, which are equispaced over the desired bandwidth $f_{M-1} - f_0$,

$$f_m = f_0 + m\,\Delta f, \qquad m = 0, 1, \ldots, M-1, \tag{1}$$

where $f_0$ is the lowest frequency in the desired frequency band and $\Delta f$ is the frequency step size. The reflections from any targets in the scene are measured only at the same transceiver location. Assuming the scene contains $P$ point targets and the wall return has been completely removed, the output of the $n$'th transceiver corresponding to the $m$'th frequency is given by

$$z(m,n) = \sum_{p=0}^{P-1} \sigma_p \exp(-j2\pi f_m \tau_{p,n}), \tag{2}$$

where $\sigma_p$ is the complex reflectivity of the $p$'th target, and $\tau_{p,n}$ is the two-way traveling time between the $n$'th antenna and the target. The complex amplitude due to free-space path loss, wall reflection/transmission coefficients, and wall losses is assumed to be absorbed into the target reflectivity. The propagation delay $\tau_{p,n}$ is given by27–28,40

$$\tau_{p,n} = \frac{2\,l_{np,\text{air},1}}{c} + \frac{2\,l_{np,\text{wall}}}{v} + \frac{2\,l_{np,\text{air},2}}{c}, \tag{3}$$

where $c$ is the speed of light in free space and $v = c/\sqrt{\varepsilon}$ is the speed through the wall. The variables $l_{np,\text{air},1}$, $l_{np,\text{wall}}$, and $l_{np,\text{air},2}$ represent the traveling distances of the signal before, through, and beyond the wall, respectively, from the $n$'th transceiver to the $p$'th target.

An equivalent matrix-vector representation of the received signals in Eq. (2) can be obtained as follows. Assume that the region of interest is divided into a finite number of pixels $N_x \times N_z$ in cross-range and downrange, and that the point targets occupy no more than $P$ ($\ll N_xN_z$) pixels. Let $s(k)$, $k = 0, 1, \ldots, N_xN_z - 1$, be a weighted indicator function, which takes the value $\sigma_p$ if the $p$'th point target exists at the $k$'th pixel; otherwise, it is zero.
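To make Eqs. (2) and (3) concrete, the following minimal Python sketch computes the refracted two-way delay through a homogeneous wall and then synthesizes the stepped-frequency return. It obtains the delay by solving Snell's law numerically, using bisection on the air incidence angle.

Several choices here are illustrative assumptions, not the authors' implementation:

- the geometry convention: antenna at $(x_n, -z_{\text{off}})$, wall occupying $0 \le z \le d$, target at $(x_p, z_p)$ with $z_p > d$;
- the helper names `through_wall_delay` and `received_signal`;
- the example values, including the hypothetical dielectric constant $\varepsilon = 6$.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in free space (m/s)


def through_wall_delay(x_n, x_p, z_p, z_off, d, eps, iters=100):
    """Two-way delay tau_{p,n} of Eq. (3) for a homogeneous wall.

    Assumed geometry: antenna at (x_n, -z_off), wall occupying 0 <= z <= d,
    target at (x_p, z_p) with z_p > d.
    """
    dx = abs(x_p - x_n)
    n_w = math.sqrt(eps)             # refractive index of the wall
    air = z_off + (z_p - d)          # total downrange distance covered in air

    def lateral(theta):
        # Cross-range offset reached for air incidence angle theta (Snell's law).
        s_w = math.sin(theta) / n_w
        return air * math.tan(theta) + d * s_w / math.sqrt(1.0 - s_w * s_w)

    # lateral() increases with theta; atan2(dx, air) overshoots because the
    # wall only adds offset, so [0, hi] brackets the solution for bisection.
    lo, hi = 0.0, math.atan2(dx, air)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if lateral(mid) < dx:
            lo = mid
        else:
            hi = mid
    theta = 0.5 * (lo + hi)
    theta_w = math.asin(math.sin(theta) / n_w)

    l_air1 = z_off / math.cos(theta)        # before the wall
    l_wall = d / math.cos(theta_w)          # inside the wall
    l_air2 = (z_p - d) / math.cos(theta)    # beyond the wall
    v = C / n_w                             # propagation speed in the wall
    return 2.0 * (l_air1 + l_air2) / C + 2.0 * l_wall / v


def received_signal(freqs, x_n, targets, z_off, d, eps):
    """Eq. (2): z(m, n) = sum_p sigma_p * exp(-j 2 pi f_m tau_{p,n}).

    targets: list of (sigma_p, x_p, z_p) tuples.
    """
    delays = [(sigma, through_wall_delay(x_n, x_p, z_p, z_off, d, eps))
              for sigma, x_p, z_p in targets]
    return [sum(sigma * cmath.exp(-2j * math.pi * f * tau) for sigma, tau in delays)
            for f in freqs]


if __name__ == "__main__":
    # Frequency grid of Sec. 2.3 (1 GHz start, 2.75 MHz step, 728 tones);
    # the target list and eps = 6.0 are hypothetical.
    freqs = [1e9 + m * 2.75e6 for m in range(728)]
    z_n = received_signal(freqs, x_n=0.3, targets=[(1.0, 0.0, 4.4)],
                          z_off=1.24, d=0.14, eps=6.0)
    print(len(z_n), abs(z_n[0]))
```

Bisection is used because the offset function is monotonic in the incidence angle, so convergence is guaranteed without derivatives. With $\varepsilon = 1$ the function reduces to twice the straight-line distance divided by $c$, which is a convenient sanity check.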
With the values of $s(k)$ lexicographically ordered into a column vector $\mathbf{s}$ of length $N_xN_z$, the received signal corresponding to the $n$'th antenna can be expressed in matrix-vector form as

$$\mathbf{z}_n = \boldsymbol{\Psi}_n \mathbf{s}, \tag{4}$$

where $\boldsymbol{\Psi}_n$ is a matrix of dimensions $M \times N_xN_z$, and its $m$'th row is given by

$$[\boldsymbol{\Psi}_n]_m = \left[\exp(-j2\pi f_m \tau_{0,n}) \;\; \cdots \;\; \exp(-j2\pi f_m \tau_{N_xN_z-1,n})\right], \tag{5}$$

with $\tau_{k,n}$ the two-way delay between the $n$'th antenna and the $k$'th pixel. Consider the measurement vector corresponding to all $N$ antennas, defined as

$$\mathbf{z} = \left[\mathbf{z}_0^T \; \mathbf{z}_1^T \; \cdots \; \mathbf{z}_{N-1}^T\right]^T. \tag{6}$$

The relationship between $\mathbf{z}$ and $\mathbf{s}$ is then given by

$$\mathbf{z} = \boldsymbol{\Psi}\mathbf{s}, \tag{7}$$

where

$$\boldsymbol{\Psi} = \left[\boldsymbol{\Psi}_0^T \; \boldsymbol{\Psi}_1^T \; \cdots \; \boldsymbol{\Psi}_{N-1}^T\right]^T. \tag{8}$$

The matrix $\boldsymbol{\Psi}$ is a linear mapping between the full data $\mathbf{z}$ and the sparse vector $\mathbf{s}$.

2.2. Sparsity-Based Data Acquisition and Scene Reconstruction

The expression in Eq. (7) involves the full set of measurements made at the $N$ array locations using the $M$ frequencies. For a sparse scene, it is possible to recover $\mathbf{s}$ from a reduced set of measurements. Consider $\breve{\mathbf{z}}$, a vector of length $Q_1Q_2$ ($\ll MN$) consisting of elements chosen from $\mathbf{z}$ as follows:

$$\breve{\mathbf{z}} = \boldsymbol{\Phi}\mathbf{z} = \boldsymbol{\Phi}\boldsymbol{\Psi}\mathbf{s}, \tag{9}$$

where $\boldsymbol{\Phi}$ is a $Q_1Q_2 \times MN$ matrix of the form

$$\boldsymbol{\Phi} = \operatorname{kron}(\boldsymbol{\vartheta}, \mathbf{I}_{Q_1}) \cdot \operatorname{diag}\{\boldsymbol{\phi}^{(0)}, \ldots, \boldsymbol{\phi}^{(N-1)}\}. \tag{10}$$

In Eq. (10), the symbols are defined as follows:

- kron denotes the Kronecker product, and $\operatorname{diag}\{\cdot\}$ denotes a block-diagonal matrix.
- $\mathbf{I}_{Q_1}$ is a $Q_1 \times Q_1$ identity matrix.
- $\boldsymbol{\vartheta}$ is a $Q_2 \times N$ measurement matrix constructed by randomly selecting $Q_2$ rows of an $N \times N$ identity matrix.
- $\boldsymbol{\phi}^{(n)}$, $n = 0, 1, \ldots, N-1$, is a $Q_1 \times M$ measurement matrix constructed by randomly selecting $Q_1$ rows of an $M \times M$ identity matrix.

Thus $\boldsymbol{\vartheta}$ determines the reduced antenna locations, whereas $\boldsymbol{\phi}^{(n)}$ determines the reduced set of frequencies corresponding to the $n$'th antenna location. The number of measurements required for successful CS reconstruction depends strongly on the coherence between $\boldsymbol{\Phi}$ and $\boldsymbol{\Psi}$. For the problem at hand, $\boldsymbol{\Phi}$ is the canonical basis and $\boldsymbol{\Psi}$ is similar to the Fourier basis; these two bases have been shown to exhibit maximal incoherence.80 Given $\breve{\mathbf{z}}$, we can recover $\mathbf{s}$ by solving

$$\hat{\mathbf{s}} = \arg\min_{\mathbf{s}} \|\mathbf{s}\|_1 \quad \text{subject to} \quad \breve{\mathbf{z}} \approx \boldsymbol{\Phi}\boldsymbol{\Psi}\mathbf{s}. \tag{11}$$

Ideally, minimization of the $\ell_0$ norm would provide the sparsest solution. Unfortunately, the resulting $\ell_0$ minimization problem is NP-hard. The $\ell_1$ norm has been shown to serve as a good surrogate for the $\ell_0$ norm.90 The $\ell_1$ minimization problem is convex and can be solved in polynomial time.

The problem in Eq. (11) can be solved using convex relaxation, greedy pursuit, or combinatorial algorithms.91–96 In this section, we consider orthogonal matching pursuit (OMP), which is known to provide a fast and easy-to-implement solution. Moreover, OMP is better suited when frequency measurements are used.95 The number of OMP iterations is usually associated with the sparsity level of the scene. In practice, this information is often unavailable a priori, and the stopping condition is heuristic. Underestimating the sparsity results in an incompletely reconstructed image (underfitting), whereas overestimating it causes some of the noise to be treated as signal (overfitting). Cross-validation (CV) has also been proposed to determine the stopping condition for greedy algorithms.97–99 CV is a statistical technique that separates a data set into a training set and a CV set. The training set is used for reconstruction, and the error on the CV set is used to detect the optimal stopping iteration. There is, however, a tradeoff between allocating measurements to reconstruction and to CV. More details can be found in Refs. 97 and 98.

2.3. Illustrative Results

A through-the-wall wideband SAR system was set up in the Radar Imaging Lab at Villanova University. A 67-element line array with an inter-element spacing of 0.0187 m, located along the $x$-axis, was synthesized parallel to a 0.14-m-thick solid concrete wall of length 3.05 m, at a standoff distance of 1.24 m. A stepped-frequency signal covering the 1 to 3 GHz band with a step size of 2.75 MHz was employed. Thus, at each scan position, the radar collects $\lfloor 2\ \text{GHz}/2.75\ \text{MHz}\rfloor + 1 = 728$ frequency measurements. A vertical metal dihedral was used as the target and was placed at (0, 4.4) m on the other side of the front wall. The size of each face of the dihedral is […]. The back and side walls of the room were covered with RF absorbing material to reduce clutter.
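The OMP recovery of Eq. (11) used for these results can be sketched in pure Python. The loop selects the atom most correlated with the residual, refits by least squares on the selected support, and updates the residual. It runs on a hypothetical, noiseless 1-D downrange scene. A random frequency subset stands in for the frequency-selection part of Eq. (10). The names `omp`, `inner`, and `stepped_frequency_dictionary`, the grid sizes, and the target values are assumptions for illustration, not the authors' implementation.

```python
import cmath
import math
import random


def inner(u, v):
    """Complex inner product u^H v."""
    return sum(a.conjugate() * b for a, b in zip(u, v))


def omp(A, y, n_iter, tol=1e-12):
    """Orthogonal matching pursuit for y ~= A x (A is a list of rows).

    n_iter plays the role of the assumed sparsity level (the heuristic
    stopping rule discussed in the text). Returns (support, coefficients).
    """
    m, n = len(A), len(A[0])
    cols = [[A[i][j] for i in range(m)] for j in range(n)]
    norms = [math.sqrt(inner(c, c).real) or 1.0 for c in cols]
    residual = list(y)
    support, Q, R = [], [], []          # Gram-Schmidt factors: A[:, support] = Q R
    for _ in range(min(n_iter, n)):
        # 1) atom most correlated with the current residual
        k = max((j for j in range(n) if j not in support),
                key=lambda j: abs(inner(cols[j], residual)) / norms[j])
        # 2) orthogonalize the new atom against the atoms already chosen
        r_col = [inner(q, cols[k]) for q in Q]
        v = [a - sum(r * q[i] for r, q in zip(r_col, Q)) for i, a in enumerate(cols[k])]
        nv = math.sqrt(inner(v, v).real)
        if nv < tol:                    # atom dependent on the support: stop
            break
        q_new = [a / nv for a in v]
        support.append(k)
        Q.append(q_new)
        R.append(r_col + [nv])          # R[j] is column j of the triangular factor
        # 3) least-squares residual y - Q Q^H y, updated one atom at a time
        c = inner(q_new, residual)
        residual = [r - c * qi for r, qi in zip(residual, q_new)]
        if math.sqrt(inner(residual, residual).real) < tol:
            break
    # 4) coefficients on the support: back-substitute R x = Q^H y
    b = [inner(q, y) for q in Q]
    x = [0j] * len(support)
    for i in reversed(range(len(support))):
        acc = b[i] - sum(R[j][i] * x[j] for j in range(i + 1, len(support)))
        x[i] = acc / R[i][i]
    return support, x


def stepped_frequency_dictionary(freqs, taus):
    """Rows: frequencies; columns: pixel delays; entries exp(-j 2 pi f tau)."""
    return [[cmath.exp(-2j * math.pi * f * t) for t in taus] for f in freqs]


# Hypothetical 1-D downrange example with a delay grid matched to 1 / (M * df).
M, df, f0 = 64, 2.75e6, 1e9
freqs = [f0 + m * df for m in range(M)]
taus = [k / (M * df) for k in range(M)]
s_true = {5: 1.0, 20: 0.8j, 41: -0.6}

rng = random.Random(0)
kept = sorted(rng.sample(range(M), 24))   # random rows of I_M: reduced frequency set
A = stepped_frequency_dictionary([freqs[m] for m in kept], taus)
y = [sum(row[k] * v for k, v in s_true.items()) for row in A]
support, coef = omp(A, y, n_iter=len(s_true))
print(sorted(support))
```

The least-squares refit uses incremental Gram-Schmidt, so each iteration orthogonalizes only the newly selected atom. `n_iter` is fixed here, whereas the CV-based rule mentioned above would choose it from held-out measurements. With 24 of 64 frequencies, recovering the three reflectors is typical for such partial-Fourier problems but not guaranteed.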
The empty scene without the dihedral target was also measured to enable background subtraction for wall clutter removal. The region to be imaged is centered at (0, 3.7) m and divided into […] pixels in cross-range and downrange, respectively. For CS, 20% of the frequencies and 51% of the array locations were used, which collectively represent $0.20 \times 0.51 \approx 10.2\%$ of the total data volume. Figures 2(a) and 2(c) depict the images corresponding to the full dataset obtained with back-projection and $\ell_1$-norm reconstruction, respectively. Figures 2(b) and 2(d) show the images of the measured scene obtained with back-projection and $\ell_1$-norm reconstruction, respectively, applied to the reduced background-subtracted dataset. In Fig. 2 and all subsequent figures in this paper, we plot the image intensity with the maximum intensity value in each image normalized to 0 dB. The true target position is indicated by a solid red rectangle. With the empty scene measurements available, background subtraction renders the scene sparse. The CS-based approach therefore generates an image from reduced data in which the target can be easily identified. On the other hand, back-projection applied to the reduced dataset degrades performance, as indicated by the many artifacts in the corresponding image. OMP was used to generate the CS images; for this particular example, the number of OMP iterations was set to five.

3. Effects of Walls on Compressive Sensing Solutions

The application of CS to TWRI as presented in Sec. 2 assumed prior and complete removal of front wall EM returns. Without this assumption, strong wall clutter, which extends along the range dimension, reduces the sparsity of the scene and, as such, impedes the application of CS.71–73 Access to the background scene is not always possible in practical applications. In this section, we apply joint CS and wall mitigation techniques using reduced data measurements.
In essence, we address wall clutter mitigation in the context of CS. Several approaches successfully mitigate the front wall contribution to the received signal.39,45,58–61 These approaches were originally introduced to work on the full data volume and did not account for reduced data measurements, especially randomly selected ones. We examine the performance of the subspace projection wall mitigation technique60 in conjunction with sparse image reconstruction. Only a small subset of measurements is employed for both wall clutter reduction and image formation. We consider the case where the same subset of frequencies is used for each employed antenna. Wall clutter mitigation when different frequencies are used across the employed antennas is discussed in Refs. 68 and 73. Although not reported in this paper, the spatial filtering based wall mitigation scheme45 in conjunction with CS provides performance similar to that of the subspace projection scheme.73

3.1. Wall Clutter Mitigation

We first extend the through-the-wall signal model of Eq. (2) to include the front wall return. Without the assumption of prior wall return removal, the output of the $n$'th transceiver corresponding to the $m$'th frequency for a scene of $P$ point targets is given by

$$z(m,n) = \sigma_w \exp(-j2\pi f_m \tau_w) + \sum_{p=0}^{P-1} \sigma_p \exp(-j2\pi f_m \tau_{p,n}), \tag{12}$$

where $\sigma_w$ is the complex reflectivity of the wall, and $\tau_w$ is the two-way traveling time of the signal from the $n$'th antenna to the wall, given by

$$\tau_w = \frac{2 z_{\text{off}}}{c}. \tag{13}$$

Both the target and wall reflectivities in Eq. (12) are assumed to be independent of frequency and aspect angle. For many walls and indoor targets, including humans, the reflection coefficients depend on frequency and possibly also on angle and polarization. Neglecting this dependency can be a source of error. The error can, however, be tolerated for relatively limited aperture and bandwidth. Further note that we assume a simple scene of point targets behind a front wall.
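The model can be extended to incorporate returns from more complex scenes involving multiple walls and room corners; these extensions are discussed in later sections.

From Eq. (12), we note that $\tau_w$ does not vary with the antenna location since the array is parallel to the wall. Furthermore, as the wall is homogeneous and assumed to be much larger than the antenna beamwidth, the first term in Eq. (12) assumes the same value across the array aperture. Unlike $\tau_w$, the time delay $\tau_{p,n}$, given by Eq. (3), differs for each antenna location, since the signal path from the antenna to the target changes from one antenna to the next. The signals received by the $N$ antennas at the $M$ frequencies are arranged into an $M \times N$ matrix $\mathbf{Z}$,

$$\mathbf{Z} = \left[\mathbf{z}_0 \; \mathbf{z}_1 \; \cdots \; \mathbf{z}_{N-1}\right], \tag{14}$$

where $\mathbf{z}_n$ is the vector containing the stepped-frequency signal received by the $n$'th antenna,

$$\mathbf{z}_n = \left[z(0,n) \; z(1,n) \; \cdots \; z(M-1,n)\right]^T, \tag{15}$$

with $z(m,n)$ given by Eq. (12). The eigen-structure of the imaged scene is obtained by performing the SVD of $\mathbf{Z}$,

$$\mathbf{Z} = \mathbf{U}\boldsymbol{\Lambda}\mathbf{V}^H, \tag{16}$$

where $H$ denotes the Hermitian transpose, and $\mathbf{U}$ and $\mathbf{V}$ are unitary matrices containing the left and right singular vectors, respectively. $\boldsymbol{\Lambda}$ is the $M \times N$ rectangular diagonal matrix

$$\boldsymbol{\Lambda} = \begin{bmatrix} \operatorname{diag}(\lambda_1, \lambda_2, \ldots, \lambda_N) \\ \mathbf{0} \end{bmatrix}, \tag{17}$$

in which $\lambda_1 \ge \lambda_2 \ge \cdots \ge \lambda_N$ are the singular values and $\mathbf{0}$ is an $(M-N) \times N$ zero block. Without loss of generality, the number of frequencies is assumed to exceed the number of antenna locations, i.e., $M > N$. The subspace projection method assumes that the wall returns and the target reflections lie in different subspaces. Therefore, the first $K$ dominant singular vectors of $\mathbf{Z}$ are used to construct the wall subspace,

$$\mathbf{S}_{\text{wall}} = \sum_{i=1}^{K} \mathbf{u}_i \mathbf{v}_i^H. \tag{18}$$

Methods for determining the dimensionality $K$ of the wall subspace have been reported in Refs. 59 and 60. The projection matrix onto the subspace orthogonal to the wall subspace is

$$\mathbf{P}_{\text{wall}}^{\perp} = \mathbf{I} - \mathbf{S}_{\text{wall}}\mathbf{S}_{\text{wall}}^H, \tag{19}$$

where $\mathbf{I}$ is the $M \times M$ identity matrix. To mitigate the wall returns, the data matrix is projected onto the orthogonal subspace,60

$$\tilde{\mathbf{Z}} = \mathbf{P}_{\text{wall}}^{\perp}\mathbf{Z}. \tag{20}$$

The resulting data matrix has little or no contribution from the front wall.

3.2. Joint Wall Mitigation and CS

The subspace projection method for wall clutter reduction relies on the fact that the wall reflections are strong and assume very close values at the different antenna locations.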
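The wall-subspace projection can be sketched in pure Python as below. The sketch rests on these assumptions, which are also noted in the code comments:

- a one-dimensional wall subspace ($K = 1$);
- power iteration with deflation on $\mathbf{Z}\mathbf{Z}^H$ in place of a full SVD, which yields the same dominant left singular vectors when the wall singular value is well separated;
- a free-space target delay;
- hypothetical frequencies, antenna positions, and amplitudes.

The helper names are illustrative.

```python
import cmath
import math
import random


def inner(u, v):
    """Complex inner product u^H v."""
    return sum(a.conjugate() * b for a, b in zip(u, v))


def wall_subspace(Z, K, iters=200, seed=0):
    """Approximate top-K left singular vectors of Z (M x N, list of rows).

    Assumption: power iteration with deflation on G = Z Z^H stands in for a
    full SVD; it converges to the dominant singular vectors when the wall
    singular values are well separated from the target ones.
    """
    M, N = len(Z), len(Z[0])
    G = [[sum(Z[i][n] * Z[k][n].conjugate() for n in range(N)) for k in range(M)]
         for i in range(M)]
    rng = random.Random(seed)
    basis = []
    for _ in range(K):
        u = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(M)]
        for _ in range(iters):
            u = [sum(G[i][k] * u[k] for k in range(M)) for i in range(M)]  # u <- G u
            for b in basis:                                  # deflate found vectors
                c = inner(b, u)
                u = [ui - c * bi for ui, bi in zip(u, b)]
            nu = math.sqrt(inner(u, u).real)
            if nu == 0.0:
                return basis                                 # rank(Z) < K
            u = [ui / nu for ui in u]
        basis.append(u)
    return basis


def project_out_wall(Z, basis):
    """Apply (I - sum_i u_i u_i^H) to every column z_n of Z."""
    M, N = len(Z), len(Z[0])
    out = [row[:] for row in Z]
    for n in range(N):
        col = [Z[i][n] for i in range(M)]
        for u in basis:
            c = inner(u, col)
            col = [a - c * ui for a, ui in zip(col, u)]
        for i in range(M):
            out[i][n] = col[i]
    return out


def fro(Z):
    """Frobenius norm."""
    return math.sqrt(sum(abs(a) ** 2 for row in Z for a in row))


# Hypothetical data following the wall-plus-target model of Eq. (12).
C = 299_792_458.0
freqs = [1e9 + m * 62.5e6 for m in range(32)]   # M = 32 frequencies (> N)
ants = [-0.3 + 0.1 * n for n in range(7)]        # N = 7 antenna positions (m)
tau_w = 2 * 1.24 / C                             # wall delay: same at every antenna


def target_delay(x_n):
    # Free-space stand-in for tau_{p,n}; varies with the antenna position.
    return 2 * math.hypot(x_n - 0.2, 4.0) / C


Z = [[10.0 * cmath.exp(-2j * math.pi * f * tau_w)
      + cmath.exp(-2j * math.pi * f * target_delay(x)) for x in ants]
     for f in freqs]
Z_clean = project_out_wall(Z, wall_subspace(Z, K=1))
print(round(fro(Z), 2), round(fro(Z_clean), 2))
```

Only the dominant left singular vectors are needed, because projecting onto the orthogonal complement of the wall subspace amounts to computing $(\mathbf{I} - \sum_{i=1}^{K}\mathbf{u}_i\mathbf{u}_i^H)\mathbf{Z}$. A pure-wall matrix is therefore annihilated, while the antenna-dependent target term survives the projection.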
When the same set of frequencies is used at all employed antennas, the spatial invariance of the wall reflections is maintained.72,73 This permits direct application of the subspace projection method as a preprocessing step to the $\ell_1$-norm-based scene reconstruction of Eq. (11).

3.3. Illustrative Results

We consider the same experimental setup as in Sec. 2.3. Figure 3(a) shows the result obtained with $\ell_1$-norm reconstruction using 10.2% of the raw data volume without background subtraction. The number of OMP iterations was set to 100. Comparing Fig. 3(a) with the corresponding background-subtracted image of Fig. 2(d), it is evident that, without access to the background scene, wall mitigation techniques must be applied before CS in order to detect the targets behind the wall.

Fig. 3 CS-based imaging result (a) using full data volume without background subtraction; (b) using 10% data volume with the same frequency set at each antenna.

We now consider the case when the same set of reduced frequencies is used for a reduced set of antenna locations. We employ only 10.2% of the data volume, i.e., 20% of the available frequencies and 51% of the antenna locations. The subspace projection method is applied to a matrix of reduced dimension $Q_1 \times Q_2$, where $Q_1$ and $Q_2$ are the numbers of retained frequencies and antenna locations, respectively. The corresponding $\ell_1$-norm reconstructed image obtained with OMP is depicted in Fig. 3(b). Even when both spatial and frequency observations are reduced, the joint application of wall clutter mitigation and CS successfully suppresses the front wall clutter and unmasks the target.

4. Designated Dictionary for Wall Detection

In this section, we address the problem of imaging building interior structures using a reduced set of measurements. We consider interior walls as targets of interest and attempt to reveal the building interior layout based on CS techniques.
Construction practices suggest that exterior and interior walls are parallel or perpendicular to each other. This enables sparse scene representations using a dictionary of possible wall orientations and locations.76 Conventional CS recovery algorithms can then be applied to a reduced number of observations to recover the positions of the various walls, which is a primary goal in TWRI.

4.1. Signal Model Under Multiple Parallel Walls

Consider a monostatic stepped-frequency SAR system with $N$ antenna positions located parallel to the front wall, as shown in Fig. 1. We extend the signal model in Eq. (12) to include reflections from multiple parallel interior walls, in addition to the returns from the front wall and the point targets. That is, the received signal at the $n$'th antenna location corresponding to the $m$'th frequency can be expressed as

$$z(m,n) = \sigma_w \exp(-j2\pi f_m \tau_w) + \sum_{i=0}^{I_w-1} \sigma_{w_i} \exp(-j2\pi f_m \tau_{w_i}) + \sum_{p=0}^{P-1} \sigma_p \exp(-j2\pi f_m \tau_{p,n}), \tag{21}$$

where $I_w$ is the number of interior walls parallel to the array axis, $\tau_{w_i}$ represents the two-way traveling time of the signal from the $n$'th antenna to the $i$'th interior wall, and $\sigma_{w_i}$ is the complex reflectivity of the $i$'th interior wall. As with the front wall, the delays $\tau_{w_i}$ are independent of the antenna index $n$, as evident in the subscripts.

The above model contains contributions only from interior walls parallel to the front wall and the antenna array. This is because wall reflections are specular, so a SAR system located parallel to the front wall receives direct returns only from walls that are also parallel to the front wall. Perpendicular walls can be detected in two ways: by concurrently detecting and locating the canonical scattering mechanism of the corner features created where the walls of a room meet, or by having access to another side of the building. Extension of the signal model to incorporate corner returns is reported in Ref. 76.

Instead of the point-target based sensing matrix described in Eq. (7), where each antenna accumulates the contributions of all the pixels, we use an alternate sensing matrix, proposed in Ref. 68, to relate the scene vector $\mathbf{s}$ and the observation vector $\mathbf{z}$. This matrix emphasizes the specular reflections produced by the walls. Because of these specular reflections, and since the array is assumed parallel to the front wall and thus to the interior walls, the rays collected at the $n$'th antenna are produced only by the portions of the walls directly in front of that antenna [see Fig. 4(a)]. The alternate matrix therefore considers only the contributions of the pixels located in front of each antenna. In so doing, it emphasizes the returns of the walls parallel to the array axis. As such, it is best suited to the specific problem of imaging building structure, wherein the signal returns are mainly caused by EM reflections from exterior and interior walls. The alternate linear model can be expressed as

$$\mathbf{z} = \bar{\boldsymbol{\Psi}}\mathbf{s}, \tag{22}$$

where

$$\bar{\boldsymbol{\Psi}} = \left[\bar{\boldsymbol{\Psi}}_0^T \; \bar{\boldsymbol{\Psi}}_1^T \; \cdots \; \bar{\boldsymbol{\Psi}}_{N-1}^T\right]^T, \tag{23}$$

with $\bar{\boldsymbol{\Psi}}_n$ defined as

$$[\bar{\boldsymbol{\Psi}}_n]_{m,k} = \exp(-j2\pi f_m \tau_k)\,\mathcal{F}_k(n). \tag{24}$$

In Eq. (24), $\tau_k$ is the two-way signal propagation time associated with the downrange of the $k$'th pixel, and the function $\mathcal{F}_k(n)$ works as an indicator function in the following way:

$$\mathcal{F}_k(n) = \begin{cases} 1, & \text{if the } k\text{'th pixel is in front of the } n\text{'th antenna},\\ 0, & \text{otherwise}. \end{cases} \tag{25}$$

That is, let $x_k$ and $x_n$ represent the cross-range coordinates of the $k$'th pixel and the $n$'th antenna location, respectively, and let $\Delta x$ be the cross-range sampling step. Then $\mathcal{F}_k(n) = 1$ provided that $|x_k - x_n| \le \Delta x/2$ [see Fig. 4(b)].

4.2. Sparsifying Dictionary for Wall Detection

The number of parallel walls is typically much smaller than the number of downrange resolution cells spanning the building. The decomposition of the image into parallel walls can therefore be considered sparse. Although other indoor targets, such as furniture and humans, may be present, their projections onto the horizontal lines are expected to be negligible compared with those of the walls. In order to obtain a linear matrix-vector relation between the scene and the horizontal projections, we define a sparsifying matrix $\mathbf{W}$ composed of possible wall locations.
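The indicator-masked sensing matrix of the alternate model can be sketched as follows. For each antenna, it keeps only the phase terms of the pixels in front of that antenna. It stacks the per-antenna blocks in the same antenna-by-antenna order as the full measurement vector. Several choices here are assumptions for illustration:

- the "in front of" test $|x_k - x_n| \le \Delta x/2$;
- the pixel list format of (cross-range, two-way delay) pairs;
- the helper name `specular_sensing_matrix`;
- the toy geometry.

```python
import cmath
import math


def specular_sensing_matrix(freqs, antenna_x, pixels, dx):
    """Indicator-masked sensing matrix, rows stacked antenna by antenna.

    pixels: list of (x_k, tau_k), where tau_k is the two-way delay associated
    with the pixel's downrange. Entry = exp(-j 2 pi f_m tau_k) when the pixel
    lies in front of antenna n (assumed test: |x_k - x_n| <= dx / 2), else 0.
    """
    rows = []
    for x_n in antenna_x:
        for f in freqs:
            rows.append([cmath.exp(-2j * math.pi * f * tau) if abs(x_k - x_n) <= dx / 2
                         else 0j
                         for x_k, tau in pixels])
    return rows


if __name__ == "__main__":
    C = 299_792_458.0
    # Hypothetical grid: 3 antennas, 2 frequencies, pixels at 3 cross-ranges x 2 downranges.
    ants = [-0.5, 0.0, 0.5]
    pixels = [(x, 2 * z / C) for z in (3.0, 5.0) for x in ants]
    Psi_bar = specular_sensing_matrix([1e9, 2e9], ants, pixels, dx=0.5)
    for row in Psi_bar:
        print(["x" if v != 0 else "." for v in row])   # nonzero pattern per row
```

The resulting matrix is mostly zeros. Each antenna's block couples only to the column of pixels directly in front of it, which is what lets a wall parallel to the array appear as a consistent return across many antennas.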
Specifically, each column of the dictionary $\mathbf{W}$ represents an image containing a single wall of length $l_x$ pixels, located at a specific cross-range and a specific downrange in the image. Consider the cross-range to be divided into $N_b$ nonoverlapping blocks of $l_x$ pixels each [see Fig. 5(a)], and the downrange division to be defined by the pixel grid. The number of blocks $N_b$ ($= N_x/l_x$) is determined by the value of $l_x$, which is the minimum expected wall length in the scene. Therefore, the dimension of $\mathbf{W}$ is $N_xN_z \times N_bN_z$, where the product $N_bN_z$ denotes the number of possible wall locations. Figure 5(b) shows a simplified scheme of the sparsifying dictionary generation. The projection associated with each wall location is given by

$$r(b, l) = \sum_{k_x \in \mathcal{B}_b} s(k_x, l), \tag{26}$$

where $\mathcal{B}_b$ indicates the $b$'th cross-range block and $l = 0, 1, \ldots, N_z - 1$ is the downrange pixel index. Defining

$$\mathbf{r} = \left[r(0,0) \; r(0,1) \; \cdots \; r(N_b-1, N_z-1)\right]^T, \tag{27}$$

the linear system of equations relating the observed data $\mathbf{z}$ and the sparse vector $\mathbf{r}$ is given by

$$\mathbf{z} = \bar{\boldsymbol{\Psi}}\mathbf{W}\mathbf{r}. \tag{28}$$

In practice, because the system collects signal reflections at zero aspect angle, any interior wall outside the synthetic array extent will not be visible to the system. Finally, the CS image is obtained in two steps. First, the projection vector $\mathbf{r}$ is recovered via $\ell_1$-norm minimization with a reduced set of measurements. Then the product $\mathbf{W}\hat{\mathbf{r}}$ is formed.

We are implicitly assuming that the extents of the walls in the scene are integer multiples of the block of $l_x$ pixels. If this condition is not satisfied, the error in determining the wall extent will be at most equal to the chosen block size. Incorporating corner effects will help resolve this issue, since localizing the corners identifies the wall extent.76

4.3. Illustrative Results

A through-the-wall SAR system was set up in the Radar Imaging Lab, Villanova University. A stepped-frequency signal consisting of 335 frequencies covering the 1 to 2 GHz band was used to interrogate the scene. A monostatic synthetic aperture array consisting of 71 element locations with an inter-element spacing of 2.2 cm was employed.
The scene consisted of two parallel plywood walls, each 2.25 cm thick, 1.83 m wide, and 2.43 m high. Both walls were centered at 0 m in cross-range. The first and second walls were located at distances of 3.25 and 5.1 m, respectively, from the antenna baseline. Figure 6(a) depicts the geometry of the experimental scene. The region to be imaged is chosen to be […], centered at (0, 4.23) m, and is divided into […] pixels. For the CS approach, we use a uniform subset of only 84 frequencies at each of 18 uniformly spaced antenna locations, which represents $(84/335) \times (18/71) \approx 6.4\%$ of the full data volume. The CS reconstructed image is shown in Fig. 6(b). The proposed algorithm was able to reconstruct both walls. However, as can be observed in Fig. 6(b), ghost walls appear immediately behind each true wall position. These ghosts are attributed to dihedral-type reflections from the wall-floor junctions.

5. CS and Multipath Exploitation

In this section, we consider the problem of multipath in view of the requirements of fast data acquisition and reduced measurements. Multipath ghosts may make a sparse scene appear populated and, at a minimum, render the scene less sparse, degrading the performance of CS-based reconstruction. We present a CS method that directly incorporates multipath exploitation into sparse signal reconstruction for imaging of stationary scenes with a stepped-frequency monostatic SAR. Prior knowledge of the building layout is assumed. The propagation delays corresponding to different multipath returns are calculated for each assumed target position. The multipath returns associated with reflections from the same wall are then grouped together and represented by one measurement matrix. This allows CS solutions to focus the returns on the true target positions without ghosting.
Although not considered in this section, clutter due to front wall reverberations can be mitigated by adapting a similar multipath formulation. That formulation maps back the multiple reflections within the wall after the wall and target returns have been separated.100

5.1. Multipath Propagation Model

We refer to the signal that propagates from the antenna through the front wall to the target and back to the antenna as the direct target return. Multipath propagation corresponds to indirect paths by which the signal may reach the target, involving reflections at one or more interior walls. Multipath can also arise from reflections off the floor and ceiling and from interactions among different targets. Considering wall reflections and assuming diffuse target scattering, there are two typical multipath cases.

In the first case, the wave traverses a path consisting of two parts: the propagation path to the target and back to the receiver, and a round-trip path from the target to an interior wall. Because the signal weakens at each secondary wall reflection, this case can usually be neglected. Furthermore, except when the target is close to an interior wall, the corresponding propagation delay is large. It would most likely equal the direct-path delay of a target lying outside the perimeter of the room being imaged. Thus, if necessary, this type of multipath can be gated out.

The second case is a bistatic scattering scenario, in which transmission and reception take place along different paths. This is the dominant multipath case: one path is the direct propagation to or from the target, and the other involves a secondary reflection at an interior wall. Higher-order multipath returns are possible as well. Signals reaching the target can also undergo multiple reflections within the front wall; we refer to these as wall-ringing multipaths.
The reflection at the interior wall can also occur at the outer wall-air interface. This, however, results in additional attenuation and can therefore be neglected. To derive the multipath signal model, we assume perfect knowledge of the front wall (location, thickness, and dielectric constant) and of the locations of the interior walls.

5.1.1. Interior wall multipath

Consider the antenna-target geometry illustrated in Fig. 7(a), where the front wall has been ignored for simplicity. A target is located at a given position, and an interior wall lies parallel to one of the coordinate axes at a known location. Multipath propagation consists of two parts: the forward propagation from the antenna to the target along one path, and the return from the target via a reflection at the interior wall along a second path. Assuming specular reflection at the wall interface, Fig. 7(a) shows that reflecting the return path about the interior wall yields an alternative antenna-target geometry. This produces a virtual target at the mirror image of the true target with respect to the interior wall. The delay along the return path equals that of the straight path from the virtual target to the antenna. This correspondence simplifies the calculation of the one-way propagation delay along the return path. The same principle applies to multipath via any interior wall.

Fig. 7 (a) Multipath propagation via reflection at an interior wall; (b) wall-ringing propagation with internal bounces.

From the position of the virtual target of an assumed target location, we can calculate the propagation delay along the return path as follows. Under free-space propagation, the delay is simply the Euclidean distance from the virtual target to the receiver divided by the propagation speed of the wave. In the TWRI scenario, however, the wave must pass through the front wall on its way from the virtual target to the receiver.
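The virtual-target argument can be checked numerically with the short sketch below. It assumes free-space propagation with the front wall ignored, as in Fig. 7(a), and a hypothetical interior wall occupying the plane x = `x_wall`, with coordinates given as (cross-range, downrange). The helper names are illustrative. The delay computed from the mirrored target should equal the length of the explicit specular bounce path divided by the speed of light.

```python
import numpy as np

C0 = 299_792_458.0  # free-space speed of light (m/s)

def mirror_about_wall(target, x_wall):
    """Virtual target: mirror image of the target about the wall plane x = x_wall."""
    x, z = target
    return np.array([2.0 * x_wall - x, z])

def wall_bounce_delay(antenna, target, x_wall):
    """One-way delay target -> interior wall -> antenna, via the virtual target."""
    virtual = mirror_about_wall(target, x_wall)
    return np.linalg.norm(virtual - np.asarray(antenna, float)) / C0

def explicit_bounce_length(antenna, target, x_wall):
    """Same path length, computed from the specular reflection point on the wall."""
    a = np.asarray(antenna, float)
    t = np.asarray(target, float)
    v = mirror_about_wall(t, x_wall)
    s = (x_wall - a[0]) / (v[0] - a[0])  # where the antenna-virtual line meets x = x_wall
    p = a + s * (v - a)                  # specular reflection point
    return np.linalg.norm(t - p) + np.linalg.norm(p - a)

antenna, target, x_wall = (0.0, 0.0), (1.0, 4.0), 2.5  # hypothetical geometry (m)
tau = wall_bounce_delay(antenna, target, x_wall)
length = explicit_bounce_length(antenna, target, x_wall)
print(length, tau * C0)  # both about 5.657 m
```

Adding the through-wall refraction of Ref. 28 would replace the straight-line distance with a Snell's-law path. The mirroring step itself is unchanged.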
Since the front wall parameters are assumed to be known, the delay can be readily calculated from geometric considerations using Snell’s law.28

5.1.2. Wall ringing multipath

Figure 7(b) illustrates the effect of wall ringing on the target image by depicting the wall and the incident, reflected, and refracted waves. The cross-range distance between the target and the array element can be expressed [Eq. (29)] in terms of two quantities:

- the downrange distance between the target and the array element;
- the angles in the air and in the wall medium.

An integer denotes the number of internal reflections within the wall; the value zero describes the direct path derived in Ref. 28. Snell’s law [Eq. (30)] relates the angles in the air and in the wall. Equations (29) and (30) form a nonlinear system of equations that can be solved numerically for the unknown angles, e.g., using Newton’s method. With the incidence and refraction angles known, the one-way propagation delay associated with the wall-ringing multipath can then be expressed directly.101

5.2. Received Signal Model

Having described the two principal multipath mechanisms in TWRI, namely interior-wall and wall-ringing multipath, we now develop a multipath model for the received signal. We assume that the front wall returns have been suppressed, so that the measured data contain only target returns. The case in which wall returns are present in the measurements is discussed in Ref. 100. Each path from the transmitter to a target and back to the receiver can be divided into two parts: the partial path from the transmitter to the scattering target, and the return path back to the receiver. For each target-transceiver combination, the interior-wall and wall-ringing multipath phenomena produce a number of feasible forward and return partial paths. Any combination of a forward and a return partial path results in a round-trip path.
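The sketch below shows the Newton solution of the wall-ringing geometry described in Sec. 5.1.2. Because the exact forms of Eqs. (29) and (30) are not reproduced here, it relies on assumptions that are ours and may differ from the original:

- The ray crosses a wall of thickness `d` and relative permittivity `eps_r` exactly 1 + 2k times, where `k` counts internal round trips.
- The air segments span a total downrange distance `dz - d`.
- The geometry therefore satisfies dx = (dz - d) tan(theta_a) + (1 + 2k) d tan(theta_w), together with Snell’s law sin(theta_a) = sqrt(eps_r) sin(theta_w).

A bracketed (safeguarded) Newton iteration keeps the refracted angle physical.

```python
import math

C0 = 299_792_458.0  # free-space speed of light (m/s)

def wall_ringing_delay(dx, dz, d, eps_r, k, tol=1e-12, max_iter=100):
    """Solve for (theta_w, theta_a) and return them with the one-way delay.

    Assumed geometry: dx = (dz - d) tan(theta_a) + (1 + 2k) d tan(theta_w),
    sin(theta_a) = sqrt(eps_r) sin(theta_w). Returns (theta_w, theta_a, delay).
    """
    n = math.sqrt(eps_r)
    m = 1 + 2 * k  # number of wall crossings (assumed counting convention)
    lo, hi = 0.0, (math.asin(1.0 / n) if n > 1.0 else math.pi / 2)

    def air_angle(tw):
        return math.asin(min(1.0, n * math.sin(tw)))

    def f(tw):
        return (dz - d) * math.tan(air_angle(tw)) + m * d * math.tan(tw) - dx

    def fprime(tw):
        ta = air_angle(tw)
        dta = n * math.cos(tw) / math.cos(ta)  # d(theta_a)/d(theta_w) from Snell's law
        return (dz - d) * dta / math.cos(ta) ** 2 + m * d / math.cos(tw) ** 2

    tw = 0.0
    for _ in range(max_iter):
        val = f(tw)
        if abs(val) < tol:
            break
        # f increases with tw, so the sign of f tightens the bracket [lo, hi].
        if val < 0:
            lo = tw
        else:
            hi = tw
        step = tw - val / fprime(tw)
        # Accept the Newton step only inside the bracket; otherwise bisect.
        tw = step if lo < step < hi else 0.5 * (lo + hi)
    ta = air_angle(tw)
    delay = (dz - d) / (C0 * math.cos(ta)) + m * d * n / (C0 * math.cos(tw))
    return tw, ta, delay

_, _, tau0 = wall_ringing_delay(dx=1.0, dz=3.0, d=0.2, eps_r=6.0, k=0)
tw1, ta1, tau1 = wall_ringing_delay(dx=1.0, dz=3.0, d=0.2, eps_r=6.0, k=1)
```

With `eps_r = 1` and `k = 0`, the solver reduces to the straight-line delay, which is a convenient sanity check.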
We can establish a function that maps the index of each round-trip path to the corresponding pair of partial-path indices. Hence, the maximum number of possible round-trip paths for each target-transceiver pair is the product of the numbers of forward and return partial paths. In practice, the number of paths actually considered is smaller: some round-trip paths are equal due to symmetry, while others are strongly attenuated and can be neglected. By convention, the first path index refers to the direct round-trip path. The round-trip delay along a path is the sum of the delays along its forward and return partial paths.

We also associate a complex amplitude with each possible path of a given target. The amplitude of the direct path, which is typically the strongest in TWRI, is normalized to one. Without loss of generality, we assume the same number of propagation paths for each target; the unavailability of a path for a particular target is reflected by a zero path amplitude. The received signal at a given antenna and frequency can therefore be expressed as a superposition over all targets and all paths [Eq. (33)]. Each term is the target reflectivity, weighted by the path amplitude and by a phase term determined by the round-trip delay of that path.

Since the bistatic radar cross-section (RCS) of a target can differ from its monostatic RCS, the target reflectivity is considered to depend on the propagation path. For convenience, the path amplitude in Eq. (33) can be absorbed into this path-dependent reflectivity, leading to Eq. (34). Equation (34) generalizes the multipath-free propagation model in Eq. (2): if the number of propagation paths is set to one, the two models are equivalent.

The matrix-vector form of the received signal under multipath propagation is given in Eq. (35), in which each propagation path contributes its own dictionary matrix and scene vector. An element of the scene vector for a given path takes the corresponding path-dependent reflectivity value if a point target exists at that pixel; otherwise, it is zero. Finally, the reduced measurement vector is obtained from Eq. (35) by applying the measurement matrix defined in Eq.
(10).

5.3. Sparse Scene Reconstruction with Multipath Exploitation

Within the CS framework, we aim to undo the ghosts: that is, to invert the multipath measurement model and obtain a reconstruction in which only the true targets remain. In practice, prior knowledge of the exact relationship between the subimages of the sparse scene is limited or nonexistent. However, we know with certainty that the subimages describe the same underlying scene, so their supports are the same, or at least approximately so. This common-support property suggests a group sparse reconstruction. All unknown vectors in Eq. (35) can be stacked into a tall vector whose length is the number of pixels multiplied by the number of propagation paths. The reduced measurement vector can then be expressed [Eq. (38)] as the product of the correspondingly concatenated matrix and this tall vector.

We proceed to reconstruct the images from the reduced measurements under the model in Eq. (38). It has been shown that a group sparse reconstruction can be obtained by mixed ℓ1/ℓ2-norm regularization.102–105 We therefore solve Eq. (39), which balances the measurement residual against the mixed norm of the stacked vector through a regularization parameter. As defined in Eq. (40), the mixed norm behaves like an ℓ1 norm on the vector of per-pixel ℓ2 norms taken across the subimages, and therefore induces group sparsity. In other words, each of these group norms, and equivalently each group of co-located pixels across the subimages, is encouraged to be zero. Within the groups, on the other hand, the ℓ2 norm does not promote sparsity.106 The convex optimization problem in Eq. (39) can be solved using SpaRSA,102 YALL1-group,103 or other available schemes.105,107 Once a solution is obtained, the subimages can be noncoherently combined into an overall image with an improved signal-to-noise-and-clutter ratio (SCNR), with the elements of the composite image defined in Eq. (41).

5.4. Illustrative Results

An experiment was conducted in a semi-controlled environment at the Radar Imaging Lab, Villanova University. A single aluminum pipe (61 cm long, 7.6 cm in diameter) was placed upright on a 1.2-m-high foam pedestal at 3.67 m downrange and 0.31 m cross-range, as shown in Fig. 8.
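The multipath model of Sec. 5.2 and the group-sparse reconstruction of Sec. 5.3 can be sketched together in Python/NumPy. This is a simplified illustration, not the authors' code, and it departs from the original in three labeled ways:

- It uses a plain proximal-gradient (ISTA) iteration with group soft-thresholding, rather than SpaRSA or YALL1-group.
- The per-path delays are random placeholders standing in for the ray-traced interior-wall and wall-ringing delays.
- The noncoherent combination (root-sum-square across paths) is one plausible choice and may differ from Eq. (41).

All names are hypothetical.

```python
import numpy as np

def multipath_dictionary(freqs, delays):
    """Stack one stepped-frequency dictionary per path: Phi = [Psi_0, ..., Psi_{R-1}].

    delays has shape (R, N, Q): round-trip delay of path r, antenna n, pixel q.
    Psi_r[(n, m), q] = exp(-j 2 pi f_m tau_r(n, q)).
    """
    R, N, Q = delays.shape
    phase = -2j * np.pi * freqs[None, None, :, None] * delays[:, :, None, :]
    psi = np.exp(phase).reshape(R, N * len(freqs), Q)
    return np.concatenate(list(psi), axis=1)

def group_sparse_ista(Phi, y, R, lam, n_iter=3000):
    """ISTA for min 0.5||y - Phi x||^2 + lam * sum_q ||(x_0[q], ..., x_{R-1}[q])||_2."""
    Q = Phi.shape[1] // R
    step = 1.0 / np.linalg.norm(Phi, 2) ** 2  # inverse Lipschitz constant of the gradient
    x = np.zeros(Phi.shape[1], dtype=complex)
    for _ in range(n_iter):
        v = (x - step * (Phi.conj().T @ (Phi @ x - y))).reshape(R, Q)
        norms = np.linalg.norm(v, axis=0)            # one l2 norm per pixel group
        shrink = np.maximum(0.0, 1.0 - step * lam / np.maximum(norms, 1e-15))
        x = (v * shrink).ravel()                      # group soft-thresholding
    return x.reshape(R, Q)

rng = np.random.default_rng(0)
freqs = np.linspace(1e9, 3e9, 20)                      # illustrative frequency set
R, N, Q = 2, 4, 50                                     # paths, antennas, pixels
delays = rng.uniform(20e-9, 40e-9, size=(R, N, Q))     # placeholder delays
Phi = multipath_dictionary(freqs, delays)

support = np.array([5, 17, 33])
X_true = np.zeros((R, Q), dtype=complex)
X_true[0, support] = 1.0                                              # direct path
X_true[1, support] = 0.5 * np.exp(2j * np.pi * rng.uniform(size=3))  # wall multipath
y = Phi @ X_true.ravel()

lam = 0.05 * np.max(np.linalg.norm((Phi.conj().T @ y).reshape(R, Q), axis=0))
X_hat = group_sparse_ista(Phi, y, R, lam)
composite = np.sqrt(np.sum(np.abs(X_hat) ** 2, axis=0))  # noncoherent combination
print(sorted(np.argsort(composite)[-3:].tolist()))
```

Group-wise shrinkage zeroes a pixel in all subimages at once, which mirrors the common-support assumption stated above.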
A 77-element uniform linear monostatic array with an inter-element spacing of 1.9 cm was used for imaging. The origin of the coordinate system is at the center of the array. The 0.2-m-thick concrete front wall was parallel to the array at 2.44 m downrange. The left side wall bounded the room on the left, and the back wall was at 6.37 m downrange (see Fig. 8). In addition, there was a protruding corner on the right at 3.4 m cross-range and 4.57 m downrange. A stepped-frequency signal of 801 equally spaced frequency steps covering the 1 to 3 GHz band was employed. The left and right side walls were covered with RF absorbing material, but the protruding right corner and the back wall were left uncovered. We use background-subtracted data to focus only on target multipath. Figure 9(a) depicts the backprojection image obtained with all available data. Only the multipath ghosts due to the back wall and the protruding corner at the back right are visible. Hence, we consider only these two multipath propagation cases in the group sparse CS scheme. We use 25% of the array elements and 50% of the frequencies. The corresponding CS reconstruction, shown in Fig. 9(b), shows that the multipath ghosts have been clearly suppressed.

6. CS-Based Change Detection for Moving Target Localization

In this section, we consider sparsity-driven CD for human motion indication in TWRI applications. CD can be used in lieu of Doppler processing: motion detection is accomplished by subtracting data frames acquired over successive probings of the scene. In so doing, CD mitigates the heavy clutter caused by strong reflections from exterior and interior walls. It also removes stationary objects inside the enclosed structure, thereby rendering a densely populated scene sparse.7,9,10 As a result, CS techniques can be exploited to reduce the data volume.
We assume a multistatic imaging system with physical transmit and receive apertures and a wideband transmit pulse. We establish a CD model for translational motion that permits linear modeling with sensing matrices, so that CS can be applied for scene reconstruction. Other types of human motion involving sudden short movements of the limbs, head, and/or torso are discussed in Ref. 70.

6.1. Signal Model

Consider wideband radar operation with multiple transmitters and receivers. The transmitters are multiplexed sequentially, with simultaneous reception at multiple receivers, so the signal model can be developed for a single active transmitter at a time. The time interval for each data frame is assumed to be a fraction of a second, so that a moving target appears stationary during each data collection interval. A wideband baseband signal is used to interrogate the scene. Consider a single point target of given reflectivity located behind a wall. The pulse emitted by a transmitter is received at a receiver in the form of Eq. (42), which has two components. The first is the target term: the reflectivity multiplied by the baseband pulse delayed by the propagation delay and by a phase factor set by the carrier frequency and that delay. The second is the contribution of the stationary background for that transmitter-receiver pair. The propagation delay consists of components corresponding to the distances traveled before, through, and after the wall, similar to Eq. (3).

In its simplest form, CD is achieved by coherent subtraction of the data from two data frames, which may be consecutive or separated by one or more frames. The subtraction is performed for each range bin. CD yields the set of difference signals in Eq. (43), indexed by the number of frames separating the two acquisitions.
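The coherent subtraction just described can be illustrated with a toy simulation in Python/NumPy. All numbers are hypothetical apart from the 3 GHz carrier borrowed from Sec. 6.3. A static background (wall and clutter) appears identically in both frames, and a point target shifts its delay between frames. The difference signal cancels the background and leaves the target at both of its positions.

```python
import numpy as np

FC = 3e9          # carrier frequency (Hz), as in the system of Sec. 6.3
SIGMA = 0.3e-9    # hypothetical Gaussian pulse width parameter (s)

def echo(t, tau, refl):
    """Baseband point-scatterer return: reflectivity x delayed pulse x carrier phase."""
    envelope = np.exp(-0.5 * ((t - tau) / SIGMA) ** 2)
    return refl * envelope * np.exp(-2j * np.pi * FC * tau)

t = np.arange(0.0, 60e-9, 0.05e-9)                       # fast-time samples
background = echo(t, 8e-9, 5.0) + echo(t, 12e-9, 3.0)     # walls and static clutter
frame1 = background + echo(t, 30e-9, 0.2)                 # person, earlier frame
frame2 = background + echo(t, 34e-9, 0.2)                 # person, later frame
diff = frame1 - frame2                                    # coherent CD, sample by sample

early = np.abs(diff[t < 20e-9]).max()    # residual of the stationary background
i30 = np.argmin(np.abs(t - 30e-9))
print(early, np.abs(diff[i30]))
```

The difference signal keeps the target at both positions with opposite signs. This is why a translating target appears twice in the coherent CD image, as noted in Sec. 6.2.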
The component of the radar return from the stationary background is the same over the two time intervals and is therefore removed in the difference signal. Using Eqs. (42) and (43), the difference signal for each transmitter-receiver pair can be expressed as in Eq. (44). That expression involves the two-way propagation delays between the transmitter, the target, and the receiver during the first and second data acquisitions, respectively.

6.2. Sparsity-Driven Change Detection under Translational Motion

Consider the difference signal in Eq. (44) for a target undergoing translational motion. Two nonconsecutive data frames separated by a relatively long time interval are used (Ref. 108). In this case, the target changes its range gate position during the time elapsed between the two data acquisitions. As seen from Eq. (44), the moving target then presents itself as two targets: one at its position during the first data frame and the other at its position during the second. The imaged target at the reference position corresponding to the first data frame cannot be suppressed with the coherent CD approach. In contrast, the noncoherent CD approach operates on differences of the image magnitudes of the two data frames and allows suppression of the reference image through a zero-thresholding operation.23 However, the noncoherent approach requires scene reconstruction before CD. It is therefore not a feasible option for sparsity-based imaging, which relies on coherent CD to render the scene sparse. We therefore rewrite Eq. (44) in the form of Eq. (45). If we sample the difference signal at a set of fast-time instants to obtain a sample vector and form the concatenated scene reflectivity vector, then, using the developed signal model in Eq.
(45), we obtain the linear system of equations in Eq. (47). Each column of the system matrix contains the received signal corresponding to a target at one pixel. Its elements can be written as in Eq. (48),70,83 in terms of the two-way signal travel time from the transmitter to that pixel and back to the receiver. Because the elements of each column are samples of the correspondingly delayed time signal, the denominator on the right-hand side of Eq. (48) is the energy in that time signal. Each column of the matrix therefore has unit norm. Further, if there is a target at a given pixel, the corresponding element of the scene vector is nonzero; otherwise, it is zero.

The CD model described in Eqs. (47) and (48) permits scene reconstruction within the CS framework. We measure a lower-dimensional vector whose elements are randomly chosen from the full set of time samples. The new measurements can be expressed as in Eq. (49), in terms of a measurement matrix. Several types of measurement matrices have been reported in the literature83,86,109 and the references therein, for example:

- a matrix whose elements are drawn from a Gaussian distribution;
- a matrix with random entries, each taking one of two values with probability 0.5;
- a matrix constructed by randomly selecting rows of an identity matrix.

It was shown in Ref. 83 that the measurement matrix with random elements requires the fewest compressive measurements for the same radar imaging performance and permits a relatively straightforward data acquisition implementation. We therefore use such a measurement matrix for image reconstruction. Given the reduced measurements for all transmitter-receiver pairs, we can recover the scene vector by solving the problem in Eqs. (50) and (51).

Equations (50) and (51) represent one strategy that can be adopted for sparsity-based CD: a reduced number of time samples is chosen randomly for all transmitter-receiver pairs constituting the array apertures. The two equations can also be extended so that the reduction in data measurements covers both spatial and time samples.
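A minimal Python/NumPy sketch of the CD sensing matrix follows. It makes several assumptions:

- Each column is the sampled return of a unit point target at one pixel, namely a Gaussian pulse times a carrier phase term, normalized by its energy as described for Eq. (48).
- The pulse shape, sampling step, and placeholder pixel delays are hypothetical.
- The reduction keeps 5% of the time samples using randomly selected identity rows, one of the three matrix types listed above. It is chosen here only for illustration and is not necessarily the type recommended in Ref. 83.

```python
import numpy as np

def cd_dictionary(t, delays, fc=3e9, sigma=0.3e-9):
    """Columns: sampled unit-target returns for each pixel delay, scaled to unit l2 norm."""
    tau = delays[None, :]
    cols = np.exp(-0.5 * ((t[:, None] - tau) / sigma) ** 2) * np.exp(-2j * np.pi * fc * tau)
    # Divide by the square root of each column's energy (unit-norm columns).
    return cols / np.linalg.norm(cols, axis=0, keepdims=True)

rng = np.random.default_rng(1)
t = np.arange(1536) * 0.05e-9                    # 1536 fast-time samples (step assumed)
delays = rng.uniform(10e-9, 60e-9, size=200)     # placeholder two-way delays, 200 pixels
Psi = cd_dictionary(t, delays)

# Keep 5% of the time samples: randomly selected rows of an identity matrix.
keep = np.sort(rng.choice(t.size, size=int(0.05 * t.size), replace=False))
Phi = np.eye(t.size)[keep]
A = Phi @ Psi                                    # reduced sensing matrix
```

In practice, selecting identity rows amounts to simply storing the chosen samples, so the full `Phi` never needs to be formed.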
The latter strategy is not considered in this section.

6.3. Illustrative Results

A through-the-wall wideband pulsed radar system was used for data collection in the Radar Imaging Lab at Villanova University. The system uses a 0.7-ns Gaussian pulse for scene interrogation. The pulse is up-converted to 3 GHz for transmission and down-converted to baseband through in-phase and quadrature demodulation on reception. The operational bandwidth of 1.5 to 4.5 GHz provides a range resolution of 5 cm. The peak transmit power is 25 dBm. Transmission is through a single horn antenna mounted on a tripod. An eight-element line array with an inter-element spacing of 0.06 m serves as the receiver and is placed to the right of the transmit antenna. The center-to-center separation between the transmitter and the leftmost receive antenna is 0.28 m, as shown in Fig. 10. A wall segment was constructed from 1-cm-thick cement board on a 2 × 4 wood stud frame. The transmit antenna and the receive array were at a standoff distance of 1.19 m from the wall. The system refresh rate is 100 Hz.

In the experiment, a person walked away from the wall along a straight-line path in an empty room, whose back and side walls were covered with RF absorbing material. The path is located 0.5 m to the right of the center of the scene, as shown in Fig. 10. Data collection started with the target at position 1 and ended after the target reached position 3, with the target pausing for a second at each position along the trajectory. Consider the data frames corresponding to the target at positions 2 and 3. Each frame consists of 20 pulses, which are coherently integrated to improve the signal-to-noise ratio. The imaging region (target space) is centered at (0.5 m, 4 m) and divided into 61 × 61 grid points in cross-range and downrange, resulting in 3721 unknowns. The space-time response of the target space consists of 8 × 1536 space-time measurements.
For sparsity-based CD, only 5% of the 1536 time samples (about 77 samples) are randomly selected at each of the eight receive antenna locations. Figure 11 depicts the corresponding result. The person changed range gate position during the time elapsed between the two acquisitions. The person therefore appears as two targets in the image and is correctly localized at both positions.

7. CS General Formulation for Stationary and Moving Targets

As seen in the previous sections, the presence of the front wall makes target detection very challenging and degrades scene reconstruction performance when CS is employed. Different strategies have been devised for suppressing wall clutter to enable target detection behind walls. Change detection enables detection and localization of moving targets, and clutter cancellation filtering provides another option.87,110 However, both methods also suppress the returns from stationary targets of interest along with the wall clutter, so subsequent application of CS recovers only the moving targets. Wall clutter mitigation methods can be applied to remove the wall and enable joint detection of stationary and moving targets. However, these methods assume monostatic operation with the array parallel to the front wall. They exploit the strength and invariance of the wall return across the array under such a deployment, and may therefore not perform as well in other situations. For multistatic imaging radar systems using ultra-wideband (UWB) pulses, an alternative is to employ time gating in lieu of the aforementioned clutter cancellation methods. The compact temporal support of the signal makes time gating a viable option for suppressing the wall returns.
Time gating enhances the SCR and maintains the sparsity of the scene. This permits the application of CS techniques for simultaneous localization of stationary and moving targets with few observations.74

7.1. Signal Model

Consider the scene layout depicted in Fig. 12. Although the transmit and receive arrays are assumed to be parallel to the front wall for notational simplicity, this is not a requirement. Consider a coherent processing interval consisting of a fixed number of pulses per transmitter, sent with a given pulse repetition interval. Consider also a single point target moving slowly away from the origin with constant horizontal and vertical velocity components, as depicted in Fig. 12. The target position at time zero serves as the reference position. Assume that the time needed to sequence through the transmitters is short enough that the target appears stationary during each data collection interval. The target position for each pulse then follows from the reference position advanced by the velocity multiplied by the elapsed slow time [Eq. (52)].

The baseband target return measured by a receiver for the pulse emitted by a transmitter is given by Eq. (53).74 There, the propagation delay for a given pulse is the travel time from the transmitter to the target at its current position and back to the receiver. With multiple point targets, the received target component is the superposition of the individual target returns in Eq. (53). Interactions between the targets and multipath returns are ignored in this model. Any stationary targets behind the wall are included in this model and correspond to the motion parameter pair (0, 0).
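The per-pulse target geometry of Eqs. (52) and (53) can be sketched as follows. The sketch assumes free-space propagation with the front wall ignored and (cross-range, downrange) coordinates. The element positions, velocities, and the 0.01 s pulse repetition interval (borrowed from the 100 Hz refresh rate of Sec. 6.3) are hypothetical. The target is frozen within each pulse and advances by its velocity times the pulse repetition interval between pulses.

```python
import numpy as np

C0 = 299_792_458.0  # speed of light (m/s)

def per_pulse_delays(p0, v, t_r, n_pulses, tx, rx):
    """Two-way free-space delays tx -> target -> rx for each pulse (wall ignored).

    Target position at pulse k is p0 + k * t_r * v (frozen during each pulse)."""
    k = np.arange(n_pulses)[:, None]
    pos = np.asarray(p0, float) + k * t_r * np.asarray(v, float)  # (n_pulses, 2)
    return (np.linalg.norm(pos - np.asarray(tx, float), axis=1)
            + np.linalg.norm(pos - np.asarray(rx, float), axis=1)) / C0

tx, rx = (0.0, 0.0), (0.28, 0.0)                          # hypothetical element positions (m)
moving = per_pulse_delays((0.2, 3.0), (0.0, -1.0), 0.01, 15, tx, rx)   # walking toward array
static = per_pulse_delays((0.49, 2.45), (0.0, 0.0), 0.01, 15, tx, rx)  # (0, 0) velocity pair
```

The stationary case is simply the (0, 0) velocity pair, so the same model covers both target types.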
The slowly moving targets are further assumed to remain within the same range cell over the coherent processing interval.

The wall, on the other hand, is a specular reflector. The baseband wall return received at a receiver for a pulse emitted by a transmitter can be expressed as in Eq. (54). That expression involves the propagation delay from the transmitter to the wall and back to the receiver, plus a term representing decaying wall reverberations that result from multiple reflections within the wall (see Fig. 13). The propagation delay can be expressed111 in terms of the point of reflection on the wall for that transmitter-receiver pair, as shown in Fig. 13. Since the wall is stationary, this delay does not vary from pulse to pulse, and the expression in Eq. (54) therefore assumes the same value for all pulses. Combining Eqs. (53) and (54), the total baseband signal received for a given pulse and active transmitter is the sum of the target returns and the wall return.

By gating out the wall return in the time domain, we gain access to the sparse behind-the-wall scene of a few stationary and moving targets of interest. The time-gated received signal therefore contains only contributions from the targets behind the wall, plus any wall residuals not fully removed by gating. In this section, we assume that wall clutter is effectively suppressed by gating. Using Eq. (57), we therefore obtain the gated signal model in Eq. (58).

7.2. Linear Model Formulation and CS Reconstruction

Divide the observed scene into a grid of pixels in cross-range and downrange, and consider a set of discrete values for each of the expected horizontal and vertical velocities. An image of the full pixel grid is thus associated with each considered pair of horizontal and vertical velocities, resulting in a four-dimensional (4-D) target space. The considered velocities include the (0, 0) pair so that stationary targets are represented.
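The effect of time gating can be illustrated with a toy fast-time trace in Python/NumPy. The pulse width, wall delay, reverberation spacing and decay, target delay, and gate time are all hypothetical. A strong front-wall return with decaying reverberations precedes a weak target echo. A hard gate that zeroes samples before the gate time leaves the target as the dominant return.

```python
import numpy as np

SIGMA = 0.3e-9  # hypothetical pulse width parameter (s)

def pulse(t, tau, amp):
    """Gaussian pulse of amplitude amp centered at delay tau."""
    return amp * np.exp(-0.5 * ((t - tau) / SIGMA) ** 2)

def time_gate(x, t, t_gate):
    """Hard gate: zero every fast-time sample earlier than t_gate."""
    return np.where(t >= t_gate, x, 0.0)

t = np.arange(0.0, 40e-9, 0.05e-9)
# Front-wall return followed by decaying reverberations (illustrative values).
wall = sum(pulse(t, 8e-9 + 0.5e-9 * i, 10.0 * 0.2 ** i) for i in range(3))
target = pulse(t, 20e-9, 0.1)
received = wall + target
gated = time_gate(received, t, 11e-9)
```

Because the UWB pulse is short, the wall and target returns barely overlap, so the gate removes the wall without distorting the target echo. With longer pulses or targets close to the wall, residuals would remain, as noted in the text.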
Sampling the received signal at a set of fast-time instants yields a vector. For each velocity pair, we vectorize the corresponding cross-range versus downrange image into a scene reflectivity vector. This vector is a weighted indicator vector defining the scene reflectivity for that velocity pair. If there is a target at a given spatial grid point with those motion parameters, the corresponding element is nonzero; otherwise, it is zero. Using the signal model in Eqs. (53) and (58), we obtain the linear system of equations in Eq. (59). Its matrix has one row per fast-time sample and one column per pixel. Each column contains the received signal corresponding to a target at one pixel with the given motion parameters. Its elements can be written as in Eq. (60), in terms of the two-way signal travel time for that pulse from the transmitter to the spatial grid point and back to the receiver.

Stacking the received signal samples for all pulses and all transmitter-receiver pairs yields the measurement vector in Eqs. (61) and (62). Forming the matrix in Eq. (63) then gives the linear matrix equation in Eq. (64). The unknown vector in that equation is the concatenation of the target reflectivity vectors for all considered velocity combinations.

The model in Eq. (64) permits scene reconstruction within the CS framework. We measure a lower-dimensional vector whose elements are randomly chosen from the full measurement vector. The reduced set of measurements can be expressed as in Eq. (65) in terms of a measurement matrix. For simultaneous measurement reduction along the spatial, slow-time, and fast-time dimensions, the measurement matrix has the specific structure given in Eq. (66), which includes an identity matrix whose subscript indicates its dimensions. The reduced numbers of transmit elements, receive elements, pulses, and fast-time samples determine the total number of reduced measurements, which is their product.
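The separable structure of the measurement matrix can be sketched with Kronecker products in Python/NumPy. The sketch simplifies the original in that one shared Gaussian fast-time matrix `F` is applied to every retained pulse, whereas Eq. (66) allows a separate fast-time matrix for each. All dimensions and names are hypothetical. The data are ordered as (transmitter, receiver, pulse, fast time) and flattened row-major. Under that ordering, applying the Kronecker product equals selecting transmitters, receivers, and pulses and then compressing each retained pulse along fast time.

```python
import numpy as np

def selection_matrix(n_full, n_keep, rng):
    """Randomly selected rows of an n_full x n_full identity matrix."""
    rows = np.sort(rng.choice(n_full, size=n_keep, replace=False))
    return np.eye(n_full)[rows]

rng = np.random.default_rng(2)
T, Rx, P, Nt = 1, 8, 15, 32          # transmitters, receivers, pulses, fast-time samples
S_t = selection_matrix(T, 1, rng)
S_r = selection_matrix(Rx, 8, rng)
S_p = selection_matrix(P, 5, rng)    # keep 5 of 15 pulses
F = rng.standard_normal((8, Nt))     # Gaussian fast-time compression (shared here)

Phi = np.kron(np.kron(np.kron(S_t, S_r), S_p), F)

D = rng.standard_normal((T, Rx, P, Nt))   # full data cube (row-major vectorization)
z = Phi @ D.ravel()
# Same result without forming Phi: select along each axis, then compress fast time.
z_direct = np.einsum('ai,bj,ck,dl,ijkl->abcd', S_t, S_r, S_p, F, D).ravel()
```

The einsum form avoids building the large `Phi` explicitly, which matters for realistic data sizes.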
The four component matrices have as many rows as the reduced numbers of transmit elements, receive elements, pulses, and fast-time samples, respectively. They have as many columns as the corresponding full numbers, and they determine the retained transmitting elements, receiving elements, and pulses and the compressed fast-time samples. Each of the three selection matrices for the transmit elements, receive elements, and pulses consists of randomly selected rows of an identity matrix. These choices amount to selecting subsets of the degrees of freedom offered by the fully deployed imaging system. Any other matrix structure would yield no hardware simplification or saving in acquisition time. On the other hand, three different choices, discussed in Sec. 6.2, are available for compressive acquisition of each pulse in fast time.

Given the reduced measurement vector in Eq. (65), we can recover the scene vector by solving the corresponding sparse reconstruction problem. The reconstructed vector can be rearranged into one image per velocity combination, each with the dimensions of the pixel grid, to depict the estimated target reflectivity for the different combinations of vertical and horizontal velocity. Note the following:

1. Stationary targets are localized in the image for the (0, 0) velocity pair.
2. Two targets at the same spatial location but moving with different velocities are distinguished, and their reflectivities and motion parameters are estimated.

7.3. Illustrative Results

A real data collection experiment was conducted in the Radar Imaging Laboratory, Villanova University. The system and signal parameters are the same as described in Sec. 6.3. The origin of the coordinate system was at the center of the receive array. The scene behind the wall consisted of one stationary target and one moving target, as shown in Fig. 14. The stationary target was a metal sphere of 0.3 m diameter, placed on a 1-m-high Styrofoam pedestal. The pedestal was located 1.25 m behind the wall, centered at (0.49 m, 2.45 m).
A person walked toward the front wall along a straight-line path located 0.2 m to the right of the transmitter. The back wall and the right side wall in the region behind the front wall were covered with RF absorbing material. The 8-in.-thick concrete side wall on the left and the floor were left uncovered. A coherent processing interval of 15 pulses was selected. The image region was divided into a grid of pixels in cross-range and downrange. As the person moves directly toward the radar, we consider only the vertical velocity, sampled at three values including zero, which results in three velocity pixels. The space-slow time-fast time response of the scene spans the eight receivers, the 15 pulses, and the fast-time samples of each pulse.

First, we reconstruct the scene without time gating the wall response. Only 33.3% of the 15 pulses and 13.9% of the fast-time samples are randomly selected for each of the eight receive elements, which is equivalent to 4.6% of the total data volume. Figure 15 depicts the CS-based result for the three velocity bins, obtained with the number of OMP iterations set to 50. Figures 15(a) and 15(b) show that neither the stationary sphere nor the moving person can be localized. The reason for this failure is twofold:

1. The front wall is a strong extended target, so most of the degrees of freedom of the reconstruction process are used up by the wall.
2. The returns from the moving and stationary targets are much weaker than the front wall reflections. The resulting low SCR prevents the targets from being reconstructed with the residual degrees of freedom of OMP.

These results confirm that the presence of the front wall hinders the performance of the sparse reconstruction scheme.

Fig. 15 Imaging result for both stationary and moving targets without time gating: (a)–(c) CS reconstructed images for the three considered velocity bins.
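For reference, a textbook orthogonal matching pursuit (OMP) with a fixed iteration count is sketched below in Python/NumPy. It is a generic illustration, not the authors' implementation. Each iteration adds exactly one atom to the support, so the iteration count acts as the reconstruction's degrees-of-freedom budget discussed above. A strong extended wall spread over many atoms can exhaust that budget before weak targets are selected. Once the wall is gated out, a few iterations suffice. The random unit-norm dictionary and the two-target scene are hypothetical test data.

```python
import numpy as np

def omp(A, y, n_iter):
    """Orthogonal matching pursuit with a fixed number of iterations.

    Columns of A are assumed to have comparable (here unit) norms."""
    support = []
    coef = np.zeros(0, dtype=complex)
    residual = y.astype(complex)
    for _ in range(n_iter):
        corr = np.abs(A.conj().T @ residual)
        corr[support] = 0.0                  # never reselect an atom
        support.append(int(np.argmax(corr)))
        # Least-squares fit on the current support, then update the residual.
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    x = np.zeros(A.shape[1], dtype=complex)
    x[support] = coef
    return x, support

rng = np.random.default_rng(3)
A = rng.standard_normal((100, 300))
A /= np.linalg.norm(A, axis=0)                   # unit-norm atoms
y = 0.5 * A[:, 150] + 0.5 * A[:, 250]            # two weak targets, wall already gated out
x_hat, support = omp(A, y, n_iter=4)
```

After the two true atoms are found, the residual vanishes, and any further iterations add atoms with essentially zero coefficients.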
After the front wall return is removed from the received signals through time gating, the space-slow time-fast time data comprise 8 × 15 × 2048 measurements. For CS, we used all eight receivers, randomly selected five pulses (33.3% of 15), and took 400 Gaussian random measurements (19.5% of 2048) in fast time, which amounts to 6.5% of the total data volume. The number of OMP iterations was set to 4. Figures 16(a)–16(c) show the images for the three considered velocities, including the zero velocity. With the wall gated out, both the stationary and moving targets are correctly localized, even with the reduced set of measurements.

8. Conclusion

In this paper, we presented a review of important approaches for sparse behind-the-wall scene reconstruction using CS. These approaches address the unique challenges associated with fast and efficient imaging in urban operations.

First, considering stepped-frequency SAR operation, we presented a linear matrix modeling formulation that enables sparsity-based reconstruction of a scene of stationary targets from a significantly reduced data volume. Access to a background scene without the targets of interest was assumed, which renders the scene sparse upon coherent subtraction. Subsequent sparse reconstruction using a much reduced data volume was shown to successfully detect and accurately localize the targets.

Second, assuming no prior access to a background scene, we examined the performance of joint wall backscatter mitigation and sparse scene reconstruction in TWRI applications. We focused on the subspace projection approach, which is a leading method for combating wall clutter. Using real data collected with a stepped-frequency radar, we demonstrated that the subspace projection method maintains proper performance when acting on reduced data measurements.

Third, a sparsity-based approach for imaging the interior building structure was presented.
The technique used prior information about construction practices for interior walls both to devise an appropriate linear model and to design a sparsifying dictionary based on the expected wall alignment relative to the radar’s scan direction. The scheme was shown to reliably determine building layouts while achieving a substantial reduction in data volume. Fourth, we described a group sparse reconstruction method that exploits the rich indoor multipath environment for improved target detection under efficient data collection. A ray-tracing approach was used to derive a multipath model that accounts not only for reflections due to target interactions with interior walls but also for multipath propagation resulting from ringing within the front wall. Using stepped-frequency radar data, this technique was shown to reconstruct the ground truth without multipath ghosts and to increase the SCR at the true target locations. Fifth, we detected and localized moving humans behind walls and inside enclosed structures using an approach that combines sparsity-driven radar imaging and change detection. Removal of the stationary background via CD resulted in a sparse scene of moving targets, which allowed CS schemes to exploit the full benefits of sparsity-driven imaging. An appropriate CD linear model was developed that allowed scene reconstruction within the CS framework. Using pulsed radar operation, we demonstrated that CS provides a sizable reduction in data volume without degrading system performance. Finally, we presented a CS-based technique for joint localization of stationary and moving targets in TWRI applications. The front wall returns were suppressed through time gating, which was made possible by the short temporal support of the UWB transmit waveform. The SCR enhancement resulting from time gating permitted the application of CS techniques for scene reconstruction with few observations.
We established a signal model that enabled a linear formulation with sensing matrices for reconstructing the downrange-cross-range-velocity space. Results based on real data experiments demonstrated that joint localization of stationary and moving targets can be achieved via sparse regularization using a reduced set of measurements without any degradation in system performance.

References

Through-the-Wall Radar Imaging, CRC Press, Boca Raton, FL (2010).

“Special issue on Advances in Indoor Radar Imaging,” J. Franklin Inst., 345 (6), 556–722 (2008). http://dx.doi.org/10.1016/j.jfranklin.2008.05.001

“Special issue on remote sensing of building interior,” IEEE Trans. Geosci. Rem. Sens., 47 (5), 1270–1420 (2009). http://dx.doi.org/10.1109/TGRS.2009.2017053

E. Baranoski, “Through-wall imaging: historical perspective and future directions,” J. Franklin Inst., 345 (6), 556–569 (2008). http://dx.doi.org/10.1016/j.jfranklin.2008.01.005

S. E. Borek, “An overview of through-the-wall surveillance for homeland security,” in Proc. 34th Applied Imagery and Pattern Recognition Workshop, 19–21 (2005).

H. Burchett, “Advances in through wall radar for search, rescue and security applications,” in Proc. Inst. of Eng. and Tech. Conf. Crime and Security, 511–525 (2006).

A. Martone, K. Ranney, and R. Innocenti, “Through-the-wall detection of slow-moving personnel,” Proc. SPIE, 7308, 73080Q1 (2009). http://dx.doi.org/10.1117/12.818513

X. P. Masbernat et al., “An MIMO-MTI approach for through-the-wall radar imaging applications,” in Proc. 5th Int. Waveform Diversity and Design Conf. (2010).

M. G. Amin and F. Ahmad, “Change detection analysis of humans moving behind walls,” IEEE Trans. Aerosp. Electron. Syst., 49 (3) (2013).

M. Amin, F. Ahmad, and W. Zhang, “A compressive sensing approach to moving target indication for urban sensing,” in Proc. IEEE Radar Conf., 509–512 (2011).

J. Moulton et al., “Target and change detection in synthetic aperture radar sensing of urban structures,” in Proc. IEEE Radar Conf. (2008).

A. Martone, K. Ranney, and R. Innocenti, “Automatic through-the-wall detection of moving targets using low-frequency ultra-wideband radar,” in Proc. IEEE Radar Conf., 39–43 (2010).

S. S. Ram and H. Ling, “Through-wall tracking of human movers using joint Doppler and array processing,” IEEE Geosci. Rem. Sens. Lett., 5 (3), 537–541 (2008). http://dx.doi.org/10.1109/LGRS.2008.924002

C. P. Lai and R. M. Narayanan, “Through-wall imaging and characterization of human activity using ultrawideband (UWB) random noise radar,” Proc. SPIE, 5778, 186–195 (2005). http://dx.doi.org/10.1117/12.604154

C. P. Lai and R. M. Narayanan, “Ultrawideband random noise radar design for through-wall surveillance,” IEEE Trans. Aerosp. Electron. Syst., 46 (4), 1716–1730 (2010). http://dx.doi.org/10.1109/TAES.2010.5595590

S. S. Ram et al., “Doppler-based detection and tracking of humans in indoor environments,” J. Franklin Inst., 345 (6), 679–699 (2008). http://dx.doi.org/10.1016/j.jfranklin.2008.04.001

E. F. Greneker, “RADAR flashlight for through-the-wall detection of humans,” Proc. SPIE, 3375, 280–285 (1998). http://dx.doi.org/10.1117/12.327172

T. Thayaparan, L. Stankovic, and I. Djurovic, “Micro-Doppler human signature detection and its application to gait recognition and indoor imaging,” J. Franklin Inst., 345 (6), 700–722 (2008). http://dx.doi.org/10.1016/j.jfranklin.2008.01.003

I. Orovic, S. Stankovic, and M. Amin, “A new approach for classification of human gait based on time-frequency feature representations,” Signal Process., 91 (6), 1448–1456 (2011). http://dx.doi.org/10.1016/j.sigpro.2010.08.013

A. R. Hunt, “Use of a frequency-hopping radar for imaging and motion detection through walls,” IEEE Trans. Geosci. Rem. Sens., 47 (5), 1402–1408 (2009). http://dx.doi.org/10.1109/TGRS.2009.2016084

F. Ahmad, M. G. Amin, and P. D. Zemany, “Dual-frequency radars for target localization in urban sensing,” IEEE Trans. Aerosp. Electron. Syst., 45 (4), 1598–1609 (2009). http://dx.doi.org/10.1109/TAES.2009.5310321

N. Maaref et al., “A study of UWB FM-CW Radar for the detection of human beings in motion inside a building,” IEEE Trans. Geosci. Rem. Sens., 47 (5), 1297–1300 (2009). http://dx.doi.org/10.1109/TGRS.2008.2010709

F. Soldovieri, R. Solimene, and R. Pierri, “A simple strategy to detect changes in through the wall imaging,” Prog. Electromagn. Res. M, 7, 1–13 (2009).

T. S. Ralston, G. L. Charvat, and J. E. Peabody, “Real-time through-wall imaging using an ultrawideband multiple-input multiple-output (MIMO) phased array radar system,” in Proc. IEEE Intl. Symp. Phased Array Systems and Technology, 551–558 (2010).

F. Ahmad et al., “Design and implementation of near-field, wideband synthetic aperture beamformers,” IEEE Trans. Aerosp. Electron. Syst., 40 (1), 206–220 (2004). http://dx.doi.org/10.1109/TAES.2004.1292154

F. Ahmad, M. G. Amin, and S. A. Kassam, “Synthetic aperture beamformer for imaging through a dielectric wall,” IEEE Trans. Aerosp. Electron. Syst., 41 (1), 271–283 (2005). http://dx.doi.org/10.1109/TAES.2005.1413761

M. G. Amin and F. Ahmad, “Wideband synthetic aperture beamforming for through-the-wall imaging,” IEEE Signal Process. Mag., 25 (4), 110–113 (2008). http://dx.doi.org/10.1109/MSP.2008.923510

F. Ahmad and M. Amin, “Multi-location wideband synthetic aperture imaging for urban sensing applications,” J. Franklin Inst., 345 (6), 618–639 (2008). http://dx.doi.org/10.1016/j.jfranklin.2008.03.003

F. Soldovieri and R. Solimene, “Through-wall imaging via a linear inverse scattering algorithm,” IEEE Geosci. Rem. Sens. Lett., 4 (4), 513–517 (2007). http://dx.doi.org/10.1109/LGRS.2007.900735

F. Soldovieri, G. Prisco, and R. Solimene, “A multi-array tomographic approach for through-wall imaging,” IEEE Trans. Geosci. Rem. Sens., 46 (4), 1192–1199 (2008). http://dx.doi.org/10.1109/TGRS.2008.915754

E. M. Lavely et al., “Theoretical and experimental study of through-wall microwave tomography inverse problems,” J. Franklin Inst., 345 (6), 592–617 (2008). http://dx.doi.org/10.1016/j.jfranklin.2008.01.006

M. M. Nikolic et al., “An approach to estimating building layouts using radar and jump-diffusion algorithm,” IEEE Trans. Antennas Propag., 57 (3), 768–776 (2009). http://dx.doi.org/10.1109/TAP.2009.2013420

C. Le et al., “Ultrawideband (UWB) radar imaging of building interior: measurements and predictions,” IEEE Trans. Geosci. Rem. Sens., 47 (5), 1409–1420 (2009). http://dx.doi.org/10.1109/TGRS.2009.2016653

E. Ertin and R. L. Moses, “Through-the-wall SAR attributed scattering center feature estimation,” IEEE Trans. Geosci. Rem. Sens., 47 (5), 1338–1348 (2009). http://dx.doi.org/10.1109/TGRS.2008.2008999

M. Aftanas and M. Drutarovsky, “Imaging of the building contours with through the wall UWB radar system,” Radioeng. J., 18 (3), 258–264 (2009).

F. Ahmad, Y. Zhang, and M. G. Amin, “Three-dimensional wideband beamforming for imaging through a single wall,” IEEE Geosci. Rem. Sens. Lett., 5 (2), 176–179 (2008). http://dx.doi.org/10.1109/LGRS.2008.915742

L. P. Song, C. Yu, and Q. H. Liu, “Through-wall imaging (TWI) by radar: 2-D tomographic results and analyses,” IEEE Trans. Geosci. Rem. Sens., 43 (12), 2793–2798 (2005). http://dx.doi.org/10.1109/TGRS.2005.857914

M. Dehmollaian, M. Thiel, and K. Sarabandi, “Through-the-wall imaging using differential SAR,” IEEE Trans. Geosci. Rem. Sens., 47 (5), 1289–1296 (2009). http://dx.doi.org/10.1109/TGRS.2008.2010052

M. Dehmollaian and K. Sarabandi, “Refocusing through building walls using synthetic aperture radar,” IEEE Trans. Geosci. Rem. Sens., 46 (6), 1589–1599 (2008). http://dx.doi.org/10.1109/TGRS.2008.916212

F. Ahmad and M. G. Amin, “Noncoherent approach to through-the-wall radar localization,” IEEE Trans. Aerosp. Electron. Syst., 42 (4), 1405–1419 (2006). http://dx.doi.org/10.1109/TAES.2006.314581

F. Ahmad and M. G. Amin, “A noncoherent radar system approach for through-the-wall imaging,” Proc. SPIE, 5778, 196–207 (2005). http://dx.doi.org/10.1117/12.609867

Y. Yang and A. Fathy, “Development and implementation of a real-time see-through-wall radar system based on FPGA,” IEEE Trans. Geosci. Rem. Sens., 47 (5), 1270–1280 (2009). http://dx.doi.org/10.1109/TGRS.2008.2010251

F. Ahmad and M. G. Amin, “High-resolution imaging using capon beamformers for urban sensing applications,” in Proc. IEEE Int. Conf. on Acoustics, Speech, and Signal Process., II-985–II-988 (2007).

M. Soumekh, Synthetic Aperture Radar Signal Processing with Matlab Algorithms, John Wiley and Sons, New York, NY (1999).

Y-S. Yoon and M. G. Amin, “Spatial filtering for wall-clutter mitigation in through-the-wall radar imaging,” IEEE Trans. Geosci. Rem. Sens., 47 (9), 3192–3208 (2009). http://dx.doi.org/10.1109/TGRS.2009.2019728

R. Burkholder, “Electromagnetic models for exploiting multi-path propagation in through-wall radar imaging,” in Proc. Int. Conf. Electromagnetics in Advanced Applications, 572–575 (2009).

T. Dogaru and C. Le, “SAR images of rooms and buildings based on FDTD computer models,” IEEE Trans. Geosci. Rem. Sens., 47 (5), 1388–1401 (2009). http://dx.doi.org/10.1109/TGRS.2009.2013841

S. Kidera, T. Sakamoto, and T. Sato, “Extended imaging algorithm based on aperture synthesis with double-scattered waves for UWB radars,” IEEE Trans. Geosci. Rem. Sens., 49 (12), 5128–5139 (2011). http://dx.doi.org/10.1109/TGRS.2011.2158108

P. Setlur, M. Amin, and F. Ahmad, “Multipath model and exploitation in through-the-wall and urban radar sensing,” IEEE Trans. Geosci. Rem. Sens., 49 (10), 4021–4034 (2011). http://dx.doi.org/10.1109/TGRS.2011.2128331

F. Ahmad, M. G. Amin, and S. A. Kassam, “A beamforming approach to stepped-frequency synthetic aperture through-the-wall radar imaging,” in Proc. IEEE Int. Workshop on Computational Advances in Multi-Sensor Adaptive Processing, 24–27 (2005).

F. Ahmad and M. G. Amin, “Performance of autofocusing schemes for single target and populated scenes behind unknown walls,” Proc. SPIE, 6547, 654709 (2007). http://dx.doi.org/10.1117/12.720085

F. Ahmad, M. G. Amin, and G. Mandapati, “Autofocusing of through-the-wall radar imagery under unknown wall characteristics,” IEEE Trans. Image Process., 16 (7), 1785–1795 (2007). http://dx.doi.org/10.1109/TIP.2007.899030

G. Wang and M. G. Amin, “Imaging through unknown walls using different standoff distances,” IEEE Trans. Signal Process., 54 (10), 4015–4025 (2006). http://dx.doi.org/10.1109/TSP.2006.879325

G. Wang, M. G. Amin, and Y. Zhang, “A new approach for target locations in the presence of wall ambiguity,” IEEE Trans. Aerosp. Electron. Syst., 42 (1), 301–315 (2006). http://dx.doi.org/10.1109/TAES.2006.1603424

Y. Yoon and M. G. Amin,