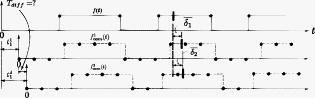

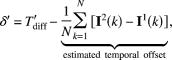

1. Introduction

For multicamera systems, synchronization is essential to provide accurate temporal correlation when combining image information from multiple viewpoints. Synchronicity can be achieved through real-time hardware synchronization [1] or by establishing a time relationship between sequences recorded by unsynchronized video cameras [2]. Hardware solutions ensure high-precision synchronization but are costly and complex. When hardware-synchronized video sequences are not feasible, synchronicity can still be obtained using image features [3, 4, 5]. These feature-based methods depend on the existence of salient and robust features in the scene. The absence of such features, or errors in detecting, tracking, and matching them, leads to incorrect synchronization. In this paper, we present a simple yet effective method, termed random on-off light source (ROOLS), to recover the temporal offset at subframe accuracy. It uses an auxiliary light source such as an LED to provide temporal cues. Compared to special-purpose hardware approaches, our method is far less complex and is inexpensive. Compared to feature-based approaches, ROOLS is more robust since it is completely independent of scene properties.

2. Problem Statement

Without loss of generality, we consider the case of two video cameras. Let the time instances of the video frames taken by the ’th camera be denoted by where denotes the length of the ’th sequence, and denotes the time of the ’th frame in the ’th sequence. Note that and are measured by a common clock.

In a typical situation, identical video cameras of constant frame interval are used, when Synchronizing two video sequences in such a situation is equivalent to measuring the temporal offset between their initial frames

3. Proposed Method

3.1. Formulation

We propose to use a single temporally coded light source such as an LED as the signal to be captured by the cameras for synchronization.
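As a reading aid, the setup of Section 2 can be written compactly as follows. The symbol names ($t^{i}_{k}$ for the time of the $k$th frame of camera $i$, $N_i$ for the sequence length, $T$ for the common frame interval, $\Delta$ for the offset) and the sign convention of $\Delta$ are assumptions chosen here for illustration; they may differ from the paper's own notation.

```latex
\begin{align}
  % Frame times of camera i (i = 1, 2), measured on a common clock,
  % for identical cameras with constant frame interval T
  t^{i}_{k} &= t^{i}_{1} + (k - 1)\,T, \qquad k = 1, \dots, N_i, \\
  % Temporal offset between the initial frames of the two sequences
  \Delta &= t^{2}_{1} - t^{1}_{1}.
\end{align}
```

Under this convention, synchronizing the two sequences amounts to estimating the single scalar $\Delta$, which in general is not an integer multiple of $T$.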
The light signal is essentially a time-continuous binary-valued function denoted as It is sampled at by the ’th camera, producing a time-discrete binary-valued sequence Since is binary valued, it can be characterized by the time instants where its function value rises from 0 to 1 or drops from 1 to 0. We term each of these instants a transition event. Let denote a subsequence of all transition events in , For each , we have where ⊕ denotes the exclusive-or operator, and is an arbitrarily small positive real number. For the ’th camera, let denote the transition events corresponding to . Obviously, as part of , can be expressed as follows: where is a subsequence of . For each , it satisfies This notation and the relationships among these quantities are illustrated in Fig. 1.

3.2. Achieving Sub-Frame-Interval Precision

Given and , consider the difference between a pair of corresponding transition events: Provided that is an independent and identically distributed (i.i.d.) random variable uniformly distributed over with mean and variance being and . The averaged difference is a random variable with mean and variance being and . However, where and are the mean positions of transition events with respect to and , as illustrated by Fig. 1. According to the central limit theorem [6], the averaged sum of a sufficiently large number of i.i.d. random variables, each with finite mean and variance, approximates a normal distribution. Hence, takes a normal distribution with mean of and variance of . From Eq. 11 we obtain Since , Eq. 12 can be further written as where , . When is sufficiently large, the variance of will be negligibly small, leading to high-precision estimation of .

3.3. Transition Detection Accuracy

In real-world applications, the binary sequence is obtained by quantizing the image intensity of the light source with a certain threshold . For samples crossing transition events, the quantized binary value might flip, causing the transition event to shift one frame backward.
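The averaging argument of Section 3.2 can be checked numerically with a small simulation. The sketch below is not the authors' code: the function name `estimate_offset`, the camera model (each camera registers a transition at its first frame taken at or after the true transition time), and the estimator (the mean difference of the detected frame indices, times the frame interval) are assumptions inferred from the description. The gap of 2 to 6 frame intervals between transitions is borrowed from the values reported in Section 4.1, and threshold-induced shifts (Section 3.3) are not modeled.

```python
import math
import random


def estimate_offset(true_offset, n_transitions=200, frame_interval=1.0, seed=0):
    """Estimate the offset between two simulated cameras from light transitions.

    Assumed model (illustrative only): camera 1 takes frames at k * T and
    camera 2 at true_offset + k * T; each camera registers a transition at
    its first frame taken at or after the true transition time.
    """
    rng = random.Random(seed)
    T = frame_interval
    t = 0.0  # time of the current light transition on the common clock
    index_diffs = []
    for _ in range(n_transitions):
        # Random gap of 2 to 6 frame intervals between consecutive transitions.
        t += rng.uniform(2.0 * T, 6.0 * T)
        k1 = math.ceil(t / T)                  # frame index seen by camera 1
        k2 = math.ceil((t - true_offset) / T)  # frame index seen by camera 2
        index_diffs.append(k1 - k2)
    # Each difference is a whole number of frames; their average is not,
    # which is what gives the estimate its sub-frame resolution.
    return T * sum(index_diffs) / len(index_diffs)
```

Calling `estimate_offset(0.3)` returns a value close to 0.3 frame intervals even though every individual index difference is an integer. In this model, raising `n_transitions` tightens the estimate, in line with the variance argument above.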
For instance, if a sample is taken right before the edge where the signal rises from 0 to 1, its intensity is close to 1 and is incorrectly quantized to 1. Equation 13 tells us that the shift will introduce additional error to the estimation. Suppose the light source intensity is normalized and is chosen as the threshold so that the probabilities for transitions to flip from 0 to 1 and 1 to 0 are identical. Let denote a single shift event in a video sequence . Its probability density function (pdf) is , with expectation and variance . Let denote the averaged transition shift. Because are i.i.d. random variables, once again by the central limit theorem, we have . According to Eq. 13, the extra error introduced by transition shift in two video sequences turns out to be . It can be proved that takes a normal distribution with mean of 0 and variance of . Counting in , the total error of temporal offset estimation is

3.4. Random Binary Sequence Design

The proposed method requires to be i.i.d. random variables uniformly distributed in . To achieve this, we set the transition time where is the time of the previous transition and is uniformly distributed in . Transition times generated in this way can be proved to meet the requirement.

3.5. Transition Matching

The estimation of the temporal offset requires that the transition events of the two cameras be matched. We refer to this process as transition matching. Let the segment between two consecutive transition events be denoted as : The binary sequence can be equivalently represented by a sequence of transition segments. Let denote the difference between two segments. Transition matching can be equivalently achieved by matching two sequences of transition segments. Based on the observation that the difference between corresponding segments would be small, optimal transition matching can be obtained by solving the following formula: where denotes the number of overlapping segments, and and are the lengths of the two segment sequences.
The first term assesses the similarity of two overlapping segment sequences. However, considering only the first term may lead to erroneous matching due to a short overlapping length . To avoid this, the second term is introduced to give a large penalty for small . The optimal solution is sought by evaluating all combinations of and .

4. Experiments

4.1. Hardware and Configuration

The experimental system is made up of two Sony HVR-V1 high-definition (HD) video cameras, an LED array clock providing the ground truth, and a single temporally encoded LED light source. The video cameras operate at 200 frames per second. The values of and in Eq. 15 are set to 2 and 6, which ensures that there are at least two frames between adjacent transition events and avoids ambiguity in transition matching. We selected to quantize the image intensity of the LED to a binary value.

4.2. Experiment Results

We conducted three groups of experiments under illumination conditions including daylight, fluorescent lighting, and darkness. The results are shown in Table 1. In all the tests, only 200 transition events were used. The average estimation error was about 0.08 frame intervals. We observe that all estimation errors are less than 0.2 frame intervals; this is explained in Section 4.4.

Table 1. Results of 10 experiments.

4.3. Comparison with Other Methods

The proposed method was compared with the feature-based approaches [3, 4, 5], and the results are summarized in Table 2. The comparison indicates that ROOLS achieves higher estimation accuracy than the existing approaches.

Table 2. Average temporal offset error of various approaches.

4.4. Analysis and Discussions

A property of the normal distribution is that 3 standard deviations from the mean account for about 99.7% of the distribution. When 200 transitions are used, according to Eq. 14, the standard deviation is about 0.05. The estimation error is therefore bounded, with about 99.7% probability, within three standard deviations, i.e., about 0.15 frame intervals. This explains why the estimation errors in Table 1 are bounded within 0.2 frame intervals. The performance of the proposed method can be improved by increasing the number of transition events used.

5. Conclusion

We presented an innovative approach toward synchronizing commercial video cameras. It achieved high-precision synchronization at the low cost of adding only a simple temporally coded light source. The requirement that the video cameras have identical frame rates is not a serious limitation, since using identical video cameras for one task is convenient and typical.

Acknowledgments

The research work presented in this paper is supported by the National Natural Science Foundation of China, Grant No. 60875024.

References

[1] T. Kanade, H. Saito, and S. Vedula, The 3D Room: Digitizing Time-Varying 3D Events by Synchronized Multiple Video Streams (1998).

[2] A. Whitehead, R. Laganiere, and P. Bose, “Temporal synchronization of video sequences in theory and in practice,” 132–137 (2005).

[3] Y. Caspi and M. Irani, “Spatio-temporal alignment of sequences,” IEEE Trans. Pattern Anal. Mach. Intell. 24, 1409–1424 (2002). https://doi.org/10.1109/TPAMI.2002.1046148

[4] C. Rao, A. Gritai, M. Shah, and T. Syeda-Mahmood, “View-invariant alignment and matching of video sequences,” 939–945 (2003).

[5] S. N. Sinha and M. Pollefeys, “Synchronization and calibration of camera networks from silhouettes,” 116–119 (2004).

[6] W. Feller, An Introduction to Probability Theory and Its Applications, Vol. 2, Wiley, New York (1971).
